3D perception and SLAM using geometric and semantic information for mine inspection with a quadruped robot

Supervisors: Linus Nwankwo, M.Sc.; Univ.-Prof. Dr Elmar Rückert

Start date: As soon as possible

Theoretical difficulty: mid

Practical difficulty: high

Abstract

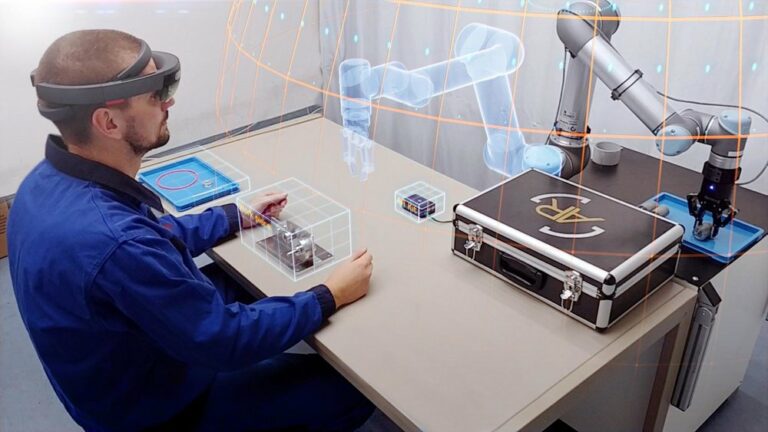

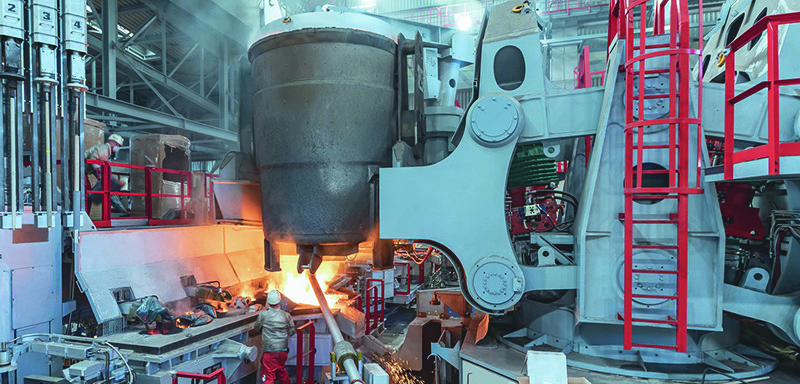

Traditional mine inspection is slow, struggles with difficult terrain, and achieves only limited coverage. This project/thesis investigates novel 3D perception and SLAM methods that use geometric and semantic information for real-time mine inspection.

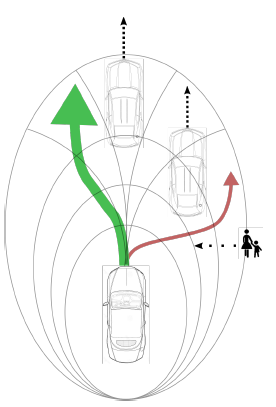

We propose to develop a SLAM approach that takes into account the terrain of the mining site and the sensor characteristics to ensure complete coverage of the environment while minimizing traversal time.
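To make the coverage objective concrete, the following minimal sketch computes a back-and-forth (boustrophedon) visiting order over a small occupancy grid and checks that every free cell is covered. The grid layout and the names `boustrophedon_path` and `coverage_ratio` are illustrative assumptions, not part of the proposed system. The sketch ignores terrain cost, sensor range, and obstacle avoidance between consecutive cells, all of which the thesis would need to address.

```python
from typing import List, Tuple

Cell = Tuple[int, int]  # (row, column) index into the grid


def boustrophedon_path(grid: List[List[int]]) -> List[Cell]:
    """Return a visiting order over all free cells (value 0).

    Rows are swept alternately left-to-right and right-to-left.
    Occupied cells (value 1) are skipped; this sketch only orders the
    cells and does not plan a collision-free route between them.
    """
    path: List[Cell] = []
    for r, row in enumerate(grid):
        if r % 2 == 0:
            cols = range(len(row))
        else:
            cols = range(len(row) - 1, -1, -1)
        for c in cols:
            if row[c] == 0:
                path.append((r, c))
    return path


def coverage_ratio(grid: List[List[int]], path: List[Cell]) -> float:
    """Fraction of free cells that appear at least once in the path."""
    free = sum(1 for row in grid for cell in row if cell == 0)
    return len(set(path)) / free if free else 1.0


# Hypothetical 3x3 map with a single obstacle in the centre.
example_grid = [
    [0, 0, 0],
    [0, 1, 0],
    [0, 0, 0],
]
example_path = boustrophedon_path(example_grid)
print(example_path)
print(coverage_ratio(example_grid, example_path))
```

A real planner would replace the fixed sweep with a cost-aware ordering, for example one that weights each move by terrain difficulty, so that traversal time can be traded off against coverage.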

Tentative Work Plan

To achieve this objective, we will focus on the following concrete tasks:

-

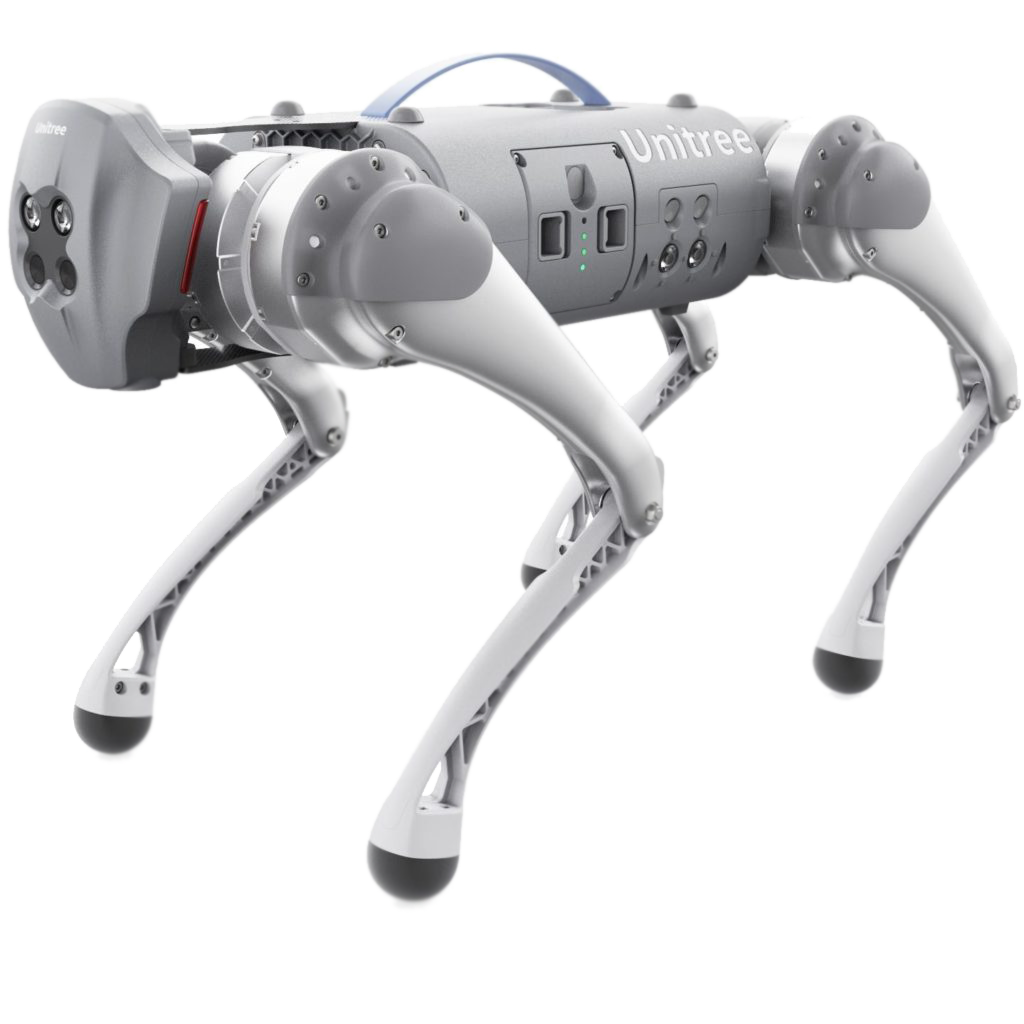

Study the concept of 3D perception and SLAM for mine inspection, as well as algorithm development, system integration and real-world demonstration using Unitree Go1 quadrupedal robot.

- Set up and familiarize yourself with the simulation environment:

  - Build the robot model (URDF) for the simulation (optional if you wish to use the existing one)

  - Set up the ROS framework for the simulation (Gazebo, RViz)

  - Recommended programming tools: C++, Python, MATLAB

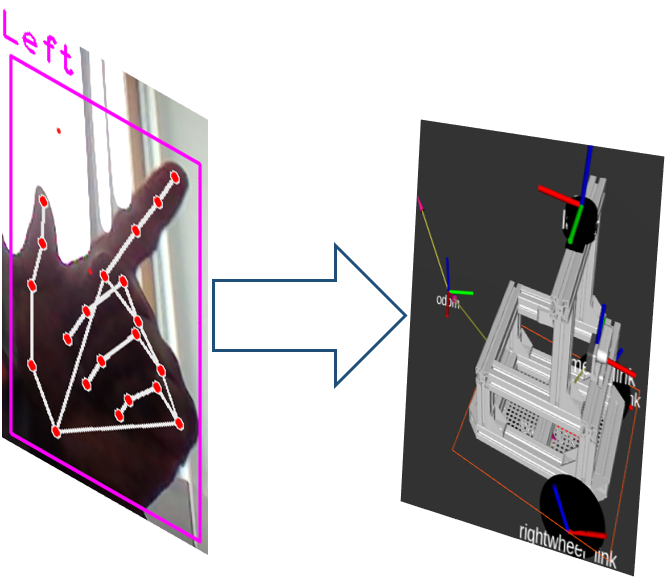

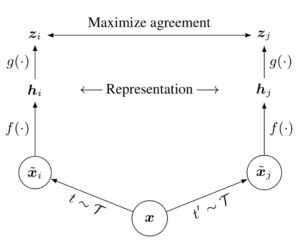

- Develop a novel SLAM system for the quadrupedal robot to navigate, map and interact with challenging real-world environments:

  - 2D/3D mapping in complex indoor/outdoor environments

  - Localization using either Monte Carlo localization or an extended Kalman filter

  - Complete coverage path planning

- Intermediate presentation:

  - Presentation of the results of the literature study

  - Opportunity to ask questions about the theoretical background

  - Detailed planning of the next steps

- Implementation:

  - Simulate the achieved results in a virtual environment (Gazebo, RViz, etc.)

  - Real-time testing on the Unitree Go1 quadrupedal robot

- Evaluate the performance in various challenging real-world environments, including outdoor terrains, urban environments, and indoor environments with complex structures.

- M.Sc. thesis or research paper writing (optional)
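As an illustration of the Monte Carlo localization option named in the work plan, the following minimal sketch runs a particle filter for a robot moving along a single axis. It assumes a hypothetical setup: one landmark at a known position, a sensor that returns the signed distance to that landmark, and Gaussian motion and sensor noise. The function name `mcl_step` and all parameter values are illustrative and are not taken from the project. A real system on the Unitree Go1 would work with a 3D pose and a LiDAR or camera sensor model.

```python
import math
import random
from typing import List


def mcl_step(particles: List[float], control: float, measurement: float,
             landmark: float, motion_noise: float = 0.1,
             sensor_noise: float = 0.5, rng=random) -> List[float]:
    """One predict / weight / resample cycle of a 1D particle filter."""
    # Predict: apply the commanded displacement plus Gaussian motion noise.
    moved = [p + control + rng.gauss(0.0, motion_noise) for p in particles]

    # Weight: Gaussian likelihood of the measured signed distance to the
    # landmark, given each particle's predicted position.
    weights = []
    for p in moved:
        error = measurement - (landmark - p)
        weights.append(math.exp(-0.5 * (error / sensor_noise) ** 2))

    # Fall back to uniform weights if every likelihood underflowed to zero.
    if sum(weights) == 0.0:
        weights = [1.0] * len(moved)

    # Resample: draw particles with probability proportional to weight.
    return rng.choices(moved, weights=weights, k=len(moved))


# Hypothetical scenario: the robot starts at x = 2.0 and moves 0.5 per step.
rng = random.Random(0)
landmark = 10.0
true_x = 2.0
particles = [rng.uniform(0.0, 10.0) for _ in range(500)]
for _ in range(10):
    true_x += 0.5
    z = landmark - true_x  # noise-free measurement, for simplicity
    particles = mcl_step(particles, 0.5, z, landmark, rng=rng)

estimate = sum(particles) / len(particles)
print(f"true position: {true_x:.2f}, estimate: {estimate:.2f}")
```

An extended Kalman filter would replace the particle set with a single Gaussian estimate, which is cheaper but cannot represent the multi-modal beliefs that arise when the initial pose is unknown.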

Related Work

[1] Wolfram Burgard, Cyrill Stachniss, Kai Arras, and Maren Bennewitz, ‘SLAM: Simultaneous Localization and Mapping’, http://ais.informatik.uni-freiburg.de/teaching/ss12/robotics/slides/12-slam.pdf

[2] V. Barrile, G. Candela, and A. Fotia, ‘Point cloud segmentation using image processing techniques for structural analysis’, The International Archives of the Photogrammetry, Remote Sensing and Spatial Information Sciences, Volume XLII-2/W11, 2019.

[3] Łukasz Sobczak, Katarzyna Filus, Adam Domanski, and Joanna Domanska, ‘LiDAR Point Cloud Generation for SLAM Algorithm Evaluation’, Sensors 2021, 21, 3313. https://doi.org/10.3390/s21103313