Multi-modal, tactile-visual robotic gripping system for industrial applications (MUTAVIA)

Key Technologies in Production-Related Environments 2024 (Schlüsseltechnologien im produktionsnahen Umfeld 2024)

FFG project, 03/2025 to 02/2028

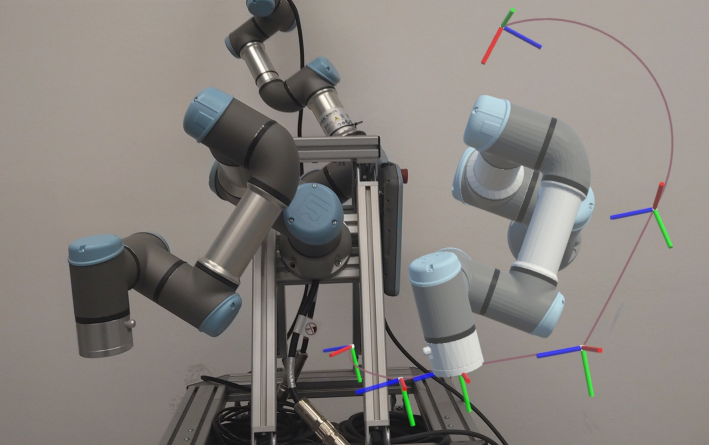

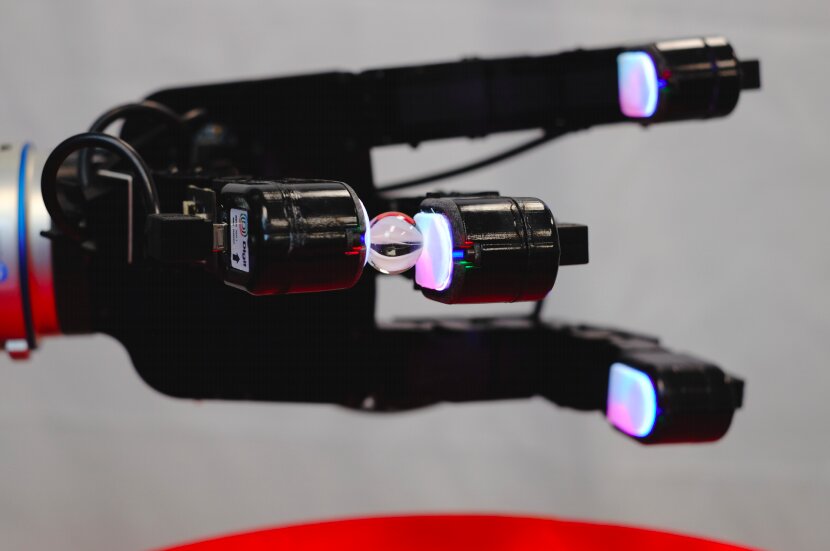


Project Consortium

- Montanuniversität Leoben
  - Research and Innovation Service (administrative coordinator)
  - Chair of Automation and Measurement
  - Chair of Cyber-Physical-Systems

- FreyZein GmbH

- Saubermacher Dienstleistungs AG

Funding Agency

- Österreichische Forschungsförderungsgesellschaft mbH (FFG), the Austrian Research Promotion Agency

Project Summary

Our research is developing intelligent robotic hands that can feel, much like human hands. Using innovative sensors based on sustainable materials such as cellulose and nanocellulose, we are creating solutions that not only sense pressure and temperature but can also detect pollutants and biological hazards.

This technology is being developed specifically for demanding industrial tasks, such as sorting waste or sensitive materials, which until now only humans could handle. By deploying robots in hazardous or strenuous working environments, we protect people and improve working conditions.

With our sustainable, durable, and precise sensors, we are setting new standards in automation. This not only strengthens the domestic economy but also brings innovation and safety to society.

Poster

TBA

Other

TBA