Supervisors: Linus Nwankwo, M.Sc.;

Univ.-Prof. Dr Elmar Rückert

Start date: 5th September 2022

Theoretical difficulty: mid

Practical difficulty: mid

Abstract

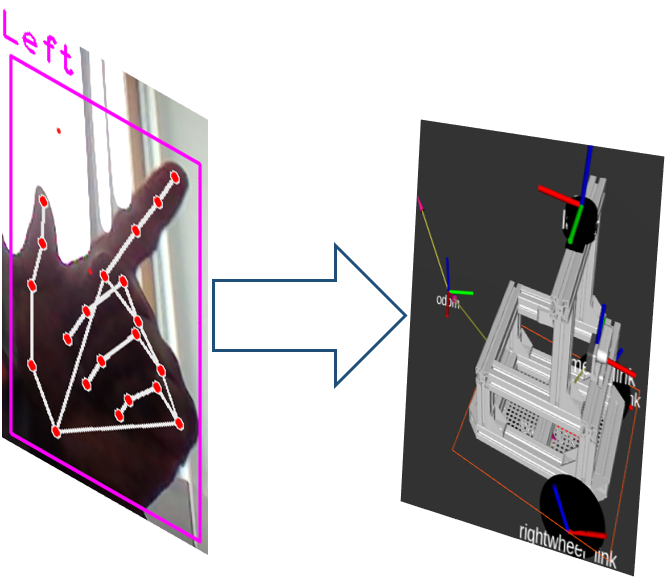

For over 20 years, the simultaneous localisation and mapping (SLAM) approach has been widely used to achieve autonomous navigation. The SLAM problem consists of building a map of the environment while simultaneously estimating the robot's position relative to that map, given noisy sensor observations and a sequence of control inputs. More recently, mapless approaches based on deep reinforcement learning (DRL) have been proposed. In these approaches, the agent (robot) learns a navigation policy from sensor data and control data alone, without a prior map of the task environment. In this thesis, we will evaluate the performance of both approaches in a crowded, dynamic environment using our open-source, differential-drive open-shuttle mobile robot.
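The two problem statements in the abstract can be written compactly as follows. The SLAM expression is the standard probabilistic (full SLAM) formulation, with robot poses $x_{1:t}$, map $m$, sensor observations $z_{1:t}$ and control inputs $u_{1:t}$. The DRL part only sketches a typical mapless setup, and its details are assumptions rather than the final thesis design: the observation $o_t$ is taken to contain the range-sensor readings plus the goal position relative to the robot, the action $a_t = (v_t, \omega_t)$ is a linear and angular velocity command for the differential-drive base, and $r_t$ is a task-specific reward (e.g. progress towards the goal, penalties for collisions) discounted by $\gamma \in [0, 1)$.

$$
p(x_{1:t}, m \mid z_{1:t}, u_{1:t})
$$

$$
a_t = (v_t, \omega_t) \sim \pi_\theta(a \mid o_t), \qquad
\theta^{*} = \arg\max_{\theta} \; \mathbb{E}_{\pi_\theta}\left[ \sum_{t=0}^{T} \gamma^{t} r_t \right]
$$

This makes the contrast under evaluation explicit: the SLAM pipeline estimates an explicit map $m$ that a path planner then uses, whereas the learned policy maps observations directly to velocity commands without one.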

Tentative Work Plan

To achieve our objective, the work will focus on the following tasks:

- Literature research and a general understanding of the field:

  - Mobile robotics and industrial use cases

  - Overview of map-based autonomous navigation (SLAM and path planning)

  - Overview of mapless autonomous navigation with deep reinforcement learning

- Set up and become familiar with the simulation environment:

  - Build the robot model (URDF) for the simulation (optional; the existing model may be used instead)

  - Set up the ROS framework for the simulation (Gazebo, RViz)

  - Recommended programming tools: C++, Python, MATLAB

- Intermediate presentation:

  - Presentation of the results of the literature study

  - Opportunity to ask questions about the theoretical background

  - Detailed planning of the next steps

- Define key performance/quality metrics for evaluation, such as:

  - Time to reach the desired goal

  - Average speed

  - Path smoothness

  - Obstacle avoidance (distance to obstacles)

  - Computational requirements

  - Success rate

- Assessment and execution:

  - Compare the results of the map-based and mapless approaches on the evaluation metrics defined above.

- Validation:

  - Validate both approaches in a real-world scenario using our open-source open-shuttle mobile robot.

- Furthermore, the following optional goal is planned:

  - Develop a hybrid approach combining the map-based and mapless methods.

- M.Sc. thesis writing

- Research paper writing (optional)
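The evaluation metrics listed in the work plan could be computed from logged runs roughly as in the minimal Python sketch below. It rests on several assumptions that are not fixed by the plan: each run is logged as timestamped 2-D positions, obstacles are given as a set of 2-D points (e.g. from a laser scan or the simulator), "path smoothness" is taken as the mean absolute heading change between consecutive path segments, and a run counts as successful if the robot comes within a hypothetical tolerance `goal_tol` of the goal without ever getting closer than `collision_dist` to an obstacle. The names `Pose`, `evaluate_episode` and `success_rate` are illustrative only; computational requirements (e.g. planner cycle time) would be measured separately.

```python
import math
from dataclasses import dataclass
from typing import Sequence, Tuple

Point = Tuple[float, float]


@dataclass
class Pose:
    t: float  # timestamp [s]
    x: float  # position [m]
    y: float  # position [m]


@dataclass
class EpisodeMetrics:
    success: bool
    time_to_goal: float           # [s]; math.inf if the goal was never reached
    path_length: float            # [m]
    average_speed: float          # [m/s] = path length / episode duration
    smoothness: float             # mean |heading change| between segments [rad]; 0 = straight
    min_obstacle_distance: float  # [m]; math.inf if no obstacles were given


def _wrap_angle(a: float) -> float:
    """Wrap an angle to [-pi, pi]."""
    return math.atan2(math.sin(a), math.cos(a))


def evaluate_episode(traj: Sequence[Pose], goal: Point, obstacles: Sequence[Point],
                     goal_tol: float = 0.2, collision_dist: float = 0.3) -> EpisodeMetrics:
    """Compute navigation metrics for one logged run (hypothetical helper)."""
    if len(traj) < 2:
        raise ValueError("trajectory needs at least two poses")

    # Path length and average speed over the whole run.
    segments = list(zip(traj[:-1], traj[1:]))
    lengths = [math.hypot(b.x - a.x, b.y - a.y) for a, b in segments]
    path_length = sum(lengths)
    duration = traj[-1].t - traj[0].t
    average_speed = path_length / duration if duration > 0 else 0.0

    # Smoothness: heading of each non-zero segment, then mean absolute turn angle.
    headings = [math.atan2(b.y - a.y, b.x - a.x)
                for (a, b), seg_len in zip(segments, lengths) if seg_len > 1e-9]
    turns = [abs(_wrap_angle(h1 - h0)) for h0, h1 in zip(headings[:-1], headings[1:])]
    smoothness = sum(turns) / len(turns) if turns else 0.0

    # Closest approach to any obstacle point (brute force, fine for small logs).
    min_obstacle_distance = min(
        (math.hypot(p.x - ox, p.y - oy) for p in traj for ox, oy in obstacles),
        default=math.inf,
    )

    # First time the robot is within goal_tol of the goal.
    time_to_goal = math.inf
    for p in traj:
        if math.hypot(p.x - goal[0], p.y - goal[1]) <= goal_tol:
            time_to_goal = p.t - traj[0].t
            break

    success = time_to_goal < math.inf and min_obstacle_distance > collision_dist
    return EpisodeMetrics(success, time_to_goal, path_length, average_speed,
                          smoothness, min_obstacle_distance)


def success_rate(episodes: Sequence[EpisodeMetrics]) -> float:
    """Fraction of successful runs (0.0 for an empty list)."""
    return sum(e.success for e in episodes) / len(episodes) if episodes else 0.0


# Example: a straight 2 m run in 4 s with one obstacle point 1 m to the side.
run = [Pose(float(t), 0.5 * t, 0.0) for t in range(5)]
m = evaluate_episode(run, goal=(2.0, 0.0), obstacles=[(1.0, 1.0)])
# m.success is True, m.average_speed == 0.5, m.min_obstacle_distance == 1.0
```

Running the same function on logs from both the SLAM-based and the mapless navigation stack would give directly comparable numbers for the assessment step; the thresholds would need to be chosen to match the robot's footprint.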

Related Work

[1] Honghu Xue, Benedikt Hein, Mohamed Bakr, Georg Schildbach, Bengt Abel, Elmar Rueckert, “Using Deep Reinforcement Learning with Automatic Curriculum Learning for Mapless Navigation in Intralogistics”, Applied Sciences (MDPI), Special Issue on Intelligent Robotics, 2022.

[2] Han Hu, Kaicheng Zhang, Aaron Hao Tan, Michael Ruan, Christopher Agia, Goldie Nejat, “Sim-to-Real Pipeline for Deep Reinforcement Learning for Autonomous Robot Navigation in Cluttered Rough Terrain”, IEEE Robotics and Automation Letters, vol. 6, no. 4, October 2021.

[3] Md. A. K. Niloy, Anika Shama, Ripon K. Chakrabortty, Michael J. Ryan, Faisal R. Badal, Z. Tasneem, Md H. Ahamed, S. I. Mo, “Critical Design and Control Issues of Indoor Autonomous Mobile Robots: A Review”, IEEE Access, vol. 9, February 2021.

[4] Ning Wang, Yabiao Wang, Yuming Zhao, Yong Wang, Zhigang Li, “Sim-to-Real: Mapless Navigation for USVs Using Deep Reinforcement Learning”, Journal of Marine Science and Engineering, vol. 10, 895, 2022. https://doi.org/10.3390/jmse10070895

Master Thesis

The final master thesis document can be downloaded here.