B.Sc. Thesis – Gabriel Brinkmann: Simultaneous localization and mapping (SLAM) with a quadrupedal robot in challenging real-world environments

Supervisors: Linus Nwankwo, M.Sc.; Univ.-Prof. Dr Elmar Rückert

Start date: 5th September 2022

Theoretical difficulty: mid

Practical difficulty: mid

Abstract

When observing animals in nature, navigation and walking seem like effortless tasks. However, training robots to achieve the same objectives remains a challenging problem for roboticists and researchers. We aim to have a quadrupedal robot autonomously perform tasks such as navigating traffic, avoiding obstacles, finding optimal routes, and surveying areas that are hazardous to humans. These capabilities are useful in commercial, industrial, and military settings, including self-driving cars, warehouse stacking robots, container transport vehicles in ports, and load-bearing companions for military operations.

For over 20 years, the SLAM approach has been widely used to achieve autonomous navigation, obstacle avoidance, and path planning. SLAM is a central problem in robotics, in which a robot navigates through an unknown environment while simultaneously building a map of it. The problem is challenging because the robot must estimate its pose (position and orientation) relative to the environment and, at the same time, estimate the locations of landmarks in that environment.
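In probabilistic terms, this joint estimation is commonly written as a posterior over the robot trajectory and the map. The notation here is an assumption introduced for illustration and is not part of the original description: $x_{1:t}$ are the poses, $m$ is the map (e.g. the landmark locations), $z_{1:t}$ are the sensor observations, and $u_{1:t}$ are the control or odometry inputs.

$$
p(x_{1:t},\, m \mid z_{1:t},\, u_{1:t})
$$

Online variants estimate only the current pose together with the map, which corresponds to marginalizing out the past poses:

$$
p(x_t,\, m \mid z_{1:t},\, u_{1:t}) = \int \cdots \int p(x_{1:t},\, m \mid z_{1:t},\, u_{1:t}) \, dx_1 \cdots dx_{t-1}
$$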

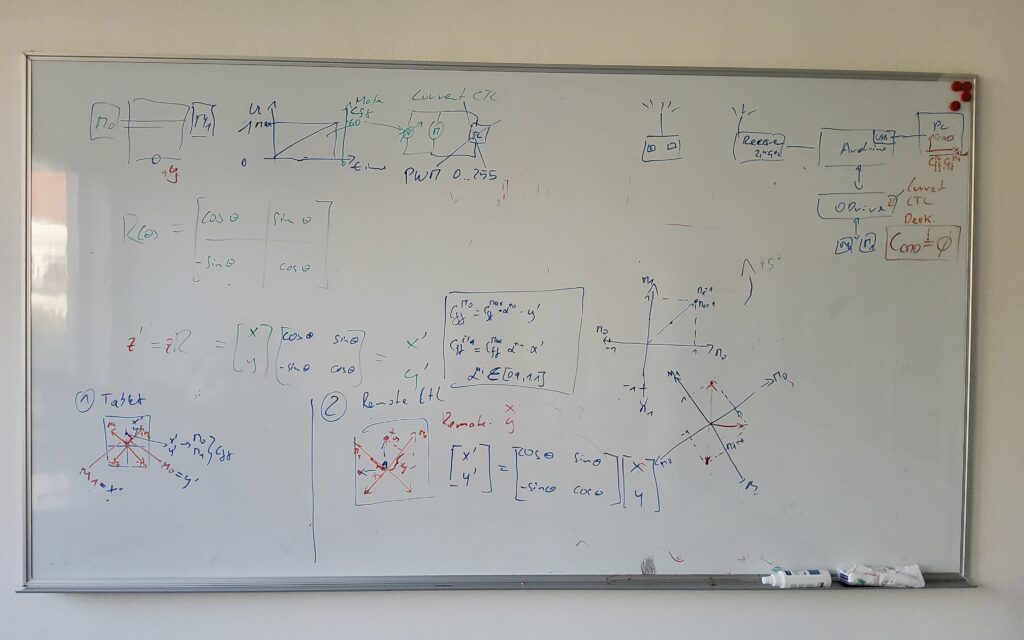

Some of the most common challenges in SLAM are the accumulation of localization errors over time, inaccurate pose estimation on a map, and loop closure. These problems have been partly overcome by using pose graphs to correct accumulated localization errors, and extended Kalman filters and Monte Carlo localization for pose estimation.
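To illustrate how an extended Kalman filter handles pose estimation, the following is a minimal sketch under simplifying assumptions that are ours, not part of the thesis description: the robot state is a single position `x` along a corridor, and the sensor measures the range to one landmark at a known location. The function and parameter names (`ekf_predict`, `ekf_range_update`, `landmark_x`, `landmark_h`) are hypothetical. The range model is nonlinear in `x`, so the filter linearizes it around the current estimate.

```python
import math


def ekf_predict(x, P, u, Q):
    """Prediction step for the assumed motion model x' = x + u.

    x, P: prior position estimate and its variance
    u:    commanded displacement (e.g. from odometry)
    Q:    process noise variance
    """
    return x + u, P + Q


def ekf_range_update(x, P, z, landmark_x, landmark_h, R):
    """Measurement update for a range reading z to a known landmark.

    The landmark sits at position landmark_x along the corridor and at a
    perpendicular offset landmark_h, so the measurement model is
    h(x) = sqrt((landmark_x - x)^2 + landmark_h^2), which is nonlinear in x.
    R is the measurement noise variance.
    """
    dx = landmark_x - x
    z_pred = math.hypot(dx, landmark_h)  # predicted range h(x)
    H = -dx / z_pred                     # Jacobian dh/dx at the estimate
    S = H * P * H + R                    # innovation variance
    K = P * H / S                        # Kalman gain
    x_new = x + K * (z - z_pred)         # corrected position
    P_new = (1.0 - K * H) * P            # corrected variance
    return x_new, P_new


if __name__ == "__main__":
    # The robot believes it is at x = 2.0 (variance 1.0) but is really at 2.5.
    true_x = 2.5
    z = math.hypot(10.0 - true_x, 1.0)   # noise-free range, for illustration
    x_est, P_est = ekf_range_update(2.0, 1.0, z, 10.0, 1.0, 0.01)
    print(x_est, P_est)
```

In this toy setting a single accurate range reading pulls the estimate toward the true position and shrinks the variance. A real system would estimate the full pose (position and orientation) with matrix-valued covariances and noisy measurements.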

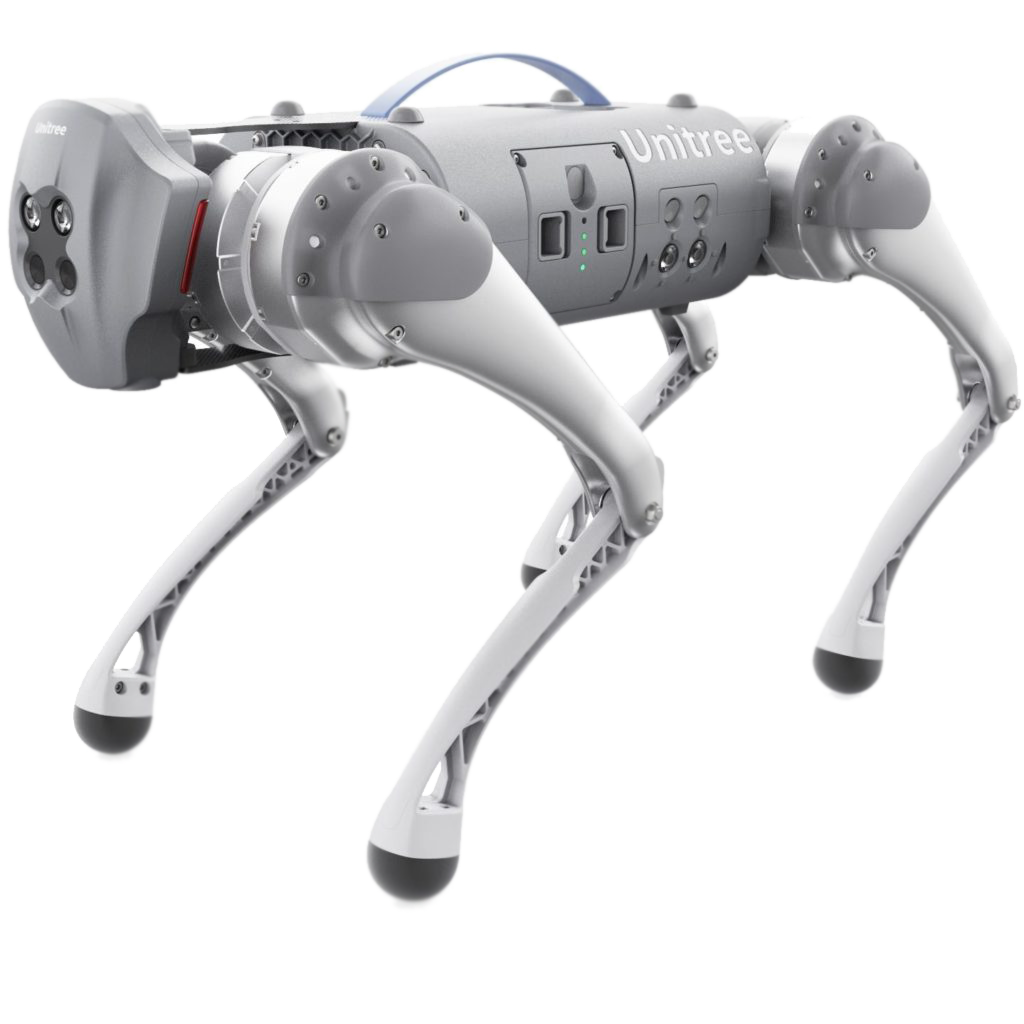

Quadrupedal robots are well suited to challenging environments with non-uniform surface conditions, e.g. off-road terrain or warehouses where stairs or obstacles have to be overcome. However, their non-uniform, dynamic movement poses an additional difficulty for SLAM.

In this thesis, we propose to study the concept of SLAM and its associated algorithms and to apply them to a quadrupedal robot (Unitree Go1). Our goal is to give the robot tasks and commands that it then has to execute autonomously, for example navigating rooms, avoiding slow-moving objects, or following an object (person).

Tentative Work Plan

To achieve our objective, we will focus on the following concrete tasks:

-

Study the concept of SLAM as well as its application in quadrupedal robots.

- Setup and familiarize with the simulation environment:

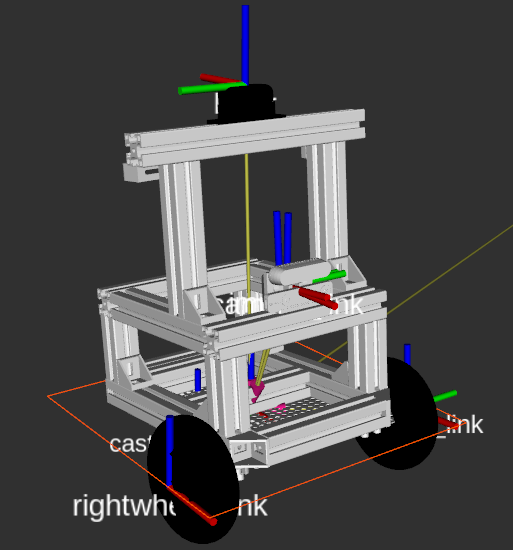

- Build the robot model (URDF) for the simulation (optional if you wish to use the existing one)

- Setup the ROS framework for the simulation (Gazebo, Rviz)

- Recommended programming tools: C++, Python, Matlab

- Develop a novel SLAM system for a quadrupedal robot to navigate and map challenging real-world environments:

-

2D/3D mapping in complex indoor/outdoor environments

-

Localization using either Monte Carlo or extended Kalman filter

-

Establish a path-planning algorithm

-

- Intermediate presentation:

- Presenting the results of the literature study

- Possibility to ask questions about the theoretical background

- Detailed planning of the next steps

-

Implementation:

-

Simulate the achieved results in a virtual environment (Gazebo, Rviz, etc.)

-

Real-time testing on Unitree Go1 quadrupedal robot.

-

- Evaluate the performance in various challenging real-world environments, including outdoor terrains, urban environments, and indoor environments with complex structures.

- B.Sc. thesis writing.

- Research paper writing (optional)
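The work plan above leaves the choice of path-planning algorithm open. As one possible starting point, the following is a minimal sketch of A* search on a 2D occupancy grid. A* is our illustrative choice and not a method prescribed by this thesis description. The grid encoding (0 = free, 1 = occupied), the 4-connected unit-cost moves, and the function name `astar` are likewise assumptions.

```python
import heapq


def astar(grid, start, goal):
    """Shortest 4-connected path on an occupancy grid (0 = free, 1 = occupied).

    Returns a list of (row, col) cells from start to goal, or None if the
    goal cannot be reached.
    """
    rows, cols = len(grid), len(grid[0])

    def heuristic(cell):
        # Manhattan distance never overestimates for 4-connected unit moves.
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    open_heap = [(heuristic(start), 0, start)]  # entries: (f = g + h, g, cell)
    came_from = {start: None}
    cost_so_far = {start: 0}

    while open_heap:
        _, cost, current = heapq.heappop(open_heap)
        if current == goal:
            # Walk the parent links back to the start, then reverse.
            path = []
            while current is not None:
                path.append(current)
                current = came_from[current]
            return path[::-1]
        if cost > cost_so_far[current]:
            continue  # stale heap entry; a cheaper route was found already
        r, c = current
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == 0:
                new_cost = cost + 1
                if new_cost < cost_so_far.get((nr, nc), float("inf")):
                    cost_so_far[(nr, nc)] = new_cost
                    came_from[(nr, nc)] = current
                    priority = new_cost + heuristic((nr, nc))
                    heapq.heappush(open_heap, (priority, new_cost, (nr, nc)))
    return None


if __name__ == "__main__":
    grid = [
        [0, 0, 0, 0],
        [1, 1, 1, 0],  # a wall with a single gap on the right
        [0, 0, 0, 0],
    ]
    print(astar(grid, (0, 0), (2, 0)))
```

On a real robot, the grid would come from the map built by the SLAM system, and the planner would additionally need to account for the robot's footprint and for obstacles that move.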

Related Work

[1] Wolfram Burgard, Cyrill Stachniss, Kai Arras, and Maren Bennewitz, ‘SLAM: Simultaneous Localization and Mapping’, http://ais.informatik.uni-freiburg.de/teaching/ss12/robotics/slides/12-slam.pdf

[2] V. Barrile, G. Candela, and A. Fotia, ‘Point cloud segmentation using image processing techniques for structural analysis’, The International Archives of the Photogrammetry, Remote Sensing and Spatial Information Sciences, Volume XLII-2/W11, 2019.

[3] Łukasz Sobczak, Katarzyna Filus, Adam Domanski, and Joanna Domanska, ‘LiDAR Point Cloud Generation for SLAM Algorithm Evaluation’, Sensors 2021, 21, 3313. https://doi.org/10.3390/s21103313

Find more about the thesis at this address:

https://cloud.cps.unileoben.ac.at/index.php/s/RybJssqpq68KDYF