B.Sc. Thesis of Mr. Maximilian Pettinger: “6D Object Pose Estimation Using Classical and Deep Learning Approaches”

Supervisors:

- Univ.-Prof. Dr Elmar Rückert

- Mr. Fotios Lygerakis

Topic

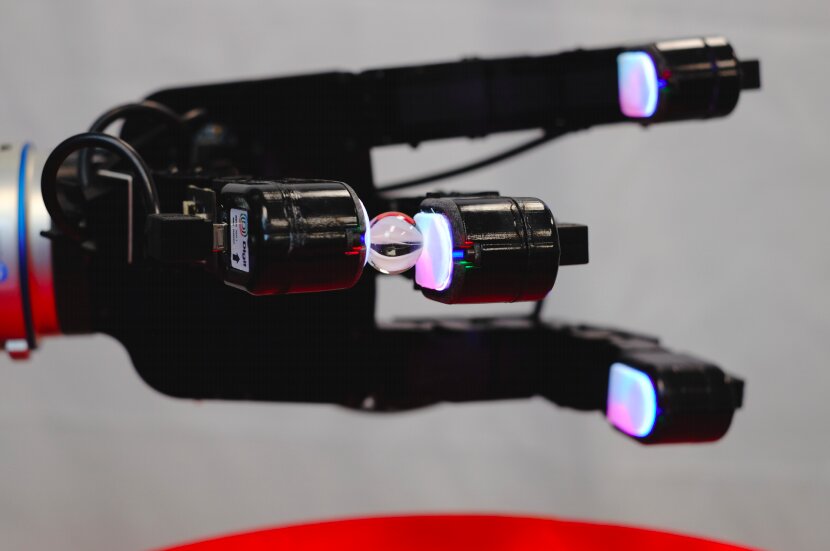

The estimation of 6D object poses (the 3D position and 3D orientation of an object relative to the camera) plays a critical role in applications such as robotic manipulation and augmented reality, and more broadly in computer vision. This thesis will investigate two distinct approaches to 6D object pose estimation: classical computer vision techniques and modern deep learning methods. The classical approach will use OpenCV and ArUco markers for pose estimation, emphasizing foundational knowledge and traditional methodologies. Camera calibration and marker-based detection will be implemented and tested under varying conditions to assess their robustness and accuracy.
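At its core, marker-based pose estimation recovers the rotation and translation of a flat square marker from its four detected image corners, given the camera intrinsics obtained from calibration. The sketch below is a hedged illustration and not the thesis implementation. It uses NumPy only, estimating a homography by the direct linear transform (DLT) and decomposing it into a pose. In practice, OpenCV's ArUco module would detect the corners and `cv2.solvePnP` would solve for the pose. The camera matrix `K`, the 5 cm marker size, the corner ordering, the ground-truth pose and the helper names are all made-up assumptions. Lens distortion and detection noise are not modelled.

```python
import numpy as np


def axis_angle_to_matrix(rvec):
    """Rodrigues' formula: rotation vector (axis * angle in rad) -> 3x3 rotation matrix."""
    rvec = np.asarray(rvec, dtype=float)
    theta = np.linalg.norm(rvec)
    if theta < 1e-12:
        return np.eye(3)
    k = rvec / theta
    k_cross = np.array([[0.0, -k[2], k[1]],
                        [k[2], 0.0, -k[0]],
                        [-k[1], k[0], 0.0]])
    return np.eye(3) + np.sin(theta) * k_cross + (1.0 - np.cos(theta)) * k_cross @ k_cross


def homography_dlt(src_xy, dst_uv):
    """Direct linear transform: 3x3 H with dst ~ H @ [x, y, 1] from >= 4 point pairs."""
    rows = []
    for (x, y), (u, v) in zip(src_xy, dst_uv):
        rows.append([-x, -y, -1.0, 0.0, 0.0, 0.0, u * x, u * y, u])
        rows.append([0.0, 0.0, 0.0, -x, -y, -1.0, v * x, v * y, v])
    # The solution is the right singular vector of the smallest singular value.
    _, _, vt = np.linalg.svd(np.asarray(rows))
    H = vt[-1].reshape(3, 3)
    return H / H[2, 2]


def marker_pose_from_corners(corners_px, marker_len, K):
    """Rotation R and translation t of a square marker (marker plane z = 0) in the camera frame."""
    h = marker_len / 2.0
    # Hypothetical corner order: top-left, top-right, bottom-right, bottom-left.
    obj_xy = np.array([[-h, h], [h, h], [h, -h], [-h, -h]])
    H = homography_dlt(obj_xy, corners_px)
    A = np.linalg.inv(K) @ H               # proportional to [r1 r2 t]
    scale = 1.0 / np.linalg.norm(A[:, 0])  # r1 must have unit length
    if A[2, 2] < 0:                        # keep the marker in front of the camera (t_z > 0)
        scale = -scale
    r1, r2, t = scale * A[:, 0], scale * A[:, 1], scale * A[:, 2]
    R_approx = np.column_stack([r1, r2, np.cross(r1, r2)])
    # Project onto the nearest rotation matrix (matters once the corners are noisy).
    U, _, Vt = np.linalg.svd(R_approx)
    return U @ Vt, t


# Synthetic check with made-up intrinsics and a made-up ground-truth pose.
K = np.array([[800.0, 0.0, 320.0],
              [0.0, 800.0, 240.0],
              [0.0, 0.0, 1.0]])
marker_len = 0.05                          # assumed 5 cm marker side length
R_true = axis_angle_to_matrix([0.3, -0.2, 0.1])
t_true = np.array([0.02, -0.01, 0.40])

h = marker_len / 2.0
corners_3d = np.array([[-h, h, 0.0], [h, h, 0.0], [h, -h, 0.0], [-h, -h, 0.0]])
cam = corners_3d @ R_true.T + t_true       # marker corners in the camera frame
proj = cam @ K.T
corners_px = proj[:, :2] / proj[:, 2:3]    # ideal pinhole projection, no distortion or noise

R_est, t_est = marker_pose_from_corners(corners_px, marker_len, K)
```

With noise-free synthetic corners, `R_est` and `t_est` reproduce the assumed ground truth. With real detections, the bare DLT is sensitive to pixel noise. Normalizing the point coordinates before the DLT and refining the pose by minimizing the reprojection error would be the usual next steps.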

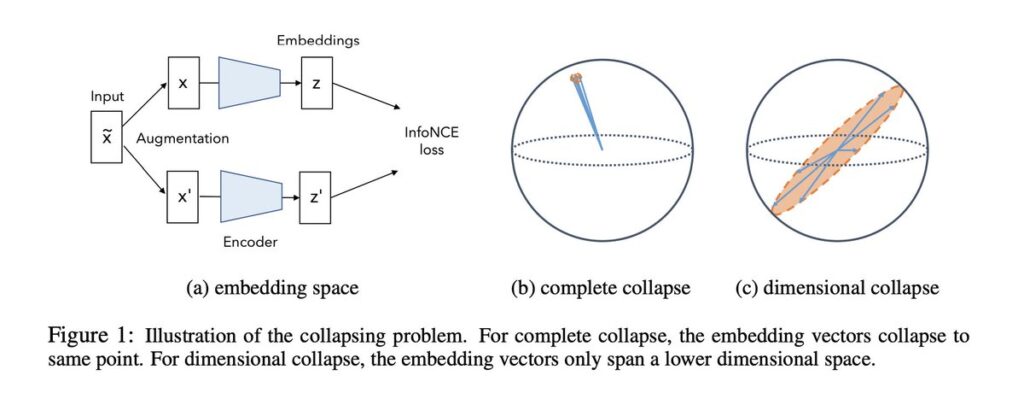

The second approach will explore state-of-the-art deep learning models for markerless 6D pose estimation. Following a comprehensive literature review, a state-of-the-art neural network model will be selected, implemented, and trained or fine-tuned on publicly available datasets, potentially supplemented by new datasets collected during the study. The model's performance in estimating the poses of complex objects will then be evaluated and compared with the results of the classical approach.

The final analysis will highlight the strengths and limitations of both methods with respect to accuracy, computational complexity, and generalizability. This comparison aims to provide guidance on selecting suitable techniques for different practical scenarios and to advance the understanding of the challenges in object pose estimation.
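To put numbers on "accuracy" when both pipelines report a pose for the same object, each estimate can be compared against a ground-truth pose. The text does not fix any metric. The geodesic rotation error (in degrees) and Euclidean translation error below are an assumed, common choice, sketched in NumPy with hypothetical function names and made-up example poses.

```python
import numpy as np


def rotation_error_deg(R_est, R_gt):
    """Angle (degrees) of the relative rotation R_est^T @ R_gt, i.e. the geodesic distance."""
    cos_angle = (np.trace(R_est.T @ R_gt) - 1.0) / 2.0
    # Clip to guard against values slightly outside [-1, 1] caused by round-off.
    return float(np.degrees(np.arccos(np.clip(cos_angle, -1.0, 1.0))))


def translation_error(t_est, t_gt):
    """Euclidean distance between translations, in the same unit as the inputs."""
    return float(np.linalg.norm(np.asarray(t_est, dtype=float) - np.asarray(t_gt, dtype=float)))


# Made-up example: the estimate is rotated 10 degrees about the camera z-axis.
theta = np.radians(10.0)
R_gt = np.eye(3)
R_est = np.array([[np.cos(theta), -np.sin(theta), 0.0],
                  [np.sin(theta), np.cos(theta), 0.0],
                  [0.0, 0.0, 1.0]])
rot_err = rotation_error_deg(R_est, R_gt)                               # about 10 degrees
trans_err = translation_error([0.10, 0.00, 0.50], [0.10, 0.03, 0.54])  # about 0.05 (metres here)
```

Reporting rotation and translation errors separately keeps the two failure modes distinguishable. Object-size-aware metrics are an alternative when objects of very different scales are compared.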

Tasks

- Literature research

- Implementation of a classical 6D object pose estimation pipeline

- Implementation of a state-of-the-art deep-learning-based 6D object pose estimation pipeline

- Testing in practical applications

- Comparison of the two approaches

- Writing the B.Sc. thesis

References

- Implementation, testing and comparison of conventional and state-of-the-art computer vision based 6D Pose Estimations - Establishing the basis for future research

Bachelor Thesis

The final bachelor thesis document can be downloaded here.