M.Sc. Thesis, Real-Time 3D Reconstruction and Rendering of Vision-Language Embeddings

Supervisors: Christian Rauch; Univ.-Prof. Dr Elmar Rückert

Start date: April 2025

Theoretical difficulty: mid

Practical difficulty: low

Abstract

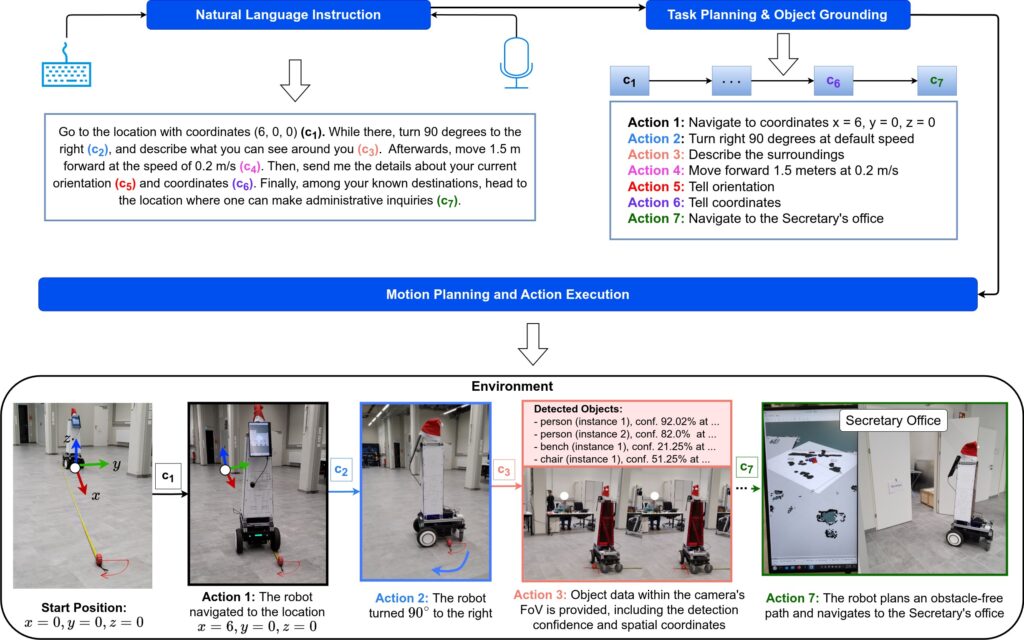

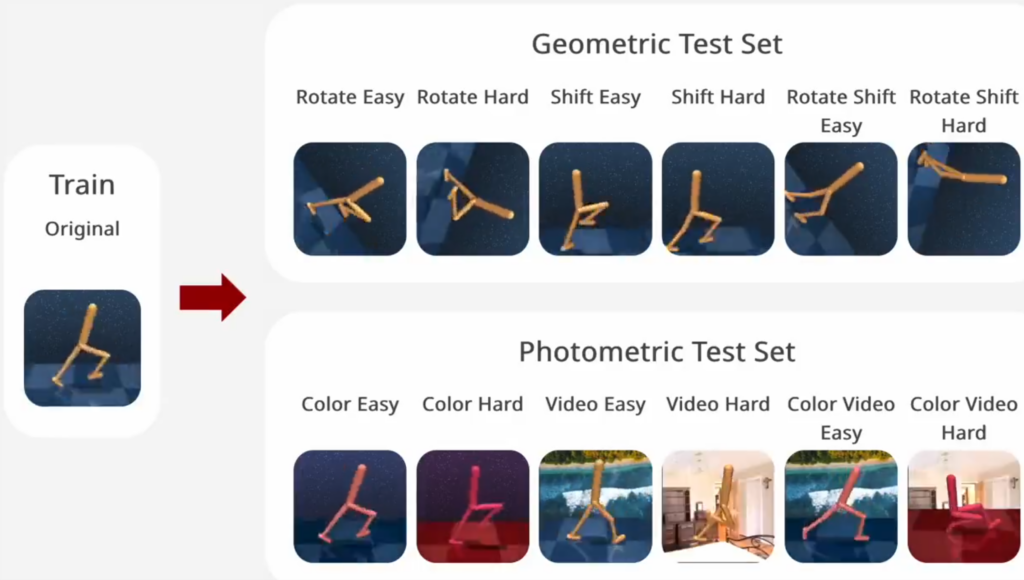

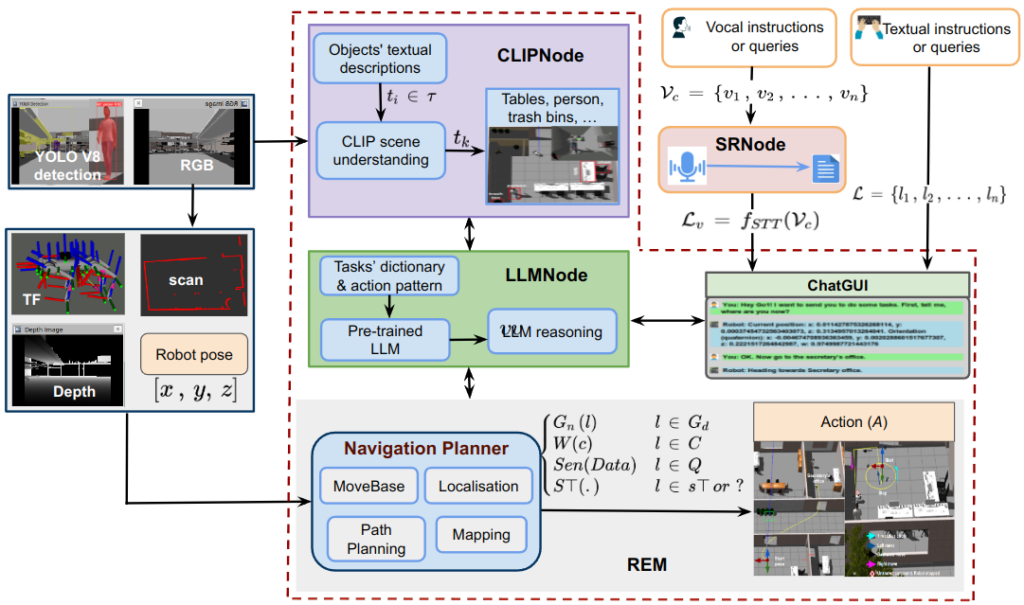

Vision-Language Models (VLMs) are novel methods that can relate image information and text via a common embedding space. However, because these methods are trained on 2D image data, they can only operate on the current viewpoint of a camera.
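The idea of a common embedding space can be illustrated with a minimal sketch: an image embedding is compared against several text embeddings by cosine similarity, and the best-matching text is selected. The 4-dimensional vectors and labels below are made-up stand-ins for real VLM outputs, and the helper name `cosine_similarity` is an assumption for illustration only.

```python
import numpy as np


def cosine_similarity(a: np.ndarray, b: np.ndarray) -> np.ndarray:
    """Cosine similarity between each row of `a` and each row of `b`."""
    a = a / np.linalg.norm(a, axis=-1, keepdims=True)
    b = b / np.linalg.norm(b, axis=-1, keepdims=True)
    return a @ b.T


# Toy embeddings (assumed values); a real VLM would produce these
# from an image encoder and a text encoder respectively.
image_embedding = np.array([[0.9, 0.1, 0.0, 0.1]])
text_embeddings = np.array([
    [1.0, 0.0, 0.0, 0.0],  # "a chair"
    [0.0, 1.0, 0.0, 0.0],  # "a table"
    [0.0, 0.0, 1.0, 0.0],  # "a plant"
])
labels = ["a chair", "a table", "a plant"]

# One similarity score per text prompt; the highest score is the best match.
scores = cosine_similarity(image_embedding, text_embeddings)[0]
best_label = labels[int(np.argmax(scores))]
```

Because both modalities live in the same space, the same comparison works for any text prompt without retraining, which is what makes open-vocabulary queries possible.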

In contrast, neural rendering techniques such as NeRF [1] or 3D Gaussian Splatting [2] compress multiple image views into a highly detailed representation, which is useful for novel view synthesis in SLAM approaches.

Related work such as LERF [3] and LangSplat [4] combines these two approaches to create a compressed 3D representation of vision-language embeddings. However, these methods are far from real-time capable and usually do not provide a representation in metric space, which makes them unsuitable for robotic applications.
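To make the notion of a metric representation concrete, the following sketch back-projects a depth image into 3D points in metres using the standard pinhole camera model. It assumes a depth image already given in metres and known intrinsics; the function name `backproject_depth` and all numeric values are hypothetical and not taken from any of the cited methods.

```python
import numpy as np


def backproject_depth(depth_m: np.ndarray, fx: float, fy: float,
                      cx: float, cy: float) -> np.ndarray:
    """Back-project a depth image (metres) to an (H, W, 3) array of
    points in the camera frame, using the pinhole model."""
    h, w = depth_m.shape
    # u holds the column index and v the row index of every pixel.
    u, v = np.meshgrid(np.arange(w), np.arange(h))
    x = (u - cx) * depth_m / fx
    y = (v - cy) * depth_m / fy
    return np.stack([x, y, depth_m], axis=-1)


# Toy example: a flat wall 2 m in front of a 6x4 pixel camera (assumed values).
depth = np.full((4, 6), 2.0)
points = backproject_depth(depth, fx=100.0, fy=100.0, cx=3.0, cy=2.0)
```

Points obtained this way carry real-world scale, so distances in the map can be used directly by a robot, which is not the case for reconstructions with an arbitrary scale.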

Tentative Work Plan

To achieve the objectives, the thesis will focus on the following concrete tasks:

- Background and Setup:

  - identify sensors for (RGB-D) data collection

  - review related work in the domain of VLMs and neural rendering methods

  - identify useful reference implementations

- Real-Time neural rendering approach:

  - identify or implement a method for real-time localisation and mapping that can operate on a live sensor data stream (e.g. from a camera or ROS bag)

- Integrate Vision-Language embeddings into the map:

  - based on related work, develop a method to integrate embeddings into the live reconstruction

  - provide a way to introspect or query this representation via text in real-time

- PoC implementation:

  - implement a ROS 2 wrapper around the proposed method that can operate on a live stream of colour and depth images from a robot-mounted camera

  - collect evaluation sequences on a mobile robot platform (e.g. Uniguide) in varying environments; as a fallback, use the camera in a hand-held setting

- Evaluation:

  - compare the reconstruction of the embeddings against state-of-the-art approaches

  - evaluate the real-time capabilities of the method against baseline approaches
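As a rough illustration of the embedding integration and text query tasks above, the sketch below fuses per-point embeddings into a sparse voxel grid by keeping a running mean per voxel, and then returns the voxels that match a text embedding. This is only one simple possibility under stated assumptions, not the method the thesis is expected to develop: the class `EmbeddingVoxelMap`, the voxel size, the threshold, and the toy 3-dimensional embeddings are all hypothetical.

```python
import numpy as np


class EmbeddingVoxelMap:
    """Hypothetical sparse voxel map storing a mean embedding per voxel."""

    def __init__(self, voxel_size_m: float, dim: int):
        self.voxel_size_m = voxel_size_m
        self.dim = dim
        self.sums = {}    # voxel index -> summed embedding
        self.counts = {}  # voxel index -> number of fused observations

    def integrate(self, points_m: np.ndarray, embeddings: np.ndarray) -> None:
        """Fuse N metric points (N, 3) with their embeddings (N, dim)."""
        keys = np.floor(points_m / self.voxel_size_m).astype(int)
        for key, emb in zip(map(tuple, keys), embeddings):
            if key not in self.sums:
                self.sums[key] = np.zeros(self.dim)
                self.counts[key] = 0
            self.sums[key] += emb
            self.counts[key] += 1

    def query(self, text_embedding: np.ndarray, threshold: float):
        """Return (centre in metres, score) for voxels whose mean embedding
        has cosine similarity >= threshold with the text embedding.
        Assumes no voxel has an all-zero mean embedding."""
        q = text_embedding / np.linalg.norm(text_embedding)
        hits = []
        for key, total in self.sums.items():
            mean = total / self.counts[key]
            score = float(mean @ q / np.linalg.norm(mean))
            if score >= threshold:
                centre = (np.array(key) + 0.5) * self.voxel_size_m
                hits.append((centre, score))
        return hits


# Toy data (assumed values): two nearby points share a voxel, one lies elsewhere.
voxel_map = EmbeddingVoxelMap(voxel_size_m=0.1, dim=3)
points = np.array([[0.02, 0.03, 1.01], [0.04, 0.01, 1.05], [0.52, 0.03, 1.01]])
embeddings = np.array([[1.0, 0.0, 0.0], [0.8, 0.2, 0.0], [0.0, 1.0, 0.0]])
voxel_map.integrate(points, embeddings)
hits = voxel_map.query(np.array([1.0, 0.0, 0.0]), threshold=0.9)
```

Averaging per voxel keeps the update cost proportional to the number of new points, which matters for live operation; a neural rendering backend as in LERF [3] or LangSplat [4] would replace the voxel grid with learned per-primitive features.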

References

[1] Ben Mildenhall, Pratul P. Srinivasan, Matthew Tancik, Jonathan T. Barron, Ravi Ramamoorthi, and Ren Ng. 2021. NeRF: representing scenes as neural radiance fields for view synthesis. Commun. ACM 65, 1 (January 2022), 99–106. https://doi.org/10.1145/3503250

[2] Bernhard Kerbl, Georgios Kopanas, Thomas Leimkuehler, and George Drettakis. 2023. 3D Gaussian Splatting for Real-Time Radiance Field Rendering. ACM Trans. Graph. 42, 4, Article 139 (August 2023), 14 pages. https://doi.org/10.1145/3592433

[3] J. Kerr, C. M. Kim, K. Goldberg, A. Kanazawa, and M. Tancik, “LERF: Language Embedded Radiance Fields,” in Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), October 2023, pp. 19729–19739.

[4] M. Qin, W. Li, J. Zhou, H. Wang, and H. Pfister, “LangSplat: 3D Language Gaussian Splatting,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), June 2024, pp. 20051–20060.