2025


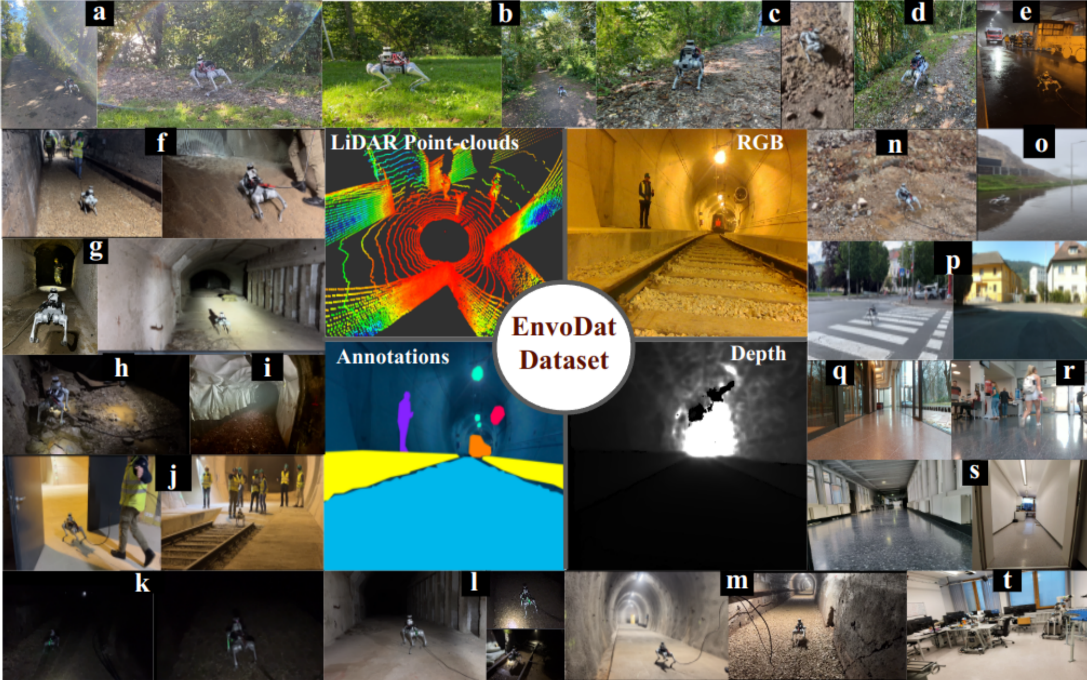

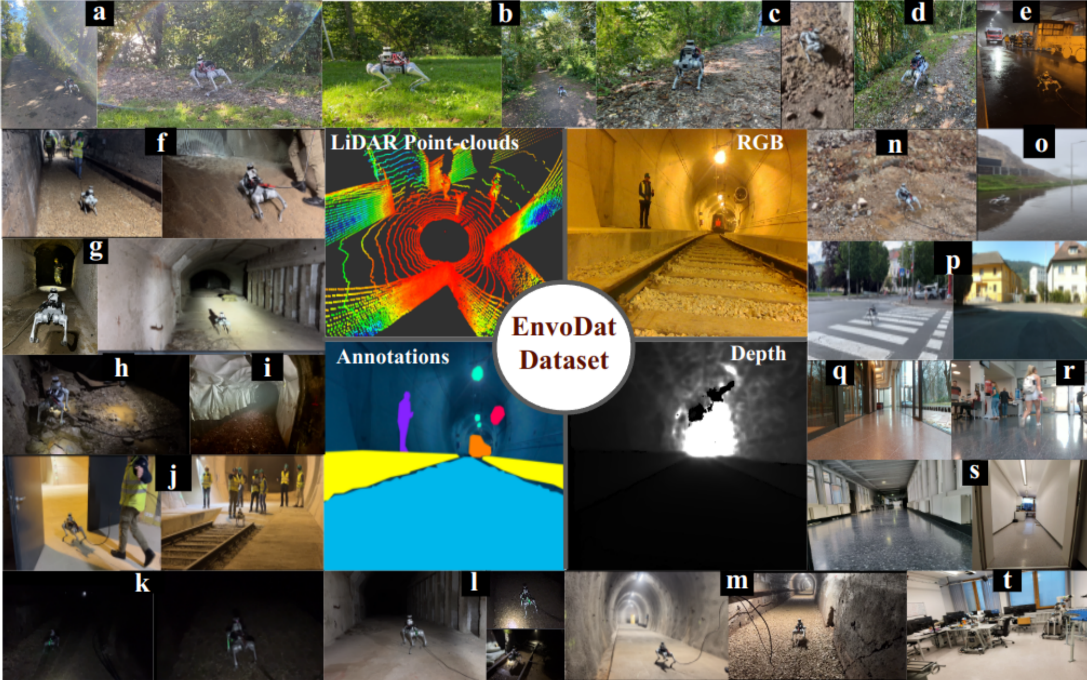

Nwankwo, Linus; Ellensohn, Bjoern; Dave, Vedant; Hofer, Peter; Forstner, Jan; Villneuve, Marlene; Galler, Robert; Rueckert, Elmar. EnvoDat: A Large-Scale Multisensory Dataset for Robotic Spatial Awareness and Semantic Reasoning in Heterogeneous Environments. Proceedings Article. In: IEEE International Conference on Robotics and Automation (ICRA 2025), 2025.

@inproceedings{Nwankwo2025,

title = {EnvoDat: A Large-Scale Multisensory Dataset for Robotic Spatial Awareness and Semantic Reasoning in Heterogeneous Environments},

author = {Linus Nwankwo and Bjoern Ellensohn and Vedant Dave and Peter Hofer and Jan Forstner and Marlene Villneuve and Robert Galler and Elmar Rueckert},

url = {https://cloud.cps.unileoben.ac.at/index.php/s/MawgtYSbTBoNBZo},

year = {2025},

date = {2025-01-27},

urldate = {2025-01-27},

booktitle = {IEEE International Conference on Robotics and Automation (ICRA 2025)},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}


2024


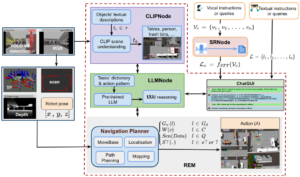

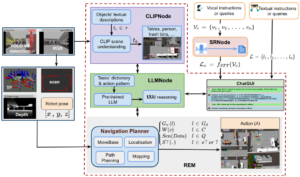

Nwankwo, Linus; Rueckert, Elmar. Multimodal Human-Autonomous Agents Interaction Using Pre-Trained Language and Visual Foundation Models. Conference. In: Workshop of the 2024 ACM/IEEE International Conference on Human-Robot Interaction (HRI ’24 Workshop), March 11–14, 2024, Boulder, CO, USA. ACM, New York, NY, USA, 2024.

@conference{Nwankwo2024MultimodalHA,

title = {Multimodal Human-Autonomous Agents Interaction Using Pre-Trained Language and Visual Foundation Models},

author = {Linus Nwankwo and Elmar Rueckert},

url = {https://human-llm-interaction.github.io/workshop/hri24/papers/hllmi24_paper_5.pdf},

year = {2024},

date = {2024-03-11},

urldate = {2024-03-11},

booktitle = {Workshop of the 2024 ACM/IEEE International Conference on Human-Robot Interaction (HRI ’24 Workshop), March 11–14, 2024, Boulder, CO, USA},

publisher = {ACM},

address = {New York, NY, USA},

abstract = {In this paper, we extended the method proposed in [17] to enable humans to interact naturally with autonomous agents through vocal and textual conversations. Our extended method exploits the inherent capabilities of pre-trained large language models (LLMs), multimodal visual language models (VLMs), and speech recognition (SR) models to decode high-level natural language conversations and the semantic understanding of the robot's task environment, and to abstract them into the robot's actionable commands or queries. We quantitatively evaluated our framework's understanding of natural vocal conversations with participants from different racial backgrounds and with different English accents. The participants interacted with the robot using both vocal and textual instructional commands. Based on the logged interaction data, our framework achieved 87.55% vocal-command decoding accuracy, 86.27% command execution success, and an average latency of 0.89 seconds from receiving a participant's vocal chat command to initiating the robot’s actual physical action. Video demonstrations of this paper are available at https://linusnep.github.io/MTCC-IRoNL/},

keywords = {},

pubstate = {published},

tppubtype = {conference}

}


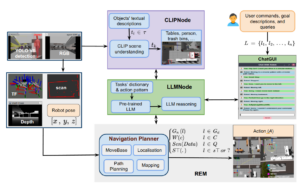

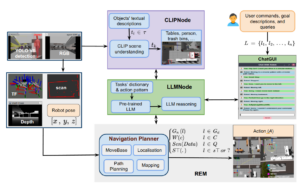

Nwankwo, Linus; Rueckert, Elmar. The Conversation is the Command: Interacting with Real-World Autonomous Robots Through Natural Language. Proceedings Article. In: HRI '24: Companion of the 2024 ACM/IEEE International Conference on Human-Robot Interaction, pp. 808–812, ACM/IEEE, Association for Computing Machinery, New York, NY, USA, 2024, ISBN: 9798400703232 (published as late-breaking results; supplementary video: https://cloud.cps.unileoben.ac.at/index.php/s/fRE9XMosWDtJ339).

@inproceedings{Nwankwo2024,

title = {The Conversation is the Command: Interacting with Real-World Autonomous Robots Through Natural Language},

author = {Linus Nwankwo and Elmar Rueckert},

url = {https://doi.org/10.1145/3610978.3640723

https://cloud.cps.unileoben.ac.at/index.php/s/YzJdHWDt9ZdqsZs},

doi = {10.1145/3610978.3640723},

isbn = {9798400703232},

year = {2024},

date = {2024-01-16},

urldate = {2024-01-16},

booktitle = {HRI '24: Companion of the 2024 ACM/IEEE International Conference on Human-Robot Interaction},

pages = {808–812},

publisher = {Association for Computing Machinery},

address = {New York, NY, USA},

organization = {ACM/IEEE},

series = {HRI '24},

abstract = {In recent years, autonomous agents have become increasingly common in real-world environments such as our homes, offices, and public spaces. However, natural human-robot interaction remains a key challenge. In this paper, we introduce an approach that synergistically exploits the capabilities of large language models (LLMs) and multimodal vision-language models (VLMs) to enable humans to interact naturally with autonomous robots through conversational dialogue. We leveraged the LLMs to decode high-level natural language instructions from humans and abstract them into precise, actionable robot commands or queries. Further, we used the VLMs to provide a visual and semantic understanding of the robot's task environment. Our results, with 99.13% command recognition accuracy and 97.96% command execution success, show that our approach can enhance human-robot interaction in real-world applications. Video demonstrations of this paper are available at https://osf.io/wzyf6, and the code is available in our GitHub repository.},

howpublished = {ACM/IEEE International Conference on Human-Robot Interaction (HRI ’24 Companion)},

key = {ChatGPT, LLMs, ROS, VLMs, autonomous robots, human-robot interaction, natural language interaction},

note = {Published as late-breaking results. Supplementary video: https://cloud.cps.unileoben.ac.at/index.php/s/fRE9XMosWDtJ339},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}


2023


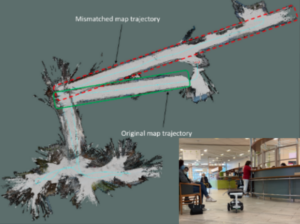

Nwankwo, Linus; Rueckert, Elmar Understanding why SLAM algorithms fail in modern indoor environments Proceedings Article In: International Conference on Robotics in Alpe-Adria-Danube Region (RAAD). , pp. 186 - 194, Cham: Springer Nature Switzerland., 2023. @inproceedings{Nwankwo2023,

title = {Understanding why SLAM algorithms fail in modern indoor environments},

author = {Linus Nwankwo and Elmar Rueckert},

url = {https://cloud.cps.unileoben.ac.at/index.php/s/KdZ2E2np5QEnYfL

},

doi = {https://doi.org/10.1007/978-3-031-32606-6_22},

year = {2023},

date = {2023-05-27},

urldate = {2023-05-27},

booktitle = {International Conference on Robotics in Alpe-Adria-Danube Region (RAAD). },

volume = {135},

pages = {186 - 194},

publisher = {Cham: Springer Nature Switzerland.},

series = {Mechanisms and Machine Science},

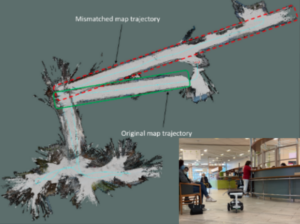

abstract = {Simultaneous localization and mapping (SLAM) algorithms are essential for the autonomous navigation of mobile robots. With the increasing demand for autonomous systems, it is crucial to evaluate and compare the performance of these algorithms in real-world environments.

In this paper, we provide an evaluation strategy and real-world datasets to test and evaluate SLAM algorithms in complex and challenging indoor environments. Further, we analysed state-of-the-art (SOTA) SLAM algorithms using metrics such as absolute trajectory error, scale drift, and map accuracy and consistency. Our results demonstrate that SOTA SLAM algorithms often fail in challenging environments with dynamic objects and transparent or reflective surfaces. We also found that successful loop closures had a significant impact on the algorithms' performance. These findings highlight the need for further research to improve the robustness of SLAM algorithms in real-world scenarios.},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}


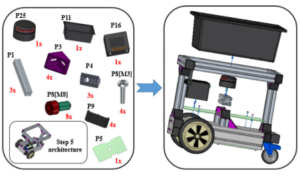

Nwankwo, Linus; Fritze, Clemens; Bartsch, Konrad; Rueckert, Elmar ROMR: A ROS-based Open-source Mobile Robot Journal Article In: HardwareX, vol. 15, pp. 1–29, 2023. @article{Nwankwo2023b,

title = {ROMR: A ROS-based Open-source Mobile Robot},

author = {Linus Nwankwo and Clemens Fritze and Konrad Bartsch and Elmar Rueckert},

url = {https://cloud.cps.unileoben.ac.at/index.php/s/8aXLXXPFAZ4wq54},

doi = {10.1016/j.ohx.2023.e00426},

year = {2023},

date = {2023-04-17},

urldate = {2023-04-17},

journal = {HardwareX},

volume = {15},

pages = {1--29},

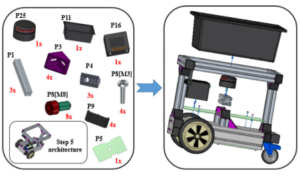

abstract = {Currently, commercially available intelligent transport robots capable of carrying loads of up to 90 kg can cost $5,000 or more. This makes real-world experimentation prohibitively expensive and limits the applicability of such systems to everyday home or industrial tasks. Aside from their high cost, most commercially available platforms are closed-source, platform-specific, or built on hardware and firmware that are difficult to customize. In this work, we present a low-cost, open-source, and modular alternative, referred to herein as “ROS-based open-source mobile robot (ROMR)”. ROMR uses off-the-shelf (OTS) components, additive manufacturing technologies, aluminium profiles, and a consumer hoverboard with high-torque brushless direct current (BLDC) motors. ROMR is fully compatible with the Robot Operating System (ROS), has a maximum payload of 90 kg, and costs less than $1,500. Furthermore, ROMR offers a simple yet robust framework for contextualizing simultaneous localization and mapping (SLAM) algorithms, an essential prerequisite for autonomous robot navigation. The robustness and performance of ROMR were validated through real-world and simulation experiments. All design, construction, and software files are freely available online under the GNU GPL v3 license at https://doi.org/10.17605/OSF.IO/K83X7. A descriptive video of ROMR can be found at https://osf.io/ku8ag.},

keywords = {},

pubstate = {published},

tppubtype = {article}

}
