BSc. Thesis, Merisa Salkic – Smart conversations: Enhancing robotic task execution through advanced language models

Supervisors: Linus Nwankwo, M.Sc.; Univ.-Prof. Dr Elmar Rückert

Start date: As soon as possible

Theoretical difficulty: Mid

Practical difficulty: High

Abstract

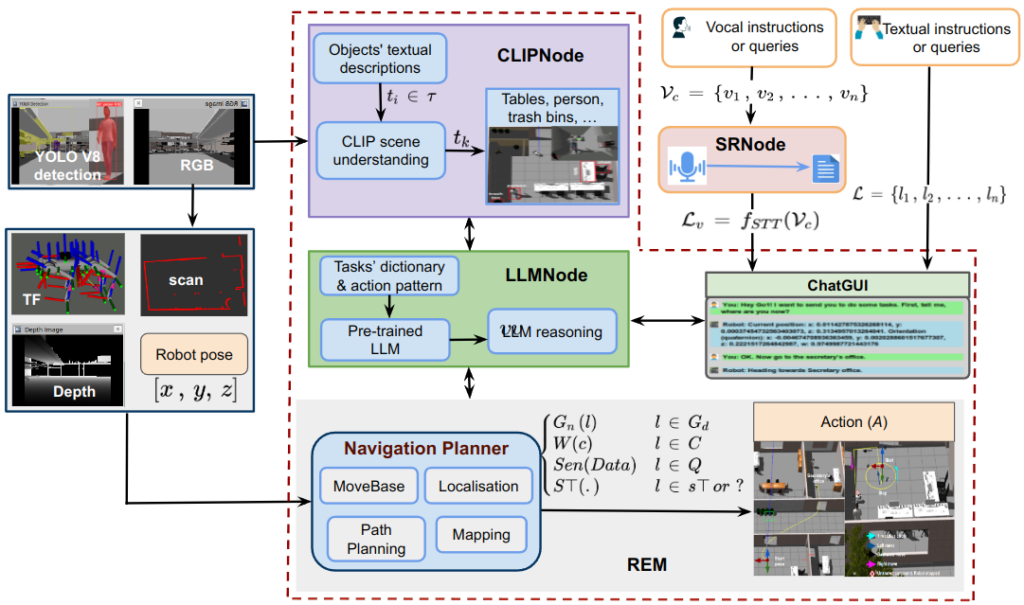

In this thesis, we aim to enhance the method proposed in [1] for robust natural human-autonomous agent interaction through verbal and textual conversations.

The primary focus is to develop a system that can improve natural language conversations, understand the semantic context of the robot’s task environment, and abstract this information into actionable commands or queries. This will be achieved by leveraging the capabilities of pre-trained large language models (LLMs) such as GPT-4, visual language models (VLMs) such as CLIP, and audio language models (ALMs) such as AudioLM.
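As a rough illustration of what "abstracting into actionable commands" could look like, the sketch below turns a JSON-formatted LLM reply into a validated command object. Everything in it is an assumption made for illustration: the `RobotCommand` type, the `ALLOWED_ACTIONS` vocabulary, the JSON reply format, and the fall-back-to-stop policy are hypothetical and are not taken from the method in [1].

```python
import json
from dataclasses import dataclass

# Hypothetical action vocabulary (assumption); the framework in [1] may define its own.
ALLOWED_ACTIONS = {"navigate", "rotate", "stop", "describe"}


@dataclass
class RobotCommand:
    """Illustrative command container (not part of the original framework)."""
    action: str
    target: str = ""
    value: float = 0.0


def parse_llm_reply(reply: str) -> RobotCommand:
    """Turn a JSON-formatted LLM reply into a validated command.

    Falls back to a 'stop' command if the reply is not a JSON object
    or names an action outside ALLOWED_ACTIONS.
    """
    try:
        data = json.loads(reply)
    except json.JSONDecodeError:
        return RobotCommand("stop")
    if not isinstance(data, dict):
        return RobotCommand("stop")

    action = str(data.get("action", "")).lower()
    if action not in ALLOWED_ACTIONS:
        return RobotCommand("stop")

    # A non-numeric or missing value defaults to 0.0.
    try:
        value = float(data.get("value", 0.0))
    except (TypeError, ValueError):
        value = 0.0

    return RobotCommand(action, str(data.get("target", "")), value)


if __name__ == "__main__":
    print(parse_llm_reply('{"action": "navigate", "target": "kitchen"}'))
    print(parse_llm_reply("Sorry, I did not understand that."))
```

Validating the reply against a fixed vocabulary before anything reaches the robot is one possible design choice: free-form model output never maps directly to motion, and unparseable replies degrade to a safe default.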

Tentative Work Plan

To achieve the objectives, the following concrete tasks will be focused on:

- Initialisation and Background:

- Study the concept of LLMs, VLMs, and ALMs.

- Study how LLMs, VLMs, and ALMs can be grounded for autonomous robotic tasks.

- Familiarise yourself with the methods at the project website – https://linusnep.github.io/MTCC-IRoNL/.

- Setup and Familiarity with the Simulation Environment:

- Build a robot model (URDF) for the simulation (optional if you wish to use the existing one).

- Set up the ROS framework for the simulation (Gazebo, RViz).

- Recommended programming tools: C++, Python, MATLAB.

- Coding:

- Improve the existing code of the method proposed in [1] to incorporate the aforementioned modalities (the code will be provided to the student).

- Integrate other LLMs (e.g., LLaMA) and VLMs (e.g., GLIP) into the framework and compare their performance with the baseline (GPT-4 and CLIP).

- Intermediate Presentation:

- Present the results of your background study or what you have done so far.

- Detailed planning of the next steps.

- Simulation & Real-World Testing (If Possible):

- Test your implemented model with a Gazebo-simulated quadruped or differential drive robot.

- Perform real-world testing of the developed framework with our Unitree Go1 quadruped robot or our Segway RMP 220 Lite robot.

- Analyse and compare the model’s performance in real-world scenarios versus simulations with the different LLM and VLM pipelines.

- Optimise the Framework for Performance and Efficiency (Optional):

- Validate the model to identify bottlenecks within the robot’s task environment.

- Documentation and Thesis Writing:

- Document the entire process, methodologies, and tools used.

- Analyse and interpret the results.

- Draft the project report or thesis, ensuring that the primary objectives are achieved.

- Research Paper Writing (Optional)

Related Work

[1] Linus Nwankwo and Elmar Rueckert. 2024. The Conversation is the Command: Interacting with Real-World Autonomous Robots Through Natural Language. In Companion of the 2024 ACM/IEEE International Conference on Human-Robot Interaction (HRI ’24). Association for Computing Machinery, New York, NY, USA, 808–812. https://doi.org/10.1145/3610978.3640723.

[2] Linus Nwankwo and Elmar Rueckert. 2024. Multimodal Human-Autonomous Agents Interaction Using Pre-Trained Language and Visual Foundation Models. arXiv preprint arXiv:2403.12273.