The Chair of Cyber-Physical Systems at Montanuniversität Leoben is offering a fully funded PhD position (100% employment) starting as soon as possible.

• Employment Type: Full-time doctoral student (40 hours/week)

• Salary: €3,714.80/month (14 times per year), Salary Group B1 according to Uni-KV

• Duration: The position includes the opportunity to complete a PhD

About the Position

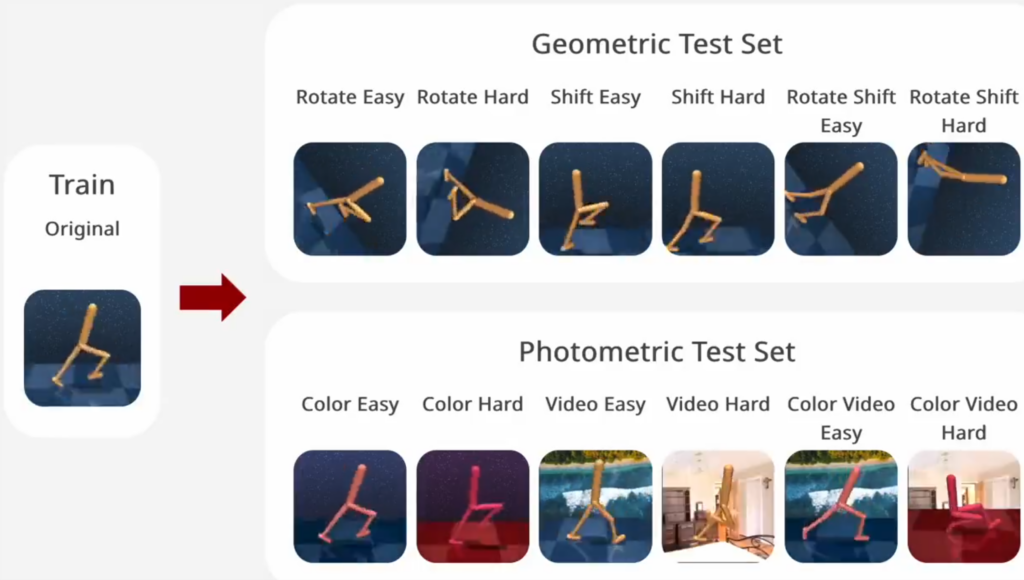

We are at the forefront of developing cutting-edge machine learning algorithms for detecting, tracking, and classifying material flows using various advanced sensing technologies, including:

• RGB cameras

• 3D imaging

• LiDAR

• Hyperspectral cameras

• Raman spectroscopy devices

• Tactile sensors

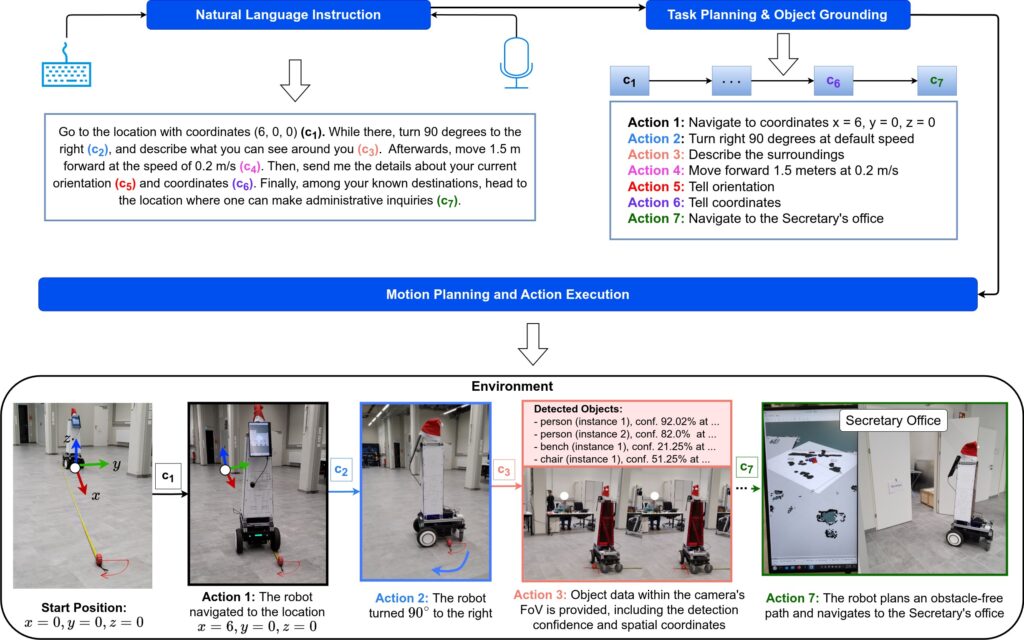

The resulting model predictions are used for automated data labeling, real-time process monitoring, and autonomous object manipulation.

This PhD research will focus on multiple aspects of these topics, with a special emphasis on multimodal sensing and robotic grasping. The goal is to enhance robotic perception and interaction by integrating machine learning with tactile sensing technologies.

What we offer

•A dynamic and collaborative research environment in artificial intelligence and robotics

•The opportunity to develop your own research ideas and work on cutting-edge projects

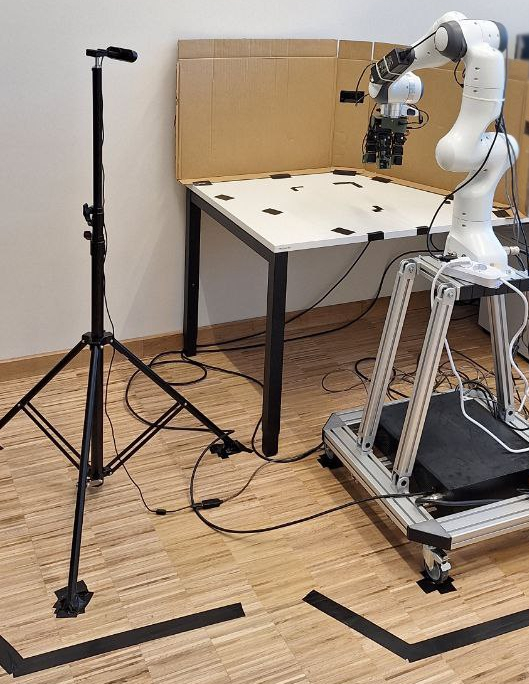

• Access to state-of-the-art lab facilities

•International research collaborations and conference travel opportunities

•Targeted career guidance for a successful academic and research career

Plus a great lab space shown in this image.

Requirements

• Master’s degree in Computer Science, Physics, Telematics, Statistics, Mathematics, Electrical Engineering, Mechanics, Robotics, or a related field

• Strong motivation for scientific research and publications

• Ability to work independently and collaboratively in an interdisciplinary team

• Interest in writing a PhD dissertation

Desired additional qualifications

• Programming experience in C, C++, C#, Java, MATLAB, Python, or a similar language

• Familiarity with AI libraries and frameworks (e.g., TensorFlow, PyTorch)

• Strong English communication skills (written and spoken)

• Willingness to travel for research collaborations and technical presentations

Application & Materials

A complete application includes:

1. Detailed Curriculum Vitae (CV)

2. Letter of Motivation

3. Master’s Thesis (PDF or link)

4. Academic Certificates (Bachelor’s and Master’s degrees)

Optional but beneficial:

5. Letter(s) of Recommendation

6. Contact Information for References (name, email, phone)

7. Previous Publications (PDFs or links)

Application deadline: Open until the position is filled.

Application via email: Please send your application files to rueckert@unileoben.ac.at

Montanuniversität Leoben intends to increase the number of women on its faculty and therefore specifically invites applications from women. Among equally qualified applicants, women will receive preferential consideration.