Meeting Notes February 2023

Meeting 02/02

Research

- Follow up on CR-VAE

- Files in the papers folder

- Write simple code to run the experiments as described in the paper

- Upload to Gitea

- Create a webpage for the CR-VAE paper

- Wait for reviews (March 13)

- Rebuttal (March 19)

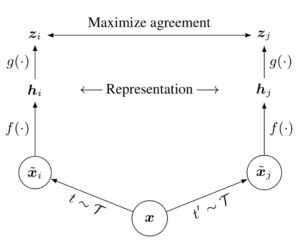

- Extend the representation learning work towards disentanglement

- Literature Review

- Dig deeper into Transformers

- Literature Review on SOTA RL algorithms

- Read and implement basic and SOTA RL algorithms

- This could also serve as the basis for an RL course.

- Use CR-VAE with SOTA RL algorithms

- First experiments with SAC

- Explore sample efficiency

- Explore gradient flow ablations

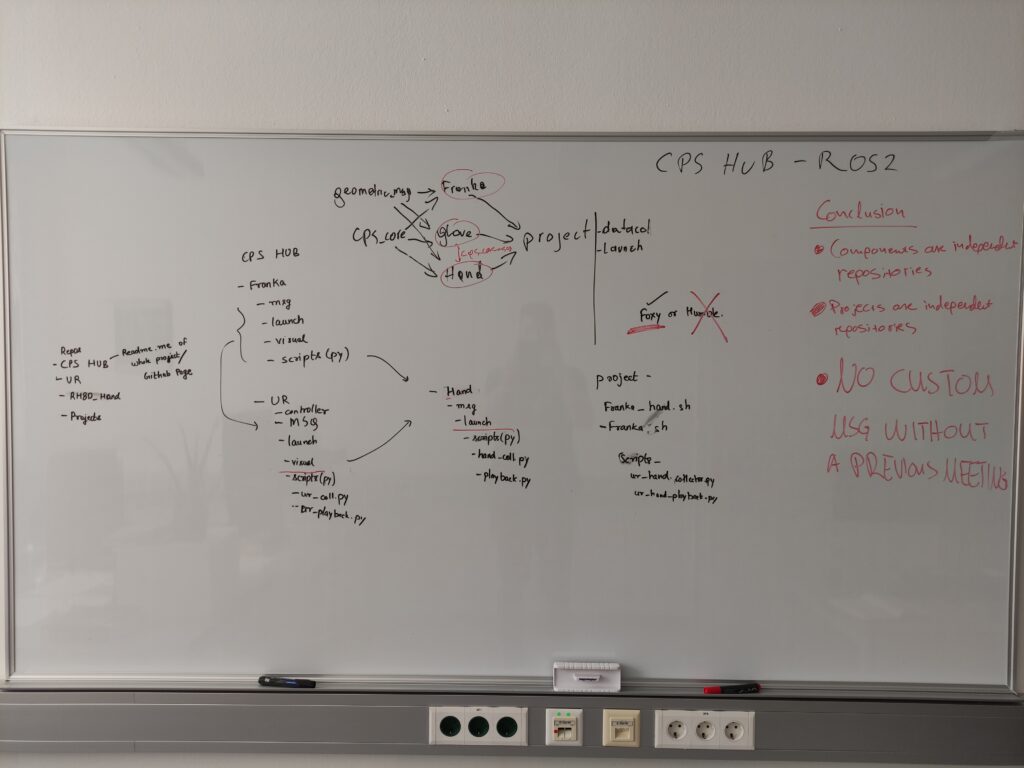

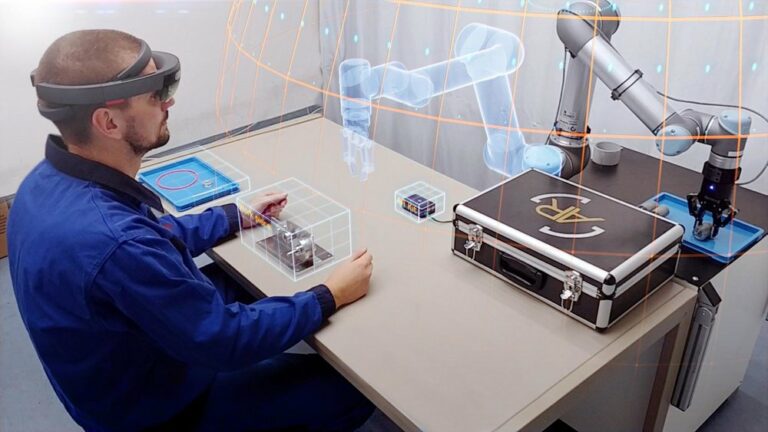

- Develop an AR-ROS2 framework

- Create a minimal working example of controlling a physical robot (UR3) with a HoloLens 2

M.Sc. Students/Interns

- Melanie

- Thesis Review

- Code submission

- Sign Language project

- Define the project more clearly

- Feedback needed

- Send study details to the applicant

- AR project

- Is it within the scope of our research?

ML Assistantship

- Syllabus

- Prepare exercises

Miscellaneous

- Ph.D. registration

- Mentor

- Ronald Ortner?

- Someone from another university?

- Retreats

- expectations/requirements

- Summer School

- Neural Coffee (ML Reading Group)

- When: Every Friday 10:00-12:00

- Where: CPS Kitchen (?)

- Poster

- Floor and Desk Lamps

Meeting 16/02

Research

- create a new research draft

- implement CURL

- replace CURL's contrastive representations with CR-VAE representations

- Literature review on unsupervised learning (Hinton’s work) to find angles that have room for improvement

- write a journal paper on that

Summer School

- CV & motivation letter feedback

- Applied

M.Sc. Students/Interns

- Melanie: thesis review done

- Iye Szin:

- Gave her resources to study (ML/NN/ROS2)

- Discussed a plan for the internship

Ph.D. registration

- PhD in Computer Science

- Not possible

- probably doesn’t matter(?)

- Call with Dean of Studies

- Mentor

- I would like someone with experience in sample-efficient and robust reinforcement learning, ideally also in robot learning

- Someone who can also extend my scientific network

- Can I ask professors from other universities?

- Mentor Candidates

- Marc Toussaint, Learning and Intelligent Systems lab, TU Berlin, Germany

- Abhinav Valada, Robot Learning Lab, University of Freiburg, Germany

- Georgia Chalvatzaki, IAS, TU Darmstadt, Germany

- Edward Johns, Robot Learning Lab, Imperial College London, UK

- Sepp Hochreiter, Institute of Machine Learning, JKU Linz, Austria

- Write a paper with a mentor

ML Course

- Jupyter notebooks or the old code? If Jupyter notebooks, why not Google Colab?

- What will the lectures cover, so that I can prepare the exercises accordingly?

- lectures are up

- 20% of the final exam is from the lab exercises

- Decide on the lecture format

- Find an appropriate dataset

Miscellaneous

Science Breakfast @MUL: 14/02 11:00-12:00

ANYmal robot at the Mining chair on 15/02?

Effective Communication In Academia Seminar

- Feedback on CPS presentation template:

- Size: match PowerPoint's slide size (a wider, more rectangular format)

- Outline (left outline)

- We could skip the subsections and keep only the top-level sections

- Make the fonts darker. They are not easily visible on a projector

- Colors

- The color of boxes (frames) must be darker; otherwise they are hard to distinguish from the white background on a projector

- Idea: Create a Google Slides template

- Easier to use

- Easier to add arrows, circles, etc.

- Easier to work with tables

Meeting 28/02

Research

- air hockey challenge

M.Sc. Students/Interns

- Iye Szin:

- starts 2 March

- Elmar has to sign documents (permanent position)

- Allocation of HW

- transponder

Ph.D. registration

- Mentor can be from anywhere

- The mentor has to be a recognized scientist (with a “venia docendi” if they are from the German-speaking world)

- No courses or ECTS credits needed

- The mentor must not be a reviewer of the thesis, but can be an examiner.

- Email to Marc Toussaint?

- Officially: no obligations

- Unofficially: propose joint research

ML Course

- Google Colab

- Uses the Jupyter notebook format.

- Runs online

- Even supports limited access to GPU/TPU

- Speeds up the learning process

- Do we need LaTeX?

- yes

- Update the lab slides accordingly

- Submission to a cloud folder

- .ipynb file

- report

- zipped and named: firstname_lastname_m00000_assignment1.zip

- Online lectures: Webex is more stable

- Google Slides template

- Grading

- 100 pts

- LaTeX report: +10

- optional exercise: +20

- Tweedback: 3 questions
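A minimal sketch of how incoming lab submissions could be checked against the agreed naming scheme firstname_lastname_m00000_assignment1.zip. It assumes that the student ID is "m" followed by digits (the notes do not fix the number of digits), that first and last names are single words made of letters, and that the assignment number is an integer. The pattern and the function name parse_submission_name are illustrative only and not part of any existing course tooling.

```python
import re
from typing import Dict, Optional

# Hypothetical pattern for "firstname_lastname_m00000_assignmentN.zip".
# [^\W\d_]+ matches one or more Unicode letters (no digits, no underscores),
# so names such as "Müller" are accepted. Assumption: names are single words.
SUBMISSION_RE = re.compile(
    r"(?P<first>[^\W\d_]+)_(?P<last>[^\W\d_]+)"
    r"_(?P<student_id>m\d+)_assignment(?P<number>\d+)\.zip"
)


def parse_submission_name(filename: str) -> Optional[Dict[str, str]]:
    """Return the name parts if the file follows the scheme, else None."""
    match = SUBMISSION_RE.fullmatch(filename)
    return match.groupdict() if match else None


if __name__ == "__main__":
    print(parse_submission_name("firstname_lastname_m00000_assignment1.zip"))
    print(parse_submission_name("lastname_assignment1.zip"))  # wrong scheme -> None
```

Because fullmatch is used, files with extra suffixes or a different archive format (for example .rar) are rejected rather than partially matched.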

Miscellaneous

- IAS retreat

- Melanie’s presentation