Supervisors: Linus Nwankwo, M.Sc.; Univ.-Prof. Dr Elmar Rückert

Start date: ASAP from October 2021

Theoretical difficulty: low

Practical difficulty: mid

Abstract

Nowadays, robots used to survey indoor and outdoor environments are operated in one of three modes: fully autonomous mode, where the robot makes decisions by itself and has complete control of its actions; semi-autonomous mode, where the robot’s decisions and actions are controlled partly manually (by a human) and partly autonomously (by the robot); and full manual mode, where the robot’s actions and decisions are controlled by a human. In full manual mode, the robot can be operated using a teach pendant, computer keyboard, joystick, mobile device, etc.

Recently, the Robot Operating System (ROS) has provided roboticists with easy and efficient tools to visualize and debug robot data and to teleoperate or control robots, with both hardware and software compatibility on the ROS framework. Unfortunately, the Lego Mindstorms EV3 is not yet strongly supported on the ROS platform, since the ROS master is too heavy for the EV3's RAM [2]. This limits our chances of exploring the full possibilities of the bricks.

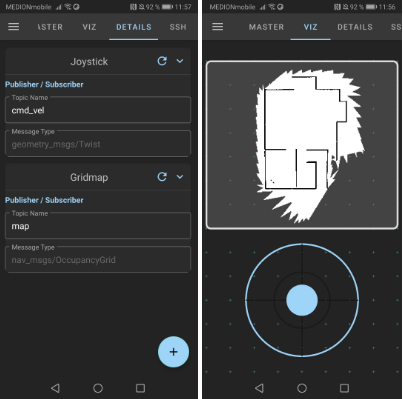

However, in this project, we aim to get ROS running on the Lego Mindstorms EV3 so that we can teleoperate or control it on the ROS platform from a mobile device, leveraging the ROS-Mobile framework developed by Rottmann et al. [1].

Tentative Work Plan

To achieve our aim, the work will focus on the following concrete tasks:

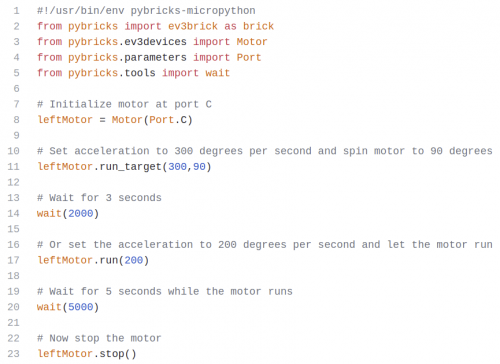

- Configure and run ROS on the Lego EV3 Mindstorms

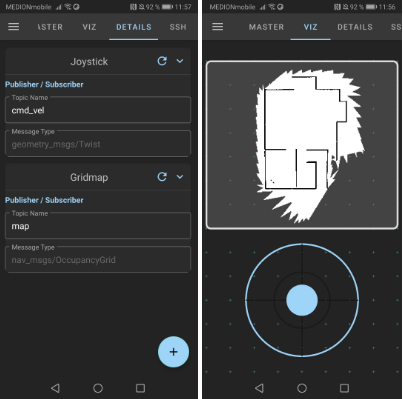

- Set up a network connection between the ROS-Mobile device and the EV3 robot

- Teleoperate the EV3 robot on the ROS-Mobile platform

- Perform Simultaneous Localization and Mapping (SLAM) for indoor applications with the EV3 robot
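To make the teleoperation task more concrete, the following is a minimal sketch of how a velocity command from a mobile joystick (forward speed and turn rate) could be converted into left and right wheel speeds. It assumes the EV3 robot is built as a two-wheeled differential drive, which the project description does not state. The function names, the wheel base of 0.12 m and the wheel radius of 0.028 m are hypothetical placeholders, not measured values, and the sketch deliberately avoids any ROS or EV3 library calls.

```python
from dataclasses import dataclass


@dataclass
class WheelSpeeds:
    """Angular speed of each drive wheel in rad/s."""
    left: float
    right: float


def twist_to_wheel_speeds(linear, angular, wheel_base=0.12, wheel_radius=0.028):
    """Convert a body velocity command into wheel angular speeds.

    linear:  forward speed in m/s
    angular: turn rate in rad/s (positive = counter-clockwise)
    wheel_base, wheel_radius: assumed robot geometry in metres (placeholders)
    """
    # Differential-drive kinematics: the outer wheel moves faster in a turn.
    v_left = linear - angular * wheel_base / 2.0
    v_right = linear + angular * wheel_base / 2.0
    return WheelSpeeds(v_left / wheel_radius, v_right / wheel_radius)


def clamp_speeds(speeds, max_speed):
    """Scale both wheels by the same factor so neither exceeds max_speed.

    Scaling both wheels together preserves the commanded turning radius.
    """
    peak = max(abs(speeds.left), abs(speeds.right))
    if peak <= max_speed or peak == 0.0:
        return speeds
    scale = max_speed / peak
    return WheelSpeeds(speeds.left * scale, speeds.right * scale)


if __name__ == "__main__":
    # Example: drive forward at 0.1 m/s while turning left at 0.5 rad/s.
    command = twist_to_wheel_speeds(0.1, 0.5)
    print(clamp_speeds(command, max_speed=15.0))
```

In a ROS setup, the `linear` and `angular` inputs would typically come from a velocity message published by the mobile device, and the resulting wheel speeds would be passed to the motor driver on the EV3. Both of those interfaces are left out here because they depend on how ROS ends up being configured on the brick.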

References

[1] Nils Rottmann et al., https://github.com/ROS-Mobile/ROS-Mobile-Android, 2020

[2] ROS.org, http://wiki.ros.org/Robots/EV3