Supervisor: Linus Nwankwo, M.Sc.;

Univ.-Prof. Dr Elmar Rückert

Start date: ASAP, e.g., 1st of October 2021

Theoretical difficulty: low

Practical difficulty: high

Introduction

The SLAM problem as described in [3] is the problem of building a map of the environment while simultaneously estimating the robot’s position relative to the map given noisy sensor observations. Probabilistically, the problem is often approached by leveraging the Bayes formulation due to the uncertainties in the robot’s motions and observations.
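As a sketch of what the Bayes formulation looks like, the online SLAM posterior can be written recursively. The notation here is an assumption and is not taken from [3]: $x_t$ is the robot pose at time $t$, $m$ the map, $z_{1:t}$ the observations, $u_{1:t}$ the motion commands and $\eta$ a normalising constant. The map is assumed to be static and the motion is assumed to be Markovian.

$$
p(x_t, m \mid z_{1:t}, u_{1:t}) = \eta \, p(z_t \mid x_t, m) \int p(x_t \mid x_{t-1}, u_t) \, p(x_{t-1}, m \mid z_{1:t-1}, u_{1:t-1}) \, \mathrm{d}x_{t-1}
$$

The term $p(z_t \mid x_t, m)$ is the observation model and $p(x_t \mid x_{t-1}, u_t)$ is the motion model; the noise in both is what makes a probabilistic treatment necessary.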

SLAM has found many applications, not only in navigation, augmented reality and autonomous vehicles (e.g. self-driving cars and drones), but also in indoor and outdoor delivery robots, intelligent warehousing, etc. While many solutions to the SLAM problem have been presented in the literature, the reality is far different in challenging real-world scenarios, such as environments with few features or with geometric constraints.

Some of the most common challenges with SLAM are the accumulation of errors over time due to inaccurate pose estimation (localization errors) as the robot moves from the start location to the goal location, and the high computational cost of image processing, point cloud processing and optimization [1]. These challenges can cause a significant deviation from the actual values and, at the same time, lead to inaccurate localization if the images and point clouds are not processed at a very high frequency [2]. This also lowers the frequency with which the map is updated and hence reduces the overall efficiency of the SLAM algorithm.

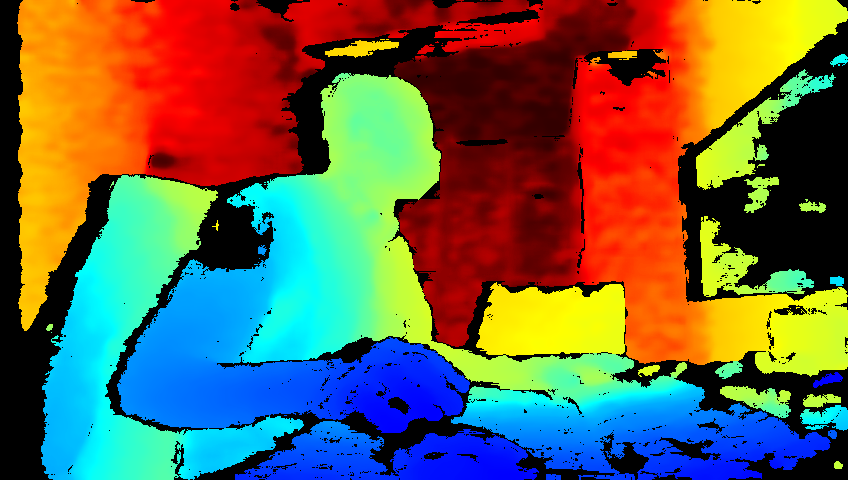

For this thesis, we propose to investigate in depth the visual or LiDAR SLAM approach using our state-of-the-art Intel RealSense cameras and light detection and ranging (LiDAR) sensors. The thesis will focus on the following concrete tasks:

Tentative Work Plan

- study the concept of visual or LiDAR-based SLAM as well as its application in the survey of an unknown environment.

- perform 2D/3D mapping in both static and dynamic environments.

- localise the robot in the environment using the adaptive Monte Carlo localization (AMCL) approach.

- write a path planning algorithm to navigate the robot from the starting point to the destination while avoiding collisions with obstacles.

- real-time experimentation, simulation (MATLAB, ROS & Gazebo, Rviz, C/C++, Python etc.) and validation.
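To give a feel for the localization task in the work plan, the following is a minimal sketch of plain Monte Carlo localization (a particle filter) in one dimension. It is not the AMCL implementation itself: AMCL additionally adapts the number of particles (KLD-sampling), whereas this sketch keeps it fixed. The corridor setup, the function names `mcl_step` and `run_demo`, and all noise values are hypothetical and chosen only for illustration.

```python
import math
import random


def mcl_step(particles, control, measurement, wall_x, rng,
             motion_sigma=0.05, sensor_sigma=0.2):
    """One predict-weight-resample cycle of a 1D particle filter."""
    # Predict: apply the commanded displacement plus Gaussian motion noise.
    moved = [p + control + rng.gauss(0.0, motion_sigma) for p in particles]

    # Weight: Gaussian likelihood of the measured range to the wall.
    weights = []
    for p in moved:
        expected = wall_x - p
        err = (measurement - expected) / sensor_sigma
        weights.append(math.exp(-0.5 * err * err))

    # Fall back to uniform weights if every likelihood underflowed to zero.
    if sum(weights) == 0.0:
        weights = [1.0] * len(moved)

    # Resample: draw particles in proportion to their weights.
    return rng.choices(moved, weights=weights, k=len(moved))


def run_demo(seed=0, n_particles=500, n_steps=10):
    """Hypothetical scenario: a robot in a 10 m corridor ranging to the end wall."""
    rng = random.Random(seed)
    wall_x = 10.0   # assumed position of the wall (m)
    true_x = 2.0    # assumed true start position, unknown to the filter (m)
    step = 0.5      # assumed commanded displacement per time step (m)

    # Global localization: particles start uniformly along the corridor.
    particles = [rng.uniform(0.0, wall_x) for _ in range(n_particles)]

    for _ in range(n_steps):
        true_x += step
        # Simulated noisy range measurement to the wall.
        z = (wall_x - true_x) + rng.gauss(0.0, 0.2)
        particles = mcl_step(particles, step, z, wall_x, rng)

    # Pose estimate: mean of the resampled particle set.
    estimate = sum(particles) / len(particles)
    return true_x, estimate


if __name__ == "__main__":
    true_x, estimate = run_demo()
    print(f"true position: {true_x:.2f} m, estimate: {estimate:.2f} m")
```

On a real robot, the range model would be replaced by a LiDAR or depth-camera observation model against the map, and the state would be the full planar pose (x, y, heading) rather than a single coordinate.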

About the Laboratory

- additive manufacturing unit (3D and laser printing technologies).

- metallic production workshop.

- robotics unit (mobile robots, robotic manipulators, robotic hands, unmanned aerial vehicles (UAV)).

- sensors unit (Intel RealSense (LiDAR, depth and tracking cameras), Inertial Measurement Unit (IMU), OptiTrack cameras etc.).

- electronics and embedded systems unit (Arduino, Raspberry Pi, etc.).

Expression of Interest

Students interested in carrying out their Master of Science (M.Sc.) or Bachelor of Science (B.Sc.) thesis on the above topic should immediately contact or visit the Chair of Cyber Physical Systems.

Phone: +43 3842 402 – 1901

References

Localization and Mapping’, http://ais.informatik.uni-freiburg.de/teaching/ss12/robotics/slides/12-slam.pdf

Master Thesis

The final master thesis document can be downloaded here.