Supervisors: Univ.-Prof. Dr. Elmar Rückert and Nwankwo Linus M.Sc.

Theoretical difficulty: mid

Practical difficulty: mid

Humans naturally give directions (forward, backward, right, left, etc.) simply by pointing a finger in the intended direction, effortlessly and without saying a word. However, training a mobile robot to understand such gestures remains an open problem.

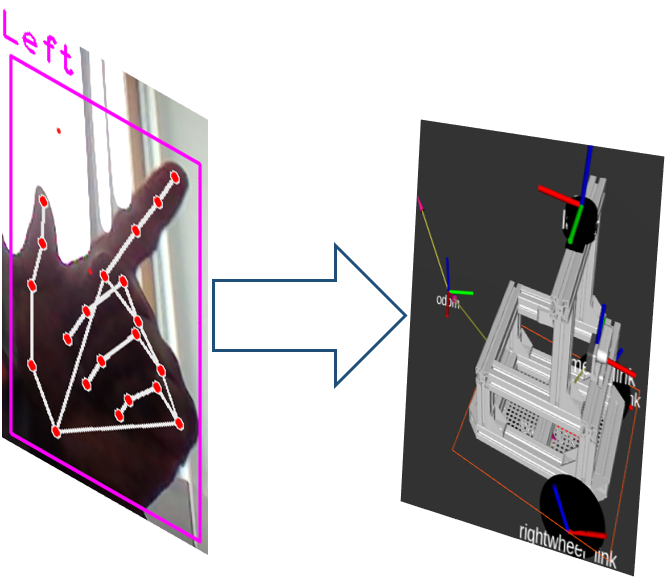

In this thesis, we propose finger-pose-based mobile robot navigation to make human-robot interaction more natural. This can be achieved by observing the Cartesian pose of the human's fingers with an RGB-D camera and translating it into linear and angular velocity commands for the robot. To this end, we will leverage computer vision algorithms and the ROS framework.
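As a rough illustration of the idea, the following minimal Python sketch deprojects two finger keypoints from pixel and depth values to 3D points and maps the resulting pointing direction to a velocity command. It is not the method to be developed in the thesis. The function names `deproject` and `pointing_to_twist`, the camera intrinsics, the speed limits, and the deadband are all hypothetical, and the camera frame is assumed to follow the common optical convention (x right, y down, z forward). Detecting the keypoints in the image is outside the scope of this sketch.

```python
import math
from typing import NamedTuple, Tuple

Point3D = Tuple[float, float, float]


class Twist2D(NamedTuple):
    linear: float   # forward velocity in m/s
    angular: float  # yaw rate in rad/s, positive = turn left


def deproject(u: float, v: float, depth_m: float,
              fx: float, fy: float, cx: float, cy: float) -> Point3D:
    """Pinhole deprojection of pixel (u, v) with depth in metres to a 3D
    point in the camera frame (assumed: x right, y down, z forward)."""
    x = (u - cx) * depth_m / fx
    y = (v - cy) * depth_m / fy
    return (x, y, depth_m)


def pointing_to_twist(base: Point3D, tip: Point3D,
                      max_linear: float = 0.3,
                      max_angular: float = 1.0,
                      deadband: float = 0.02) -> Twist2D:
    """Map the finger direction (base -> tip) to a velocity command.

    Only the horizontal components (x, z) are used. The limits and the
    deadband are illustrative values, not tuned parameters.
    """
    dx = tip[0] - base[0]  # component to the right of the camera
    dz = tip[2] - base[2]  # component away from the camera
    if math.hypot(dx, dz) < deadband:
        # Finger is too short in the horizontal plane to trust: stop.
        return Twist2D(0.0, 0.0)
    # Heading of the finger: 0 = straight ahead, positive = to the left.
    heading = math.atan2(-dx, dz)
    return Twist2D(max_linear * math.cos(heading),
                   max_angular * math.sin(heading))


if __name__ == "__main__":
    # Hypothetical intrinsics for a 640x480 depth image.
    fx = fy = 600.0
    cx, cy = 320.0, 240.0
    base = deproject(320, 240, 1.00, fx, fy, cx, cy)
    tip = deproject(320, 240, 1.10, fx, fy, cx, cy)
    print(pointing_to_twist(base, tip))
```

In a ROS setup, the two values would typically be written to the `linear.x` and `angular.z` fields of a `geometry_msgs/Twist` message and published on the robot's velocity command topic. Scaling with the cosine and sine of the heading is one simple design choice among many: pointing away from the camera drives forward, pointing back towards it reverses, and pointing sideways turns in place.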

The prerequisites for this project are a basic understanding of Python or C++ programming, OpenCV, and ROS.

Tentative work plan

This thesis will focus on the following concrete tasks:

- study the concept of visual navigation of mobile robots

- develop a hand detection and tracking algorithm in Python or C++

- apply the developed algorithm to navigate a simulated mobile robot

- real-time experiments

- thesis writing

References

- Shuang Li, Jiaxi Jiang, Philipp Ruppel, Hongzhuo Liang, Xiaojian Ma, Norman Hendrich, Fuchun Sun, Jianwei Zhang, “A Mobile Robot Hand-Arm Teleoperation System by Vision and IMU”, IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), October 25-29, 2020, Las Vegas, NV, USA.