Supervisors: Linus Nwankwo, M.Sc.; Univ.-Prof. Dr Elmar Rückert

Start date: As soon as possible

Theoretical difficulty: Mid

Practical difficulty: High

Abstract

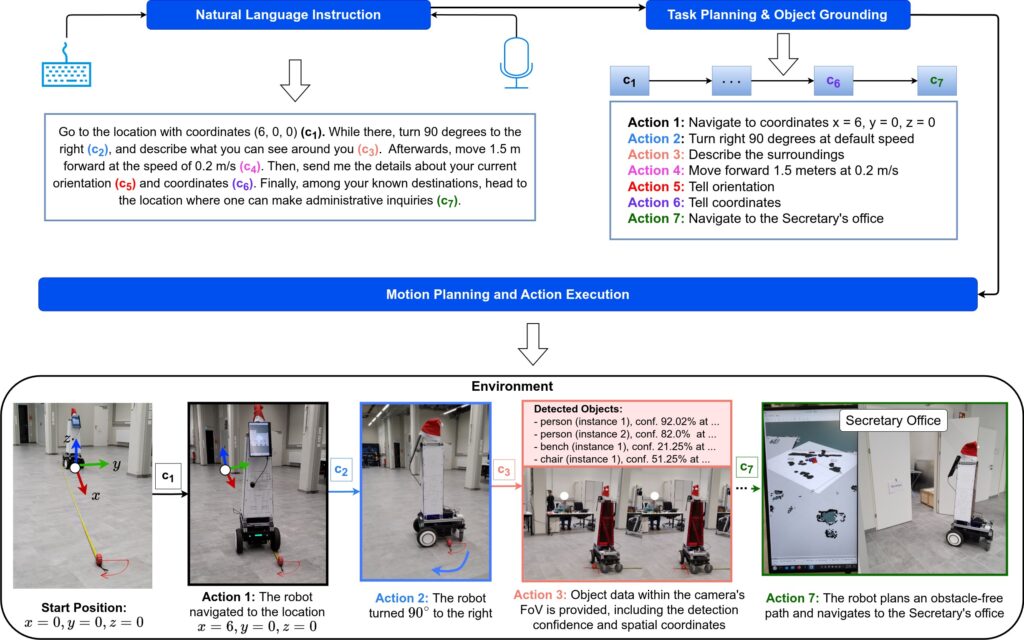

The goal of this thesis is to enhance the method proposed in [1] to enable autonomous robots to effectively interpret open-ended language commands, plan actions, and adapt to dynamic environments.

The scope is limited to grounding the semantic understanding of large-scale pre-trained language and multimodal vision-language models in physical sensor data, so that autonomous agents can execute complex, long-horizon tasks without task-specific programming. The expected outcomes include a unified framework for language-driven autonomy, a method for cross-modal alignment, and real-world validation.

Tentative Work Plan

To achieve these objectives, the thesis will focus on the following concrete tasks:

- Background and Setup:

- Study LLM-for-robotics papers (e.g., ReLI [1], Code-as-Policies [2], ProgPrompt [3]) and vision-language models (e.g., CLIP, LLaVA).

- Set up a ROS/Isaac Sim simulation environment and build a robot model (URDF) for the simulation (optional if you wish to use an existing one).

- Familiarise yourself with how LLMs and VLMs can be grounded for short-horizon robotic tasks (e.g., “Move towards the {colour} block near the {object}”) in static environments.

- Recommended programming tools: C++, Python, MATLAB.

- Modular Pipeline Design:

- Speech/Text (Task Instruction) ⇾ LLM (Task Planning) ⇾ CLIP (Object Grounding) ⇾ Motion Planner (e.g., move towards the {colour} block near the {object}) ⇾ Execution (in simulation or in a real-world environment).

- Intermediate Presentation:

- Present the results of your background study and the work you have completed so far.

- Present a detailed plan for the next steps.

- Implementation & Real-World Testing (If Possible):

- Test the implemented pipeline with a Gazebo-simulated quadruped or differential drive robot.

- Perform real-world testing of the developed framework with our Unitree Go1 quadruped robot or with our Segway RMP 220 Lite robot.

- Analyse and compare the model’s performance in real-world scenarios versus simulation across the different LLM and VLM pipelines.

- Validate with 50+ language commands in both simulation and the real world.

- Optimise the Pipeline for Performance and Efficiency (Optional):

- Evaluate the model to identify bottlenecks within the robot’s task environment.

- Documentation and Thesis Writing:

- Document the entire process, methodologies, and tools used.

- Analyse and interpret the results.

- Draft the thesis, ensuring that the primary objectives are achieved.

- Chapters: Introduction, Background (LLMs/VLMs in robotics), Methodology, Results, Conclusion.

- Deliverables: Code repository, simulation demo video, thesis document.

- Research Paper Writing (Optional)
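
As a starting point for the Modular Pipeline Design task, the stage sequence (instruction ⇾ task planning ⇾ object grounding ⇾ motion planning) could be prototyped roughly as follows. This is only a minimal sketch under strong assumptions: every name in it (`Detection`, `plan_task`, `ground_object`, `plan_motion`, `run_pipeline`) is hypothetical, the regular expression merely stands in for the LLM planner, the nearest-match lookup stands in for CLIP-based grounding, and the straight-line waypoints stand in for a real motion planner. It is not the method of [1].

```python
import math
import re
from dataclasses import dataclass


@dataclass
class Detection:
    """Hypothetical detected object: label, colour, and 2D position in metres."""
    label: str
    colour: str
    x: float
    y: float


def plan_task(instruction):
    """Stand-in for the LLM planner: parse the template command with a regex."""
    pattern = r"move towards the (\w+) block near the (\w+)"
    match = re.search(pattern, instruction.lower())
    if match is None:
        raise ValueError(f"Unsupported instruction: {instruction!r}")
    return {"action": "move_to", "colour": match.group(1), "landmark": match.group(2)}


def ground_object(plan, scene):
    """Stand-in for CLIP grounding: pick the matching block closest to the landmark."""
    landmarks = [d for d in scene if d.label == plan["landmark"]]
    blocks = [d for d in scene if d.label == "block" and d.colour == plan["colour"]]
    if not landmarks or not blocks:
        raise LookupError("Could not ground the instruction in the scene")
    landmark = landmarks[0]
    return min(blocks, key=lambda b: math.hypot(b.x - landmark.x, b.y - landmark.y))


def plan_motion(start, target, step=0.5):
    """Stand-in for the motion planner: straight-line waypoints at most `step` metres apart."""
    dx, dy = target.x - start[0], target.y - start[1]
    n = max(1, math.ceil(math.hypot(dx, dy) / step))
    return [(start[0] + dx * i / n, start[1] + dy * i / n) for i in range(1, n + 1)]


def run_pipeline(instruction, scene, start=(0.0, 0.0)):
    """Chain the stages; execution (simulation or real robot) would consume the waypoints."""
    plan = plan_task(instruction)
    target = ground_object(plan, scene)
    return plan_motion(start, target)


if __name__ == "__main__":
    # Hypothetical scene; in practice this would come from the robot's perception stack.
    scene = [
        Detection("chair", "", 2.0, 0.0),
        Detection("block", "red", 2.0, 1.0),
        Detection("block", "red", -3.0, -3.0),
    ]
    for waypoint in run_pipeline("Move towards the red block near the chair", scene):
        print(waypoint)
```

Keeping each stage behind a plain function makes it straightforward to swap a stub for an actual LLM, VLM, or planner later, and to loop `run_pipeline` over a list of test commands when measuring success rates in simulation and on the real robot.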