Univ.-Prof. Dr. Elmar Rueckert taught this course at the University of Luebeck in the summer semester 2020.

Teaching Assistant:

Honghu Xue, M.Sc.

Language:

English only

Course Details

The lecture Reinforcement Learning belongs to the Module Robot Learning (RO4100).

In the winter semester, Prof. Dr. Elmar Rueckert is teaching the course Probabilistic Machine Learning – PML (RO5101 T).

In the summer semester, Prof. Dr. Elmar Rueckert is teaching the course Reinforcement Learning – RL (RO4100 T).

Important Remarks

- Students will receive a single grade for the Module Robot Learning (RO4100) based on the average grade of PML and RL (rounded down in favor of the students).

- This course is organized through online lectures and exercises. Details on the organization will be discussed in our

FIRST MEETING: 17.04.2020 12:15-13:45

using the WEBEX tool. Please follow the instructions of the ITSC here to set up your computer. Click on the links to create a Google Calendar event, join the WEBEX meeting, or access the online slides.

Dates & Times of the Online Webex Meetings

- Lectures are organized on FRIDAYS, 12:15-13:45, WEBEX Link

- Exercises are organized on THURSDAYS, 09:15-10:00, WEBEX Link

Course description

- Introduction to Robotics and Reinforcement Learning (Refresher on Robotics, kinematics, model learning and learning feedback control strategies).

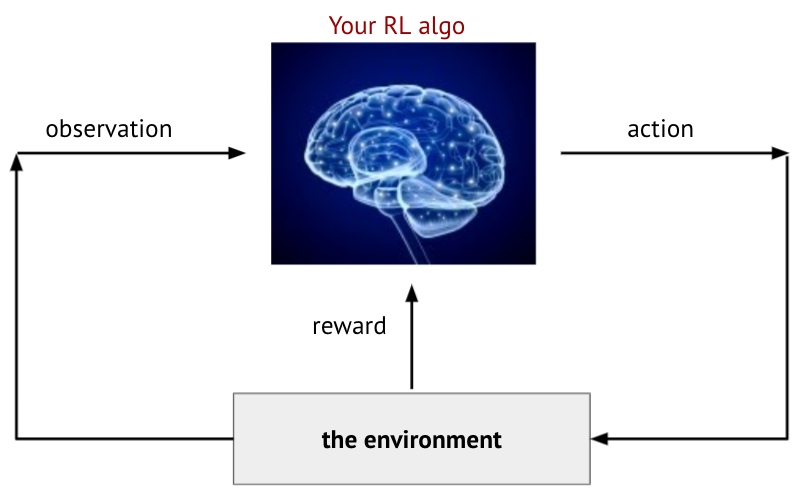

- Foundations of Decision Making (Reward Hypothesis, Markov Property, Markov Reward Process, Value Iteration, Markov Decision Process, Policy Iteration, Bellman Equation, Link to Optimal Control).

- Principles of Reinforcement Learning (Exploration and Exploitation strategies, On & Off-policy learning, model-free and model-based policy learning, Algorithmic principles: Q-Learning, SARSA, (Multi-step) TD-Learning, Eligibility Traces).

- Deep Reinforcement Learning (Introduction to Deep Networks, Stochastic Gradient Descent, Function Approximation, Fitted Q-Iteration, (Double) Deep Q-Learning, Policy-Gradient approaches, Recent research results in Stochastic Deep Neural Networks).

The learning objectives / qualifications are

- Students gain a comprehensive understanding of basic decision-making theories, assumptions, and methods.

- Students learn to analyze the challenges in a reinforcement learning application and to identify promising learning approaches.

- Students will understand the difference between deterministic and probabilistic policies and can define underlying assumptions and requirements for learning them.

- Students understand and can apply advanced policy gradient methods to real-world problems.

- Students know how to analyze the learning results and improve the policy learner parameters.

- Students understand how the basic concepts are used in current state-of-the-art research in robot reinforcement learning and in deep neural networks.

Follow this link to register for the course: https://moodle.uni-luebeck.de

Requirements

Basic knowledge of Machine Learning and Neural Networks is required. It is highly recommended to attend one of the following courses (the list is not exhaustive) before this course: Probabilistic Machine Learning (RO 5101 T), Artificial Intelligence II (CS 5204 T), Machine Learning (CS 5450), or Medical Deep Learning (CS 4374). Students will also experiment with state-of-the-art Reinforcement Learning (RL) methods on benchmark RL simulators (OpenAI Gym, PyBullet), which requires strong Python programming skills; knowledge of PyTorch is preferred. All assignment-related materials have been tested on a Windows machine (Windows 10).

Grading

The course grades will be computed solely from the submitted reports for six assignments. The reports and the code have to be submitted (one report per team) to xue@rob.uni-luebeck.de. Please note the list of dates and deadlines below. Each assignment has a deadline of at least two weeks; some have a longer duration.

Please use LaTeX for writing your report.

Bonus Points

Students can earn Bonus Points (BP) during the lectures when all quiz questions are answered correctly (1 BP per lecture). In the assignments, BPs will be given when optional (and often challenging) tasks are implemented and discussed.

Materials for the Exercise

The course is accompanied by course work on policy search for discrete state and action spaces (grid world example), policy learning in continuous spaces using function approximation, and policy gradient methods in challenging simulated robotic tasks. The theoretical assignment questions are based on the lecture and on the first three sources in the Literature section. It is strongly recommended to read (or watch) these materials in parallel to attending the lectures. The assignments will include both written tasks and algorithmic implementations in Python. The tasks will be presented during the exercise sessions. The OpenAI Gym platform will be used as the simulation environment in the project work.
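To give a flavor of the grid world exercises, the following is a minimal sketch of tabular Q-learning with an epsilon-greedy policy. It is not taken from the course material: the 4x4 grid, the reward of -1 per step (0 on reaching the goal), the hyperparameters, and all function names are assumptions chosen for illustration, and only the Python standard library is used instead of OpenAI Gym.

```python
import random

# Hypothetical 4x4 grid world (an assumption, not the course environment):
# the agent starts in the top-left corner and must reach the bottom-right one.
SIZE = 4
START = (0, 0)
GOAL = (SIZE - 1, SIZE - 1)
ACTIONS = [(-1, 0), (1, 0), (0, -1), (0, 1)]  # up, down, left, right


def step(state, action):
    """Move one cell; a move off the grid leaves the state unchanged."""
    row = min(max(state[0] + ACTIONS[action][0], 0), SIZE - 1)
    col = min(max(state[1] + ACTIONS[action][1], 0), SIZE - 1)
    next_state = (row, col)
    done = next_state == GOAL
    reward = 0.0 if done else -1.0  # assumed reward: -1 per step, 0 at the goal
    return next_state, reward, done


def greedy_action(q, state):
    """Index of the action with the highest Q-value (first one on ties)."""
    return max(range(len(ACTIONS)), key=lambda a: q[state][a])


def q_learning(episodes=500, alpha=0.5, gamma=0.95, epsilon=0.1, seed=0):
    """Tabular Q-learning with an epsilon-greedy behavior policy."""
    rng = random.Random(seed)
    q = {(r, c): [0.0] * len(ACTIONS) for r in range(SIZE) for c in range(SIZE)}
    for _ in range(episodes):
        state, done = START, False
        while not done:
            # Explore with probability epsilon, otherwise act greedily.
            if rng.random() < epsilon:
                action = rng.randrange(len(ACTIONS))
            else:
                action = greedy_action(q, state)
            next_state, reward, done = step(state, action)
            # Off-policy TD target: bootstrap from the best next action.
            target = reward if done else reward + gamma * max(q[next_state])
            q[state][action] += alpha * (target - q[state][action])
            state = next_state
    return q


def greedy_path(q, max_steps=50):
    """Follow the greedy policy from START for at most max_steps moves."""
    state, path = START, [START]
    for _ in range(max_steps):
        state, _, done = step(state, greedy_action(q, state))
        path.append(state)
        if done:
            break
    return path


if __name__ == "__main__":
    q_table = q_learning()
    print(greedy_path(q_table))
```

Replacing `max(q[next_state])` in the target with the Q-value of the action actually taken in the next state would turn this sketch into SARSA, the on-policy counterpart listed in the course description.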

Literature

- Richard S. Sutton, Andrew Barto: Reinforcement Learning: An Introduction, second edition. The MIT Press, Cambridge, Massachusetts; London, England, 2018. Link to the online book (PDF)

- David Silver’s Reinforcement Learning online lecture series. Link to the online video and script

- Sergey Levine’s Deep Reinforcement Learning online lecture series. Link to the online video, Link to the script

- Csaba Szepesvári: Algorithms for Reinforcement Learning. Synthesis Lectures on Artificial Intelligence and Machine Learning, Morgan & Claypool, 2010.

- B. Siciliano, L. Sciavicco: Robotics: Modelling, Planning and Control. Springer, 2009.

- Puterman, Martin L. Markov decision processes: discrete stochastic dynamic programming. John Wiley & Sons, 2014.
