Supervisors: Fotios Lygerakis and Prof. Elmar Rueckert

Start Date: 1st March 2023

Theoretical difficulty: low

Practical difficulty: mid

Abstract

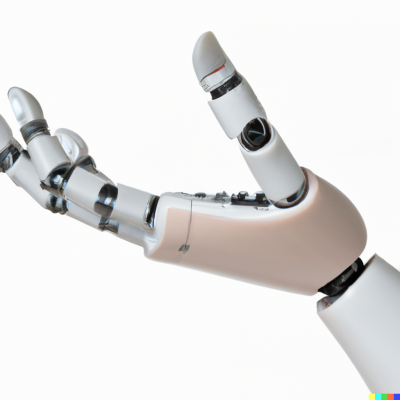

As the interaction with robots becomes an integral part of our daily lives, there is an escalating need for more human-like communication methods with these machines. This surge in robotic integration demands innovative approaches to ensure seamless and intuitive communication. Incorporating sign language, a powerful and unique form of communication predominantly used by the deaf and hard-of-hearing community, can be a pivotal step in this direction.

By doing so, we not only provide an inclusive and accessible mode of interaction but also establish a non-verbal and non-intrusive way for everyone to engage with robots. This step can pave the way for more holistic and natural human-robot interaction in the future.

Thesis

Project Description

The implementation of sign language in human-robot interaction will not only improve the user experience but will also advance the field of robotics and artificial intelligence.

This project will encompass five key elements.

- Human Gesture Recognition with CNNs and/or Transformers – Developing deep learning methods that recognize sign language gestures from camera images.

  - Letter-level

  - Word/Gloss-level

- Chat Agent with Large Language Models (LLMs) – Developing a gloss chat agent.

- Finger Spelling/Gloss gesture with Robot Hand/Arm-Hand

  - Human Gesture Imitation

  - Behavior Cloning

  - Offline Reinforcement Learning

- Software Engineering – Create a seamless human-robot interaction framework using sign language.

  - Develop a ROS-2 framework

  - Develop a digital twin of the robot in simulation

- Human-Robot Interaction Evaluation – Evaluate the developed methods and adopt those that yield the most human-like interaction with a robotic signer.
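
To illustrate how the elements listed above might connect, here is a minimal sketch in plain Python. It is not the project's actual framework: `SignPipeline`, `stub_recognizer`, `stub_agent`, and the `GLOSS:`/`LETTER:` gesture labels are hypothetical names chosen for illustration. The sketch assumes that the recognizer outputs a list of glosses, that the chat agent answers with glosses, and that the robot falls back to finger spelling for any gloss it cannot sign as a whole gesture.

```python
from dataclasses import dataclass
from typing import Callable, List, Sequence


@dataclass
class SignPipeline:
    """Hypothetical glue between the project elements; all names are illustrative."""
    recognize: Callable[[Sequence[object]], List[str]]  # camera frames -> glosses
    respond: Callable[[List[str]], List[str]]           # glosses -> reply glosses
    known_glosses: frozenset                            # glosses the robot can sign whole

    def plan(self, reply: List[str]) -> List[str]:
        """Turn reply glosses into gesture labels, finger spelling unknown glosses."""
        gestures: List[str] = []
        for gloss in reply:
            if gloss in self.known_glosses:
                gestures.append(f"GLOSS:{gloss}")
            else:
                # Assumption: unknown glosses are spelled letter by letter.
                gestures.extend(f"LETTER:{ch}" for ch in gloss if ch.isalpha())
        return gestures

    def step(self, frames: Sequence[object]) -> List[str]:
        """One interaction turn: recognize, respond, then plan robot gestures."""
        return self.plan(self.respond(self.recognize(frames)))


def stub_recognizer(frames: Sequence[object]) -> List[str]:
    # Placeholder for a CNN/Transformer model; pretends it saw the gloss HELLO.
    return ["HELLO"]


def stub_agent(glosses: List[str]) -> List[str]:
    # Placeholder for an LLM-based gloss chat agent.
    return ["HELLO", "BOB"] if glosses == ["HELLO"] else ["REPEAT"]


pipeline = SignPipeline(stub_recognizer, stub_agent, frozenset({"HELLO", "REPEAT"}))
gestures = pipeline.step(frames=[])
print(gestures)
```

In a real system, each callable would presumably be replaced by a trained model or a ROS-2 node, and the gesture labels would be mapped to motions of the robot hand, for example by a policy learned through behavior cloning.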