Ph.D. Student at the Montanuniversität Leoben

Short bio: Mr. Vedant Dave started at CPS on 23rd September 2021.

He received his Master's degree in Automation and Robotics from Technische Universität Dortmund in 2021, with a study focus on Robotics and Artificial Intelligence. His thesis, entitled “Model-agnostic Reinforcement Learning Solution for Autonomous Programming of Robotic Motion”, was carried out at Mercedes-Benz AG. In the thesis, he implemented reinforcement learning for the motion planning of manipulators in complex environments. Before that, he completed a research internship at the Bosch Center for Artificial Intelligence, where he worked on Probabilistic Movement Primitives on Riemannian Manifolds.

Research Interests

- Information Theoretic Reinforcement Learning

- Robust Multimodal Representation Learning

- Unsupervised Skill Discovery

- Movement Primitives

Research Videos

Contact & Quick Links

M.Sc. Vedant Dave

Doctoral Student supervised by Univ.-Prof. Dr. Elmar Rueckert.

Montanuniversität Leoben

Franz-Josef-Straße 18,

8700 Leoben, Austria

Phone: +43 3842 402 – 1903

Email: vedant.dave@unileoben.ac.at

Web Work: CPS-Page

Chat: WEBEX

Publications

2025

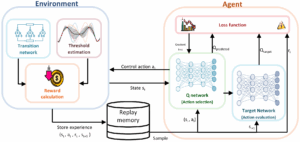

Keshavarz, Sahar; Elmgerbi, Asad; Dave, Vedant; Rückert, Elmar; Thonhauser, Gerhard: Deep reinforcement learning for automated decision-making in wellbore construction. Journal Article. In: Energy Reports, vol. 14, pp. 3514-3528, 2025, ISSN: 2352-4847.

The drilling industry continuously seeks cost reduction through improved efficiency, with automation viewed as a key enabler. However, due to the complexity of drilling operations, uncertainty in subsurface conditions, and limitations in real-time data, achieving reliable autonomy remains a major challenge. While physics-based models support automation, they often face limitations under real-time constraints and may struggle to adapt effectively in the presence of uncertain or incomplete data. This study contributes to automation efforts by employing Reinforcement Learning (RL) to model hole conditioning, an essential part of drilling operations. Using a Q-learning approach, the RL agent optimizes operational decisions in real time, adapting based on environmental feedback. This artificial intelligence (AI)-driven agent identifies the ideal sequence of actions for circulation, reaming, and washing, maximizing operational safety and efficiency by aligning with target parameters while navigating operational constraints. The RL model decisions were benchmarked against real-case actions, demonstrating that the agent strategy can outperform expert choices in several areas. Specifically, the RL model provided better solutions in three key examples: avoiding poor hole cleaning, lowering the operational time, and preventing wellbore stability issues. The proposed system contributes to the growing body of research applying deep reinforcement learning for automated hole conditioning, representing an innovative engineering application for AI.

This approach not only enhances real-time decision-making capabilities but also establishes a foundation for further automation in well construction, integrating engineering requirements with advanced AI-driven strategies. Through the combination of AI and practical engineering design, this work advances both automation and safety in drilling operations, signaling a promising step forward for future developments in wellbore construction.

Dave, Vedant; Özdenizci, Ozan; Rückert, Elmar: Learning Robust Representations for Visual Reinforcement Learning via Task-Relevant Mask Sampling. Journal Article. In: Transactions on Machine Learning Research, 2025.

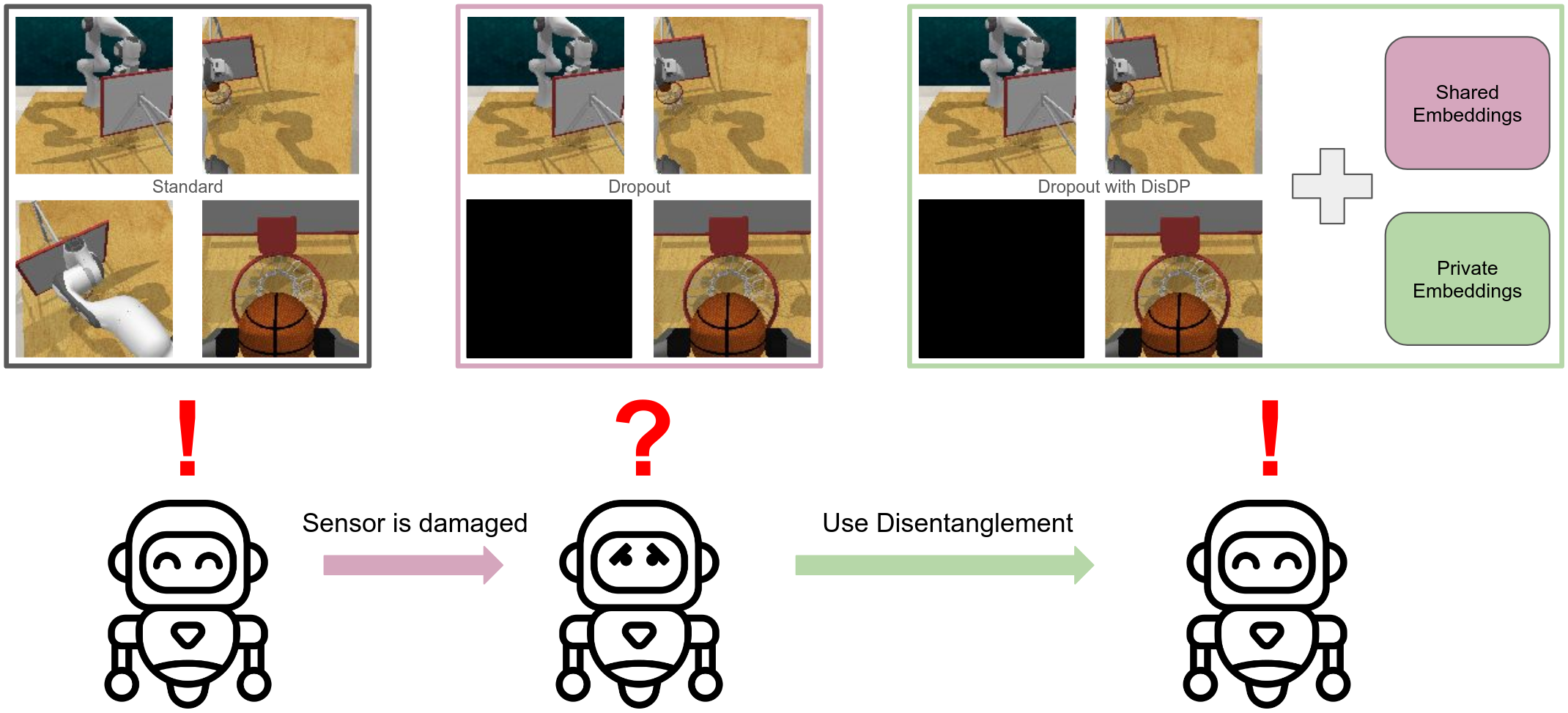

Vanjani, Pankhuri; Mattes, Paul; Li, Maximilian Xiling; Dave, Vedant; Lioutikov, Rudolf: DisDP: Robust Imitation Learning via Disentangled Diffusion Policies. Proceedings Article. In: Reinforcement Learning Conference (RLC), Reinforcement Learning Journal, 2025.

Dave, Vedant; Rueckert, Elmar: Skill Disentanglement in Reproducing Kernel Hilbert Space. Proceedings Article. In: Proceedings of the AAAI Conference on Artificial Intelligence (AAAI), pp. 16153-16162, 2025.

Unsupervised Skill Discovery aims to learn diverse skills without any extrinsic rewards and to leverage them as a prior for learning a variety of downstream tasks. Existing approaches to unsupervised reinforcement learning typically involve discovering skills through empowerment-driven techniques or by maximizing entropy to encourage exploration. However, this mutual information objective often results in either static skills that discourage exploration or skills that maximise coverage at the expense of discriminability. Instead of focusing only on maximizing bounds on f-divergence, we combine it with Integral Probability Metrics to maximize the distance between distributions to promote behavioural diversity and enforce disentanglement. Our method, Hilbert Unsupervised Skill Discovery (HUSD), provides an additional objective that seeks to obtain exploration and separability of state-skill pairs by maximizing the Maximum Mean Discrepancy between the joint distribution of skills and states and the product of their marginals in Reproducing Kernel Hilbert Space. Our results on the Unsupervised RL Benchmark show that HUSD outperforms previous exploration algorithms on state-based tasks.

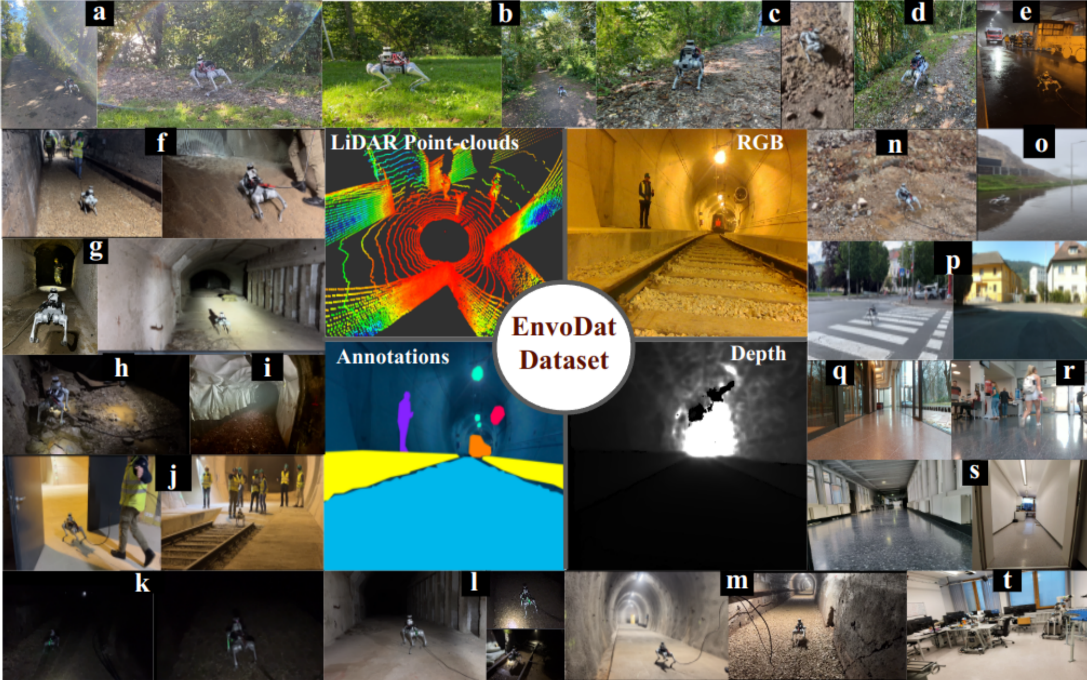

Nwankwo, Linus; Ellensohn, Bjoern; Dave, Vedant; Hofer, Peter; Forstner, Jan; Villneuve, Marlene; Galler, Robert; Rueckert, Elmar: EnvoDat: A Large-Scale Multisensory Dataset for Robotic Spatial Awareness and Semantic Reasoning in Heterogeneous Environments. Proceedings Article. In: IEEE International Conference on Robotics and Automation (ICRA 2025), 2025.

2024

Dave, Vedant; Rueckert, Elmar: Denoised Predictive Imagination: An Information-theoretic approach for learning World Models. Conference. European Workshop on Reinforcement Learning (EWRL), 2024.

Humans excel at isolating relevant information from noisy data to predict the behavior of dynamic systems, effectively disregarding non-informative, temporally-correlated noise. In contrast, existing reinforcement learning algorithms face challenges in generating noise-free predictions within high-dimensional, noise-saturated environments, especially when trained on world models featuring realistic background noise extracted from natural video streams. We propose a novel information-theoretic approach that learns world models by minimising the information retained about the past while retaining maximal information about the future, aiming at simultaneously learning control policies and producing denoised predictions. Utilizing Soft Actor-Critic agents augmented with an information-theoretic auxiliary loss, we validate our method's effectiveness on complex variants of the standard DeepMind Control Suite tasks, where natural videos filled with intricate and task-irrelevant information serve as a background. Experimental results demonstrate that our model outperforms nine state-of-the-art approaches in various settings where natural videos serve as dynamic background noise. Our analysis also reveals that all these methods encounter challenges in more complex environments.

Lygerakis, Fotios; Dave, Vedant; Rueckert, Elmar: M2CURL: Sample-Efficient Multimodal Reinforcement Learning via Self-Supervised Representation Learning for Robotic Manipulation. Proceedings Article. In: IEEE International Conference on Ubiquitous Robots (UR 2024), IEEE, 2024.

Dave*, Vedant; Lygerakis*, Fotios; Rueckert, Elmar: Multimodal Visual-Tactile Representation Learning through Self-Supervised Contrastive Pre-Training. Proceedings Article. In: IEEE International Conference on Robotics and Automation (ICRA), pp. 8013-8020, IEEE, 2024, ISBN: 979-8-3503-8457-4, (* equal contribution).

The rapidly evolving field of robotics necessitates methods that can facilitate the fusion of multiple modalities. Specifically, when it comes to interacting with tangible objects, effectively combining visual and tactile sensory data is key to understanding and navigating the complex dynamics of the physical world, enabling a more nuanced and adaptable response to changing environments. Nevertheless, much of the earlier work in merging these two sensory modalities has relied on supervised methods utilizing datasets labeled by humans. This paper introduces MViTac, a novel methodology that leverages contrastive learning to integrate vision and touch sensations in a self-supervised fashion. By availing both sensory inputs, MViTac leverages intra and inter-modality losses for learning representations, resulting in enhanced material property classification and more adept grasping prediction. Through a series of experiments, we showcase the effectiveness of our method and its superiority over existing state-of-the-art self-supervised and supervised techniques. In evaluating our methodology, we focus on two distinct tasks: material classification and grasping success prediction. Our results indicate that MViTac facilitates the development of improved modality encoders, yielding more robust representations as evidenced by linear probing assessments.

https://sites.google.com/view/mvitac/home

2022

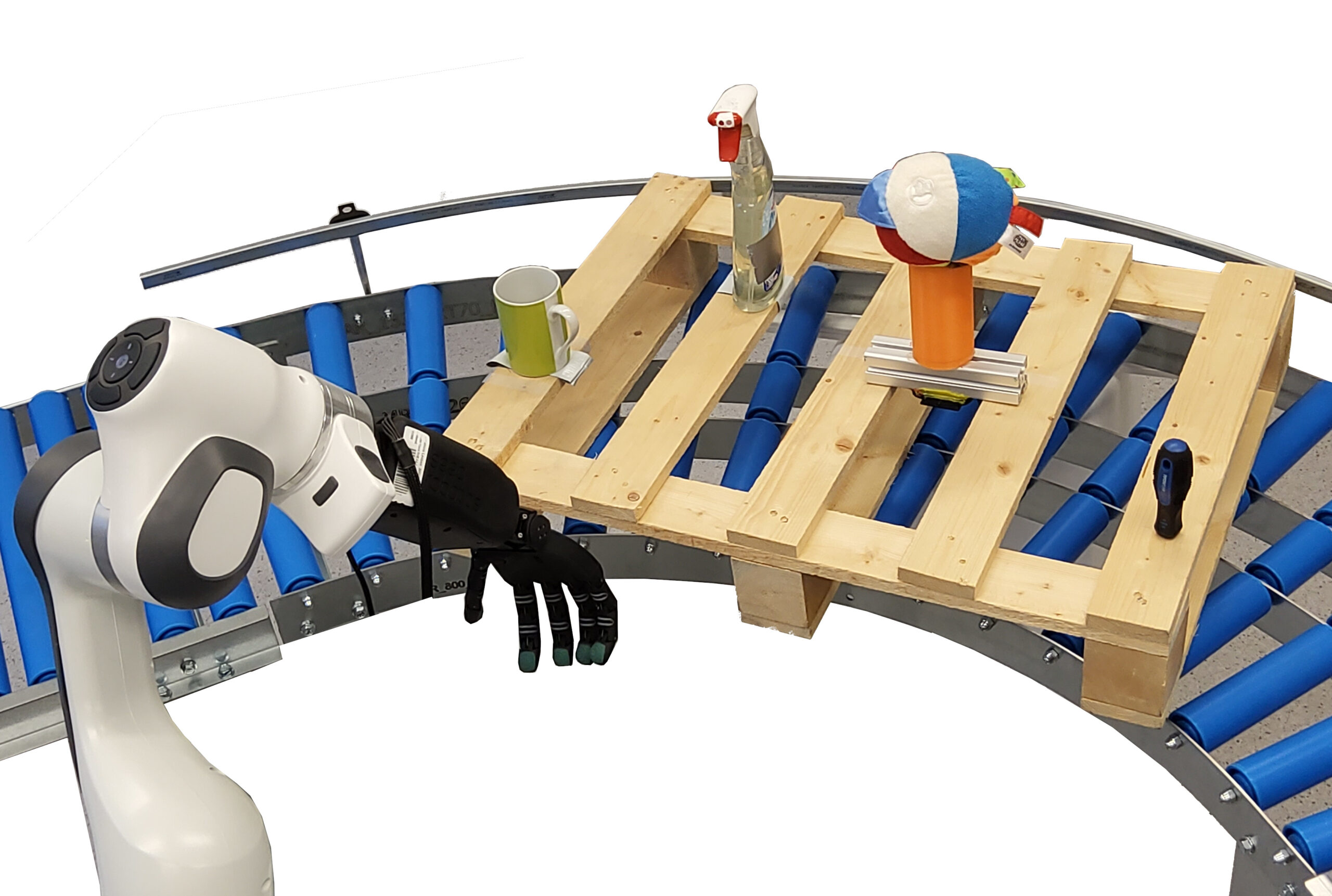

Dave, Vedant; Rueckert, Elmar: Can we infer the full-arm manipulation skills from tactile targets? Conference. Workshop paper at the International Conference on Humanoids, WS title: Advances in Close Proximity Human-Robot Collaboration, International Conference on Humanoid Robots (Humanoids), 2022.

Tactile sensing provides significant information about the state of the environment for performing manipulation tasks. Manipulation skills depend on the desired initial contact points between the object and the end-effector. Based on the physical properties of the object, this contact results in distinct tactile responses. We propose Tactile Probabilistic Movement Primitives (TacProMPs) to learn a highly non-linear relationship between the desired tactile responses and the full-arm movement, where we condition solely on the tactile responses to infer the complex manipulation skills. We use a Gaussian mixture model of primitives to address the multimodality in demonstrations. We demonstrate the performance of our method in challenging real-world scenarios.

Dave, Vedant; Rueckert, Elmar: Predicting full-arm grasping motions from anticipated tactile responses. Proceedings Article. In: International Conference on Humanoid Robots (Humanoids), pp. 464-471, IEEE, 2022, ISBN: 979-8-3503-0979-9.

Tactile sensing provides significant information about the state of the environment for performing manipulation tasks. Depending on the physical properties of the object, manipulation tasks can exhibit large variation in their movements. For a grasping task, the movement of the arm and of the end effector varies depending on different points of contact on the object, especially if the object is non-homogeneous in hardness and/or has an uneven geometry. In this paper, we propose Tactile Probabilistic Movement Primitives (TacProMPs) to learn a highly non-linear relationship between the desired tactile responses and the full-arm movement. We condition solely on the tactile responses to infer the complex manipulation skills. We formulate a joint trajectory of full-arm joints with tactile data, leverage the model to condition on the desired tactile response from the non-homogeneous object, and infer the full-arm (7-DoF Panda arm and 19-DoF gripper hand) motion. We use a Gaussian Mixture Model of primitives to address the multimodality in demonstrations. We also show that the measurement noise adjustment must be taken into account due to multiple systems working in collaboration. We validate and show the robustness of the approach through two experiments. First, we consider an object with non-uniform hardness. Grasping from different locations requires different motions and results in different tactile responses. Second, we have an object with homogeneous hardness, but we grasp it with widely varying grasping configurations. Our results show that TacProMPs can successfully model complex multimodal skills and generalise to new situations.

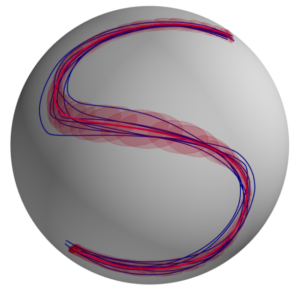

Rozo*, Leonel; Dave*, Vedant: Orientation Probabilistic Movement Primitives on Riemannian Manifolds. Proceedings Article. In: Conference on Robot Learning (CoRL), pp. 11, 2022, (* equal contribution).

Learning complex robot motions necessarily demands models that are able to encode and retrieve full-pose trajectories when tasks are defined in operational spaces. Probabilistic movement primitives (ProMPs) stand out as a principled approach that models trajectory distributions learned from demonstrations. ProMPs allow for trajectory modulation and blending to achieve better generalization to novel situations. However, when ProMPs are employed in operational space, their original formulation does not directly apply to full-pose movements including rotational trajectories described by quaternions. This paper proposes a Riemannian formulation of ProMPs that enables encoding and retrieving of quaternion trajectories. Our method builds on Riemannian manifold theory and exploits multilinear geodesic regression for estimating the ProMPs parameters. This novel approach makes ProMPs a suitable model for learning complex full-pose robot motion patterns. Riemannian ProMPs are tested on toy examples to illustrate their workflow, and on real learning-from-demonstration experiments.