Publication List with Images

2025

Dave, Vedant; Rueckert, Elmar: Skill Disentanglement in Reproducing Kernel Hilbert Space. Proceedings Article. In: Proceedings of the AAAI Conference on Artificial Intelligence (AAAI), pp. 16153-16162, 2025.
Tags: Deep Learning, neural network, Reinforcement Learning, Skill Discovery, Unsupervised Learning

Abstract: Unsupervised Skill Discovery aims to learn diverse skills without any extrinsic rewards and to leverage them as a prior for learning a variety of downstream tasks. Existing approaches to unsupervised reinforcement learning typically discover skills through empowerment-driven techniques or by maximizing entropy to encourage exploration. However, this mutual information objective often results in either static skills that discourage exploration or skills that maximize coverage at the expense of discriminability. Instead of focusing only on maximizing bounds on f-divergence, we combine this objective with Integral Probability Metrics to maximize the distance between distributions, promoting behavioural diversity and enforcing disentanglement. Our method, Hilbert Unsupervised Skill Discovery (HUSD), provides an additional objective that promotes exploration and separability of state-skill pairs by maximizing the Maximum Mean Discrepancy between the joint distribution of skills and states and the product of their marginals in Reproducing Kernel Hilbert Space. Our results on the Unsupervised RL Benchmark show that HUSD outperforms previous exploration algorithms on state-based tasks.

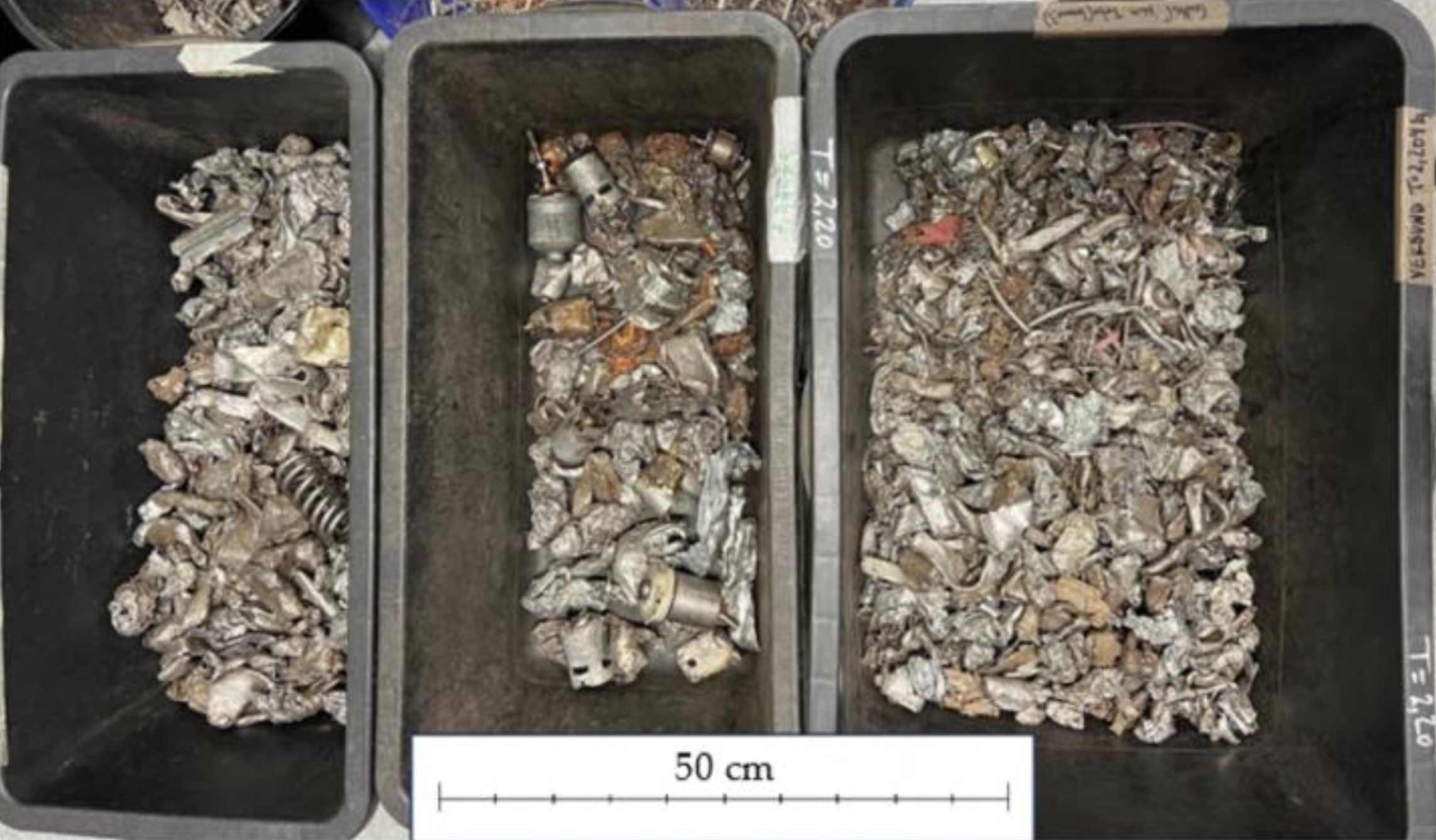

Koinig, Gerald; Neubauer, Melanie; Martinelli, Walter; Radmann, Yves; Kuhn, Nikolai; Fink, Thomas; Rueckert, Elmar; Tischberger-Aldrian, Alexia: CNN-based copper reduction in shredded scrap for enhanced electric arc furnace steelmaking. Proceedings Article. In: International Conference on Optical Characterization of Materials (OCM 2025), pp. 319-328, 2025, ISBN: 9783731514084.
Tags: Applied Deep Learning, neural network, Recycling
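The HUSD abstract above describes an objective based on the Maximum Mean Discrepancy (MMD) between the joint distribution of states and skills and the product of their marginals. The Python sketch below illustrates only that quantity. It is not the authors' HUSD implementation. It assumes a Gaussian kernel with a fixed bandwidth, the biased (V-statistic) MMD² estimator, and a random permutation of the skills to draw approximate samples from the product of marginals. The function names and the toy data are hypothetical.

```python
import numpy as np


def gaussian_kernel(a, b, bandwidth=1.0):
    """Gaussian (RBF) kernel matrix between the rows of a and the rows of b."""
    sq_dists = (
        np.sum(a**2, axis=1)[:, None]
        + np.sum(b**2, axis=1)[None, :]
        - 2.0 * a @ b.T
    )
    # Clip tiny negative values caused by floating-point round-off.
    return np.exp(-np.maximum(sq_dists, 0.0) / (2.0 * bandwidth**2))


def mmd2_biased(x, y, bandwidth=1.0):
    """Biased (V-statistic) estimate of the squared MMD between samples x and y."""
    k_xx = gaussian_kernel(x, x, bandwidth).mean()
    k_yy = gaussian_kernel(y, y, bandwidth).mean()
    k_xy = gaussian_kernel(x, y, bandwidth).mean()
    return k_xx + k_yy - 2.0 * k_xy


def joint_vs_marginals_mmd2(states, skills, bandwidth=1.0, seed=None):
    """Squared MMD between samples of p(s, z) and approximate samples of p(s) p(z)."""
    rng = np.random.default_rng(seed)
    joint = np.concatenate([states, skills], axis=1)
    # Permuting the skills breaks the state-skill pairing, which approximates
    # sampling from the product of the marginals (an assumption of this sketch).
    shuffled = skills[rng.permutation(len(skills))]
    product = np.concatenate([states, shuffled], axis=1)
    return mmd2_biased(joint, product, bandwidth)


# Toy data (hypothetical): 4 one-hot skills, 2-D states.
rng = np.random.default_rng(0)
n, k = 200, 4
skill_ids = rng.integers(0, k, size=n)
skills = np.eye(k)[skill_ids]
centers = np.array([[0.0, 0.0], [5.0, 0.0], [0.0, 5.0], [5.0, 5.0]])

# Discriminable skills: each skill visits its own region of the state space.
states_dep = centers[skill_ids] + 0.1 * rng.normal(size=(n, 2))
# Non-discriminable skills: states ignore the skill entirely.
states_ind = rng.normal(size=(n, 2))

mmd_dep = joint_vs_marginals_mmd2(states_dep, skills, seed=1)
mmd_ind = joint_vs_marginals_mmd2(states_ind, skills, seed=1)
print(f"dependent: {mmd_dep:.4f}, independent: {mmd_ind:.4f}")
```

A Gaussian kernel on the concatenated state-skill vector factorizes into a product of Gaussian kernels on states and on skills. This makes the quantity closely related to the Hilbert-Schmidt Independence Criterion. It stays near zero when states carry no information about the skill, and it grows when different skills visit separable regions of the state space.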

2020

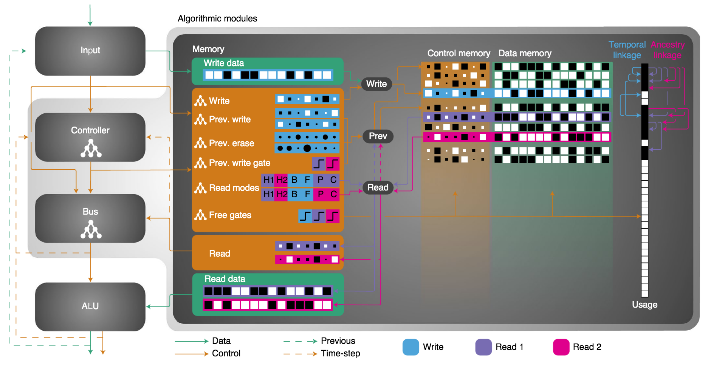

Tanneberg, Daniel; Rueckert, Elmar; Peters, Jan: Evolutionary training and abstraction yields algorithmic generalization of neural computers. Journal Article. In: Nature Machine Intelligence, pp. 1–11, 2020.
Tags: neural network, Reinforcement Learning, Transfer Learning

2019

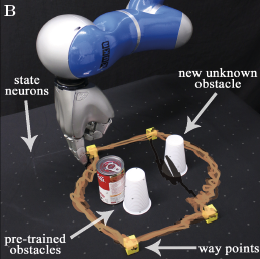

Tanneberg, Daniel; Peters, Jan; Rueckert, Elmar: Intrinsic Motivation and Mental Replay enable Efficient Online Adaptation in Stochastic Recurrent Networks. Journal Article. In: Neural Networks – Elsevier, vol. 109, pp. 67-80, 2019, ISSN: 0893-6080 (Impact Factor of 7.197 (2017)).
Tags: neural network, Probabilistic Inference, RNN, spiking

2018

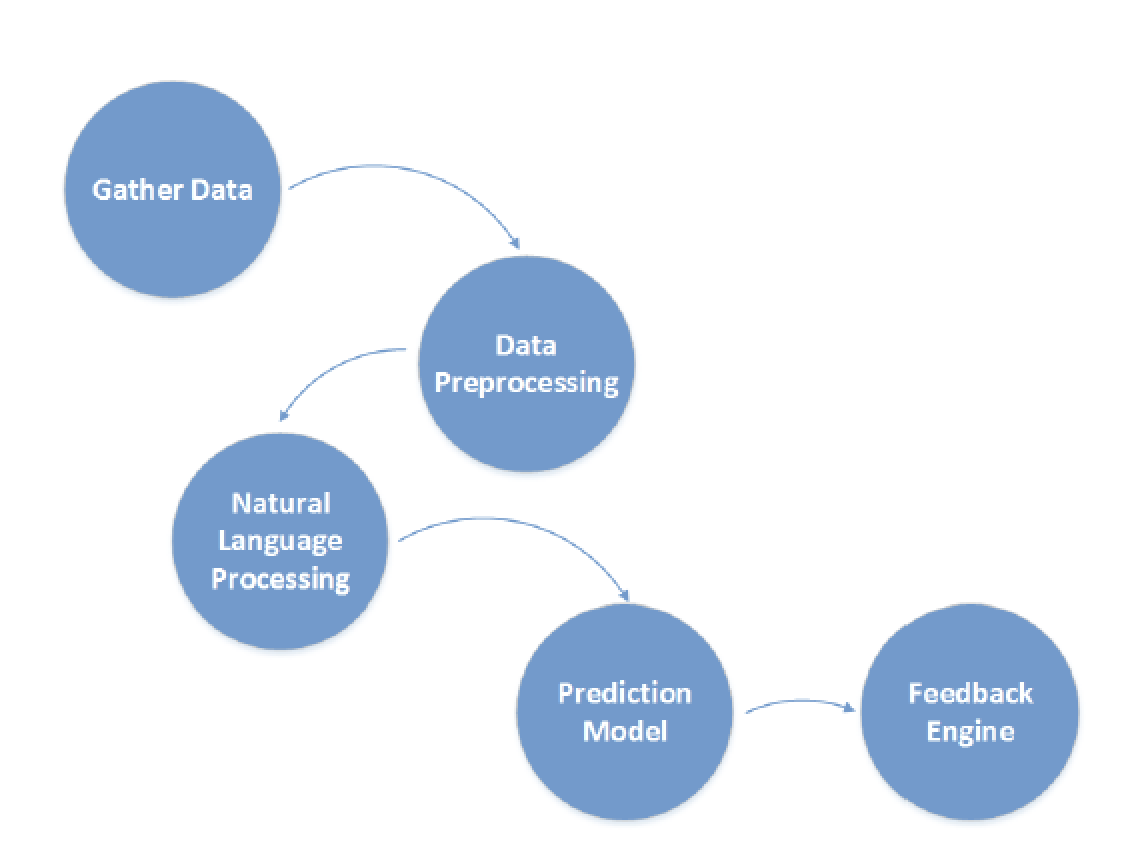

Gondaliya, Kaushikkumar D.; Peters, Jan; Rueckert, Elmar: Learning to Categorize Bug Reports with LSTM Networks. Proceedings Article. In: Proceedings of the International Conference on Advances in System Testing and Validation Lifecycle (VALID), pp. 6, XPS (Xpert Publishing Services), Nice, France, 2018, ISBN: 978-1-61208-671-2 (October 14-18, 2018).
Tags: Natural Language Processing, neural network, RNN

2015

Calandra, Roberto; Ivaldi, Serena; Deisenroth, Marc; Rueckert, Elmar; Peters, Jan: Learning Inverse Dynamics Models with Contacts. Proceedings Article. In: Proceedings of the International Conference on Robotics and Automation (ICRA), 2015.
Tags: inverse dynamics, model learning, neural network

Compact List without Images

Journal Articles

Tanneberg, Daniel; Rueckert, Elmar; Peters, Jan: Evolutionary training and abstraction yields algorithmic generalization of neural computers. Journal Article. In: Nature Machine Intelligence, pp. 1–11, 2020.

Tanneberg, Daniel; Peters, Jan; Rueckert, Elmar: Intrinsic Motivation and Mental Replay enable Efficient Online Adaptation in Stochastic Recurrent Networks. Journal Article. In: Neural Networks – Elsevier, vol. 109, pp. 67-80, 2019, ISSN: 0893-6080 (Impact Factor of 7.197 (2017)).

Proceedings Articles

Dave, Vedant; Rueckert, Elmar: Skill Disentanglement in Reproducing Kernel Hilbert Space. Proceedings Article. In: Proceedings of the AAAI Conference on Artificial Intelligence (AAAI), pp. 16153-16162, 2025.

Koinig, Gerald; Neubauer, Melanie; Martinelli, Walter; Radmann, Yves; Kuhn, Nikolai; Fink, Thomas; Rueckert, Elmar; Tischberger-Aldrian, Alexia: CNN-based copper reduction in shredded scrap for enhanced electric arc furnace steelmaking. Proceedings Article. In: International Conference on Optical Characterization of Materials (OCM 2025), pp. 319-328, 2025, ISBN: 9783731514084.

Gondaliya, Kaushikkumar D.; Peters, Jan; Rueckert, Elmar: Learning to Categorize Bug Reports with LSTM Networks. Proceedings Article. In: Proceedings of the International Conference on Advances in System Testing and Validation Lifecycle (VALID), pp. 6, XPS (Xpert Publishing Services), Nice, France, 2018, ISBN: 978-1-61208-671-2 (October 14-18, 2018).

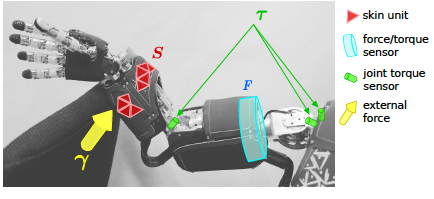

Calandra, Roberto; Ivaldi, Serena; Deisenroth, Marc; Rueckert, Elmar; Peters, Jan: Learning Inverse Dynamics Models with Contacts. Proceedings Article. In: Proceedings of the International Conference on Robotics and Automation (ICRA), 2015.