Abstract

The need for efficient and compact representations of sensory data, such as visual and textual data, has grown significantly with the exponential growth in the size and complexity of such data. Self-supervised learning techniques, such as autoencoders, contrastive learning, and transformers, have shown significant promise in learning these representations from large unlabeled datasets. This research aims to develop novel self-supervised learning techniques inspired by these approaches to improve the quality and efficiency of unsupervised representation learning.

Description

The study will begin with a review of state-of-the-art self-supervised learning techniques and their applications in various domains, including computer vision and natural language processing. Next, a set of experiments will be conducted to develop and evaluate the proposed techniques on standard datasets in these domains.

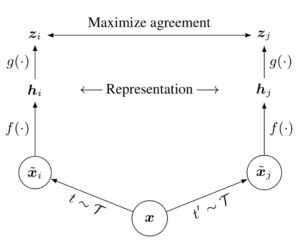

The experiments will focus on learning compact and efficient representations of sensory data using autoencoder-based techniques, contrastive learning, and transformer-based approaches. The performance of the proposed techniques will be evaluated based on their ability to improve the accuracy and efficiency of unsupervised representation learning tasks.
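To give a flavour of the contrastive learning mentioned above, the following is a minimal sketch of an InfoNCE-style loss in plain Python. It is only an illustration under stated assumptions, not the method this project will use: the function names `cosine_similarity` and `info_nce_loss`, the temperature value of 0.1, and the toy two-dimensional embeddings are all hypothetical choices made for this example.

```python
import math


def cosine_similarity(u, v):
    """Cosine similarity between two equal-length, non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def info_nce_loss(anchors, positives, temperature=0.1):
    """Mean InfoNCE-style loss over a batch (illustrative sketch).

    anchors[i] and positives[i] are embeddings of two views of the same
    sample; every positives[j] with j != i serves as a negative for
    anchors[i]. The temperature of 0.1 is an assumed default.
    """
    losses = []
    for i, anchor in enumerate(anchors):
        logits = [cosine_similarity(anchor, p) / temperature for p in positives]
        # Numerically stable log-sum-exp over all candidates.
        max_logit = max(logits)
        log_sum_exp = max_logit + math.log(
            sum(math.exp(logit - max_logit) for logit in logits)
        )
        # Cross-entropy with the matching positive as the correct class.
        losses.append(log_sum_exp - logits[i])
    return sum(losses) / len(losses)


# Toy embeddings: each anchor matches its own positive exactly.
aligned = [[1.0, 0.0], [0.0, 1.0]]
print(info_nce_loss(aligned, aligned))        # matched pairs: low loss
print(info_nce_loss(aligned, aligned[::-1]))  # mismatched pairs: high loss
```

The loss is small when each embedding is closest to its own positive and large when the pairs are mismatched, which is the signal that pushes an encoder towards representations that distinguish samples without labels.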

The research will also investigate the impact of different factors such as the choice of loss functions, model architecture, and hyperparameters on the performance of the proposed techniques. The insights gained from this study will help in developing guidelines for selecting appropriate self-supervised learning techniques for efficient and compact representation learning.

Overall, this research will contribute to the development of novel self-supervised learning techniques for efficient and compact representation learning of sensory data. The proposed techniques will have potential applications in various domains, including computer vision, natural language processing, and other sensory data analysis tasks.

Qualifications

- Currently pursuing a Bachelor’s or Master’s degree in Computer Science, Electrical Engineering, Mechanical Engineering, Mathematics, or related fields.

- Strong programming skills in Python.

- Experience with deep learning frameworks such as PyTorch or TensorFlow.

- Good written and verbal communication skills in English.

- (optional) Familiarity with unsupervised learning techniques such as contrastive learning, self-supervised learning, and generative models.

Interested?

If this topic excites you, please contact Fotios Lygerakis by email at fotios.lygerakis@unileoben.ac.at or simply visit us at our chair in the Metallurgie building, 1st floor.