Are you fascinated by the intricate dance of robots and objects? Do you dream of pushing the boundaries of robotic manipulation? If so, this internship is your chance to dive into the heart of robotic innovation!

You can work on this project either as a B.Sc. or M.Sc. thesis or as an internship.

Job Description

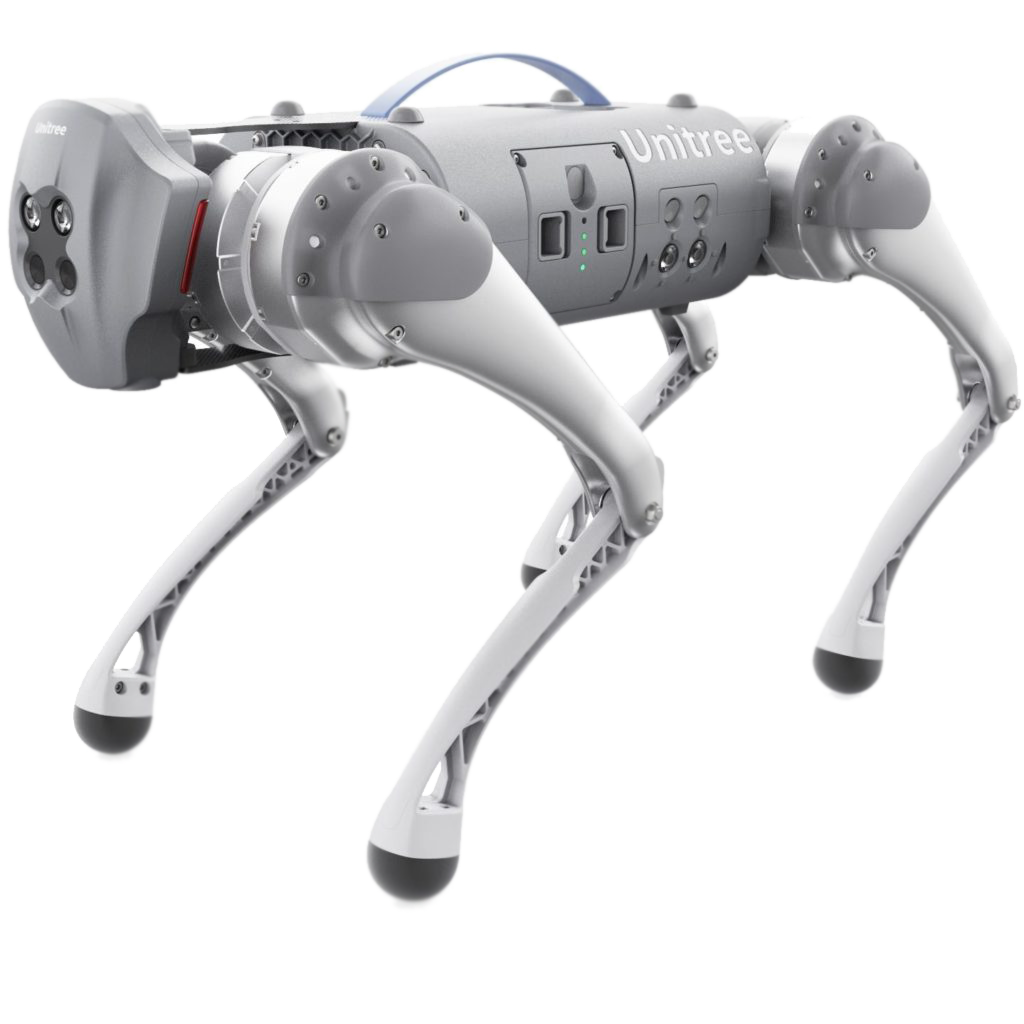

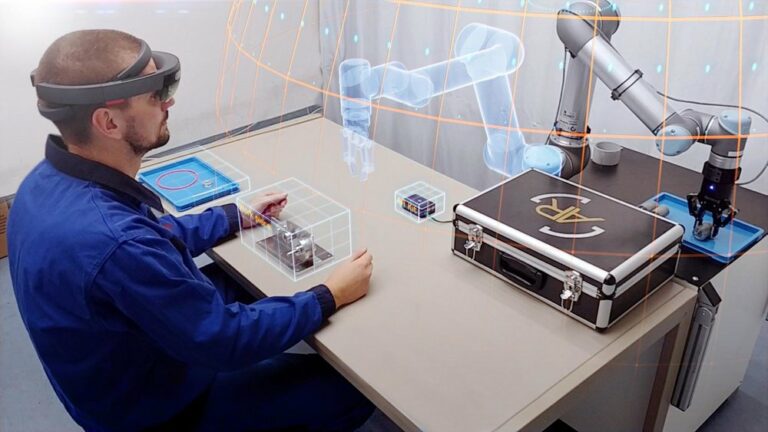

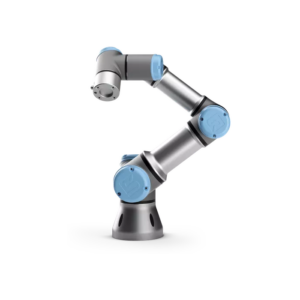

This internship offers a unique opportunity to explore the exciting world of robot learning. You’ll join our team, working alongside cutting-edge robots such as the UR3 and Franka Emika cobots and advanced grippers such as the 2F Adaptive Gripper (Robotiq), the dexterous RH8D Seed Robotics Hand and the LEAP hand. Working with grippers equipped with tactile sensors, you’ll apply Deep Learning methods to grasping, manipulation, and interaction with diverse objects.

Start date: Open

Keywords:

- Robot learning

- Robotic manipulation

- Reinforcement Learning

- Sim2Real

- Robot Teleoperation

- Imitation Learning

- Deep learning

- Research

Responsibilities

- Collaborate with researchers to develop and implement novel robotic manipulation learning algorithms in simulation and in the real world.

- Gain hands-on experience programming and controlling robots like the UR3 and Franka Emika cobots.

- Experiment with various grippers like the 2F Adaptive Gripper, the RH8D Seed Robotics Hand and the LEAP hand, exploring their functionalities.

- Develop data fusion methods for vision and tactile sensing.

- Participate in research activities, including data collection, analysis, and documentation.

- Contribute to the development of presentations and reports to effectively communicate research findings.

Qualifications

- Currently pursuing a Bachelor’s or Master’s degree in Computer Science, Electrical Engineering, Mechanical Engineering, Mathematics or related fields.

- Solid foundation in robotics fundamentals (kinematics, dynamics, control theory).

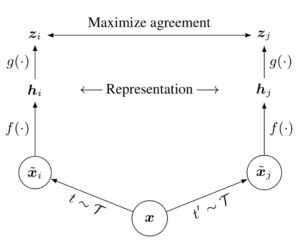

- Solid foundation in machine learning concepts (e.g., supervised learning, unsupervised learning, reinforcement learning, neural networks).

- Strong programming skills in Python and experience with deep learning frameworks such as PyTorch or TensorFlow.

- Excellent analytical and problem-solving skills.

- Effective communication and collaboration skills to work seamlessly within the research team.

- Good written and verbal communication skills in English.

- (Optional) Prior experience with robotic systems and published work.

Opportunities and Benefits of the Internship

- Gain invaluable hands-on experience with state-of-the-art robots and grippers.

- Work alongside other researchers at the forefront of robot learning.

- Develop your skills in representation learning, reinforcement learning, robot learning and robotics.

- Contribute to novel research that advances the capabilities of robotic manipulation.

- Build your resume and gain experience in a dynamic and exciting field.

Application

Send your CV together with a letter of motivation to fotios.lygerakis@unileoben.ac.at with the subject line “Internship Application | Robot Learning”.

Funding

We will support you in applying for an internship grant. Relevant grant programs are listed below.

CEEPUS Grant (European, for undergraduate and graduate students)

Find details on the Central European Exchange Program for University Studies (CEEPUS) at https://grants.at/en/ or at https://www.ceepus.info.

In principle, you can apply for a scholarship at any time. However, your country of origin also matters: there are networks of several countries that have their own scholarship quotas.

Ernst Mach Grant (Worldwide for PhDs and Seniors)

Find details on the program at https://grants.at/en/ or at https://oead.at/en/to-austria/grants-and-scholarships/ernst-mach-grant.

Other Funding Resources

Apply online at http://www.scholarships.at/