Author: Linus Nwankwo

M.Sc. Thesis – Bernd Burghauser: Benchmarking SLAM and supervised learning methods in challenging real-world environments.

Supervisor: Linus Nwankwo, M.Sc.;

Univ.-Prof. Dr Elmar Rückert

Start date: ASAP, e.g., 1st of October 2021

Theoretical difficulty: low

Practical difficulty: high

Introduction

The SLAM problem, as described in [3], is the problem of building a map of the environment while simultaneously estimating the robot’s position relative to that map from noisy sensor observations. Because both the robot’s motions and its observations are uncertain, the problem is usually formulated probabilistically using Bayes’ rule.
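In its online form, the Bayes formulation is commonly written as a recursive filter over the current pose and the map. The standard textbook recursion is shown below for illustration. The notation is ours rather than the thesis’s: $x_t$ is the robot pose at time $t$, $m$ the map, $z_{1:t}$ the observations, $u_{1:t}$ the control inputs, and $\eta$ a normalising constant. The measurement model $p(z_t \mid x_t, m)$ corrects the prediction made with the motion model $p(x_t \mid x_{t-1}, u_t)$.

$$
p(x_t, m \mid z_{1:t}, u_{1:t}) = \eta \, p(z_t \mid x_t, m) \int p(x_t \mid x_{t-1}, u_t) \, p(x_{t-1}, m \mid z_{1:t-1}, u_{1:t-1}) \, dx_{t-1}
$$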

SLAM has found applications not only in navigation, augmented reality, and autonomous vehicles (e.g., self-driving cars and drones) but also in indoor and outdoor delivery robots, intelligent warehousing, etc. Although many solutions to the SLAM problem have been presented in the literature, the reality is far different in challenging real-world scenarios, for example environments with few distinctive features or with constrained geometry.

Some of the most common challenges in SLAM are the following:

- the accumulation of errors over time due to inaccurate pose estimation (localization errors) as the robot moves from the start location to the goal location;
- the high computational cost of image processing, point-cloud processing, and optimization [1].

These challenges can cause significant deviations from the true values and lead to inaccurate localization if images and point clouds are not processed at a sufficiently high frequency [2]. They also reduce the rate at which the map is updated and hence the overall efficiency of the SLAM algorithm.

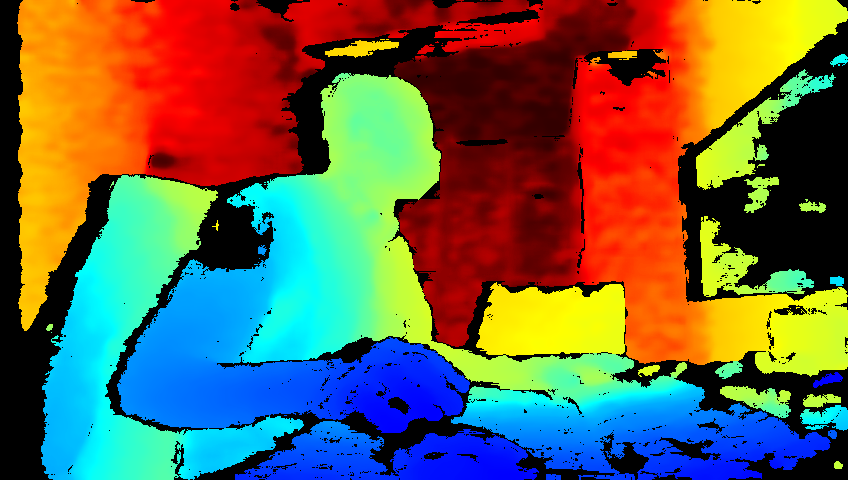

In this thesis, we propose an in-depth investigation of visual or LiDAR SLAM using our state-of-the-art Intel RealSense cameras and light detection and ranging (LiDAR) sensors. To this end, the following concrete tasks will be focused on:

Tentative Work Plan

- study the concept of visual or LiDAR-based SLAM and its application to surveying unknown environments.

- 2D/3D mapping in both static and dynamic environments.

- localise the robot in the environment using the adaptive Monte Carlo localization (AMCL) approach.

- write a path-planning algorithm that navigates the robot from the starting point to the destination while avoiding collisions with obstacles.

- real-time experimentation, simulation (MATLAB, ROS & Gazebo, Rviz, C/C++, Python, etc.), and validation.
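The path-planning task can be prototyped on a 2D occupancy grid before moving to ROS. The sketch below uses A* as one possible planner; the thesis does not prescribe a specific algorithm. The grid encoding (0 = free, 1 = occupied), the 4-connected unit-cost moves, and the function name `astar` are assumptions made for illustration.

```python
import heapq


def astar(grid, start, goal):
    """Return a list of (row, col) cells from start to goal, or None.

    grid: list of lists with 0 = free and 1 = obstacle (assumed encoding).
    Moves are 4-connected with unit cost, so the Manhattan distance is an
    admissible heuristic and the returned path is a shortest one.
    """
    rows, cols = len(grid), len(grid[0])

    def h(cell):
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    open_heap = [(h(start), 0, start)]  # entries: (f = g + h, g, cell)
    came_from = {start: None}
    g_cost = {start: 0}
    while open_heap:
        _, g, cell = heapq.heappop(open_heap)
        if cell == goal:
            # Walk back through the parent links to rebuild the path.
            path = []
            while cell is not None:
                path.append(cell)
                cell = came_from[cell]
            return path[::-1]
        if g > g_cost[cell]:
            continue  # stale heap entry, a cheaper route was already found
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == 0:
                ng = g + 1
                if ng < g_cost.get((nr, nc), float("inf")):
                    g_cost[(nr, nc)] = ng
                    came_from[(nr, nc)] = cell
                    heapq.heappush(open_heap, (ng + h((nr, nc)), ng, (nr, nc)))
    return None  # goal unreachable
```

In a ROS setup, the grid would come from the occupancy map produced by SLAM. The resulting cell path would then be converted to metric waypoints for the controller.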

About the Laboratory

- additive manufacturing unit (3D and laser printing technologies).

- metallic production workshop.

- robotics unit (mobile robots, robotic manipulators, robotic hands, unmanned aerial vehicles (UAV))

- sensors unit (Intel RealSense (LiDAR, depth and tracking cameras), inertial measurement units (IMUs), OptiTrack cameras, etc.)

- electronics and embedded systems unit (Arduino, Raspberry Pi, etc.)

Expression of Interest

Students interested in carrying out their Master of Science (M.Sc.) or Bachelor of Science (B.Sc.) thesis on the above topic should contact or visit the Chair of Cyber-Physical Systems as soon as possible.

Phone: +43 3842 402 – 1901


References

Localization and Mapping’, http://ais.informatik.uni-freiburg.de/teaching/ss12/robotics/slides/12-slam.pdf

Master Thesis

The final master thesis document can be downloaded here.

M.Sc. Thesis, Adiole Promise Emeziem: Language-Grounded Robot Autonomy through Large Language Models and Multimodal Perception

Supervisor: Linus Nwankwo, M.Sc.;

Univ.-Prof. Dr Elmar Rückert

Start date: As soon as possible

Theoretical difficulty: mid

Practical difficulty: High

Abstract

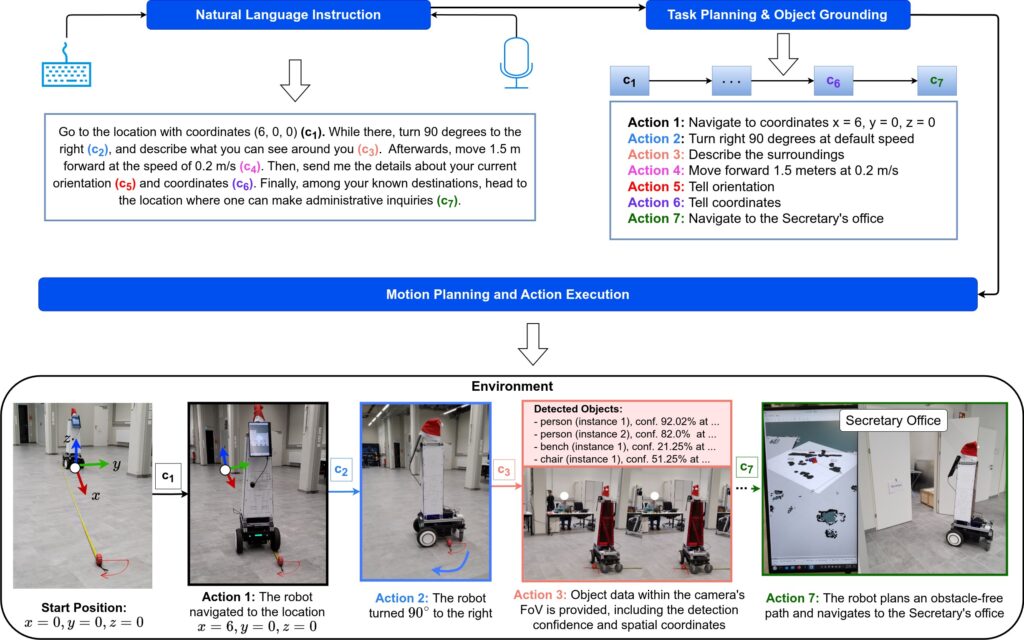

The goal of this thesis is to enhance the method proposed in [1] to enable autonomous robots to effectively interpret open-ended language commands, plan actions, and adapt to dynamic environments.

The scope is limited to grounding the semantic understanding of large-scale pre-trained language models and multimodal vision-language models in physical sensor data, enabling autonomous agents to execute complex, long-horizon tasks without task-specific programming. The expected outcomes include a unified framework for language-driven autonomy, a method for cross-modal alignment, and real-world validation.

Tentative Work Plan

To achieve the objectives, the following concrete tasks will be focused on:

- Background and Setup:

- Study LLM-for-robotics papers (e.g., ReLI [1], Code-as-Policies [2], ProgPrompt [3]) and vision-language models (e.g., CLIP, LLaVA).

- Set up a ROS/Isaac Sim simulation environment and build a robot model (URDF) for the simulation (optional if you wish to use an existing one).

- Familiarise yourself with how LLMs and VLMs can be grounded for short-horizon robotic tasks (e.g., “Move towards the {colour} block near the {object}”) in static environments.

- Recommended programming tools: C++, Python, Matlab.

- Modular Pipeline Design:

- Speech/Text (Task Instruction) ⇾ LLM (Task Planning) ⇾ CLIP (Object Grounding) ⇾ Motion Planner (e.g., move towards the {colour} block near the {object}) ⇾ Execution (In simulation or real-world environment).

- Intermediate Presentation:

- Present the results of your background study or the work you have done so far.

- Detailed planning of the next steps.

- Implementation & Real-World Testing (If Possible):

- Test the implemented pipeline with a Gazebo-simulated quadruped or differential drive robot.

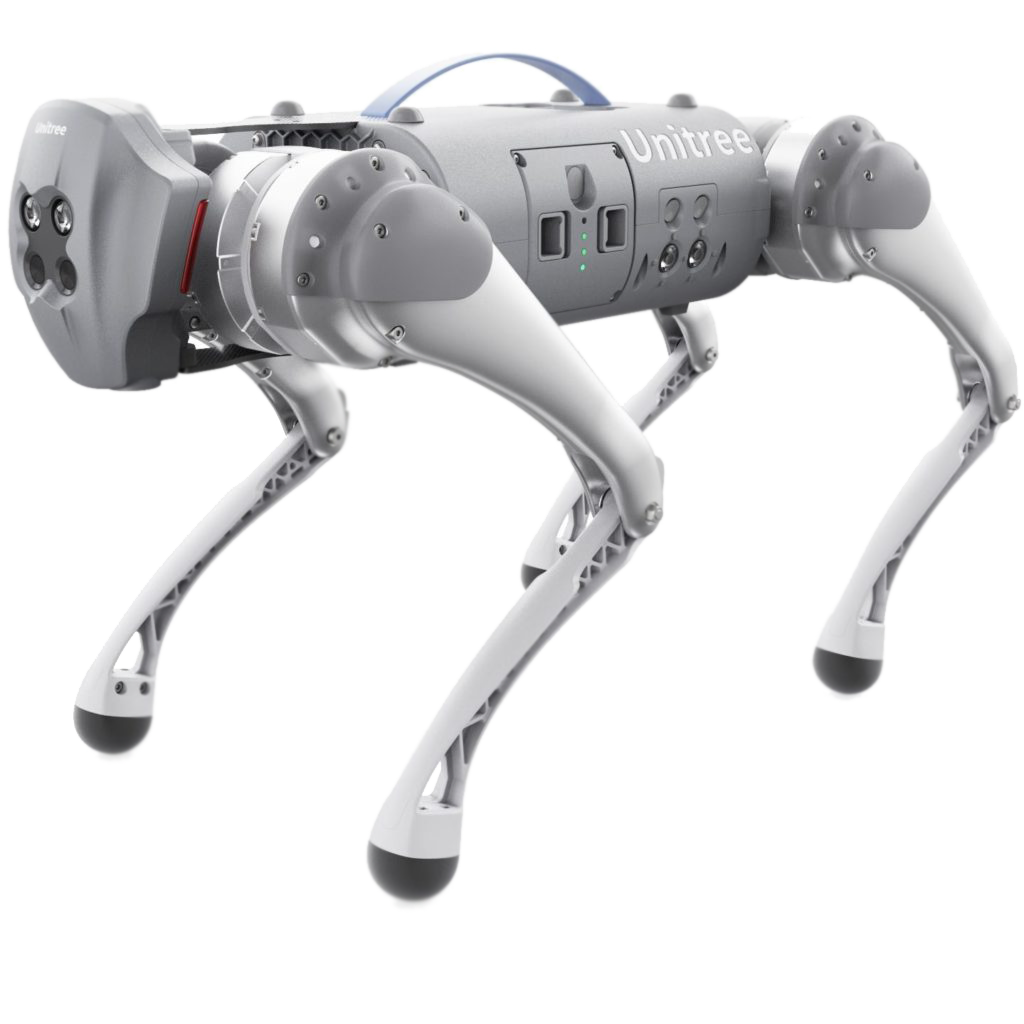

- Perform real-world testing of the developed framework with our Unitree Go1 quadruped robot or with our Segway RMP 220 Lite robot.

- Analyse and compare the model’s performance in real-world scenarios versus simulations across the different LLM and VLM pipelines.

- Validate with 50+ language commands in both simulation and the real world.

- Optimise the Pipeline for Optimal Performance and Efficiency (Optional):

- Evaluate the model to identify performance bottlenecks within the robot’s task environment.

- Documentation and Thesis Writing:

- Document the entire process, methodologies, and tools used.

- Analyse and interpret the results.

- Draft the thesis, ensuring that the primary objectives are achieved.

- Chapters: Introduction, Background (LLMs/VLMs in robotics), Methodology, Results, Conclusion.

- Deliverables: Code repository, simulation demo video, thesis document.

- Research Paper Writing (optional)
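The modular pipeline (instruction, LLM planning, CLIP grounding, motion planning) can be prototyped before any model is connected by stubbing each stage. In the sketch below, `plan_steps` is a hypothetical stand-in for the LLM planner that simply splits an instruction on "then". The grounding step follows the CLIP idea of ranking image regions by cosine similarity to a text embedding. The embeddings are assumed to come from CLIP’s text and image encoders, which are not called here. All class and function names are illustrative.

```python
import math
from dataclasses import dataclass


@dataclass
class Detection:
    label: str        # label of a candidate region (hypothetical detector output)
    position: tuple   # (x, y) of the region in the map frame, metres
    embedding: list   # image embedding of the region crop (assumed from CLIP)


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0


def plan_steps(instruction):
    """Hypothetical stand-in for the LLM task planner: split a compound
    instruction into sub-goals. A real system would query an LLM here."""
    return [s.strip() for s in instruction.split(" then ") if s.strip()]


def ground(text_embedding, detections):
    """CLIP-style grounding: choose the detection whose image embedding is
    most similar (cosine) to the text embedding of the sub-goal."""
    return max(detections, key=lambda d: cosine(text_embedding, d.embedding))


def run_pipeline(instruction, embed_text, detections):
    """Instruction -> plan -> grounded goals for a downstream motion planner."""
    goals = []
    for step in plan_steps(instruction):
        target = ground(embed_text(step), detections)
        goals.append((step, target.label, target.position))
    return goals
```

Each returned goal position would then be handed to the motion planner, e.g. as a navigation goal in ROS. Keeping the stages behind small functions makes it straightforward to swap the LLMs and VLMs that the work plan asks to compare.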

References

B.Sc. Thesis, Merisa Salkic – Smart conversations: Enhancing robotic task execution through advanced language models

Supervisor: Linus Nwankwo, M.Sc.;

Univ.-Prof. Dr Elmar Rückert

Start date: As soon as possible

Theoretical difficulty: mid

Practical difficulty: High

Abstract

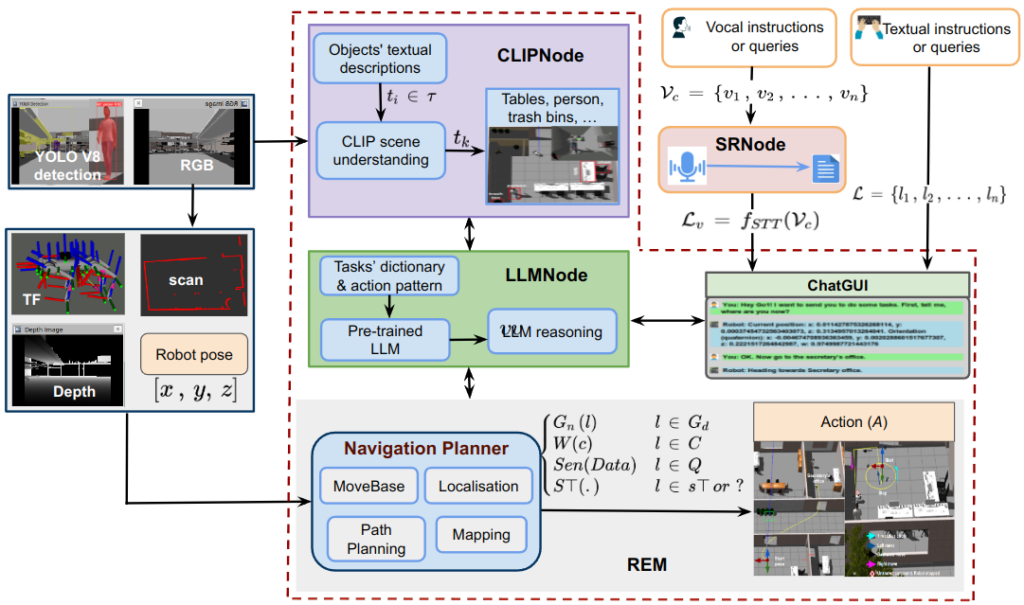

In this thesis, we aim to enhance the method proposed in [1] for robust natural human-autonomous agent interaction through verbal and textual conversations.

The primary focus will be to develop a system that can improve natural language conversations, understand the semantic context of the robot’s task environment, and abstract this information into actionable commands or queries. This will be achieved by leveraging pre-trained large language models (LLMs) such as GPT-4, vision-language models (VLMs) such as CLIP, and audio language models (ALMs) such as AudioLM.
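Abstracting a conversation into actionable commands usually requires a validation layer between the language model and the robot. The sketch below illustrates one such layer under clearly hypothetical assumptions. The LLM is assumed to be prompted to answer with a small JSON object (e.g. `{"action": "move", "linear": 0.2, "angular": 0.0}`). The action names and speed limits are invented for illustration and are not part of the method in [1].

```python
import json

# Hypothetical action schema and safety limits (assumptions for illustration).
ALLOWED_ACTIONS = {"move", "stop", "query"}
MAX_LINEAR = 0.5   # m/s
MAX_ANGULAR = 1.0  # rad/s


def clamp(value, limit):
    """Limit value to the symmetric range [-limit, limit]."""
    return max(-limit, min(limit, value))


def to_command(llm_reply):
    """Turn a raw LLM reply into a validated command dict or raise ValueError."""
    try:
        data = json.loads(llm_reply)
    except json.JSONDecodeError as exc:
        raise ValueError(f"reply is not valid JSON: {exc}") from exc
    if not isinstance(data, dict):
        raise ValueError("reply must be a JSON object")
    action = data.get("action")
    if action not in ALLOWED_ACTIONS:
        raise ValueError(f"unsupported action: {action!r}")
    if action == "move":
        # Clamp velocities so a hallucinated value cannot exceed the limits.
        linear = clamp(float(data.get("linear", 0.0)), MAX_LINEAR)
        angular = clamp(float(data.get("angular", 0.0)), MAX_ANGULAR)
        return {"action": "move", "linear": linear, "angular": angular}
    if action == "query":
        return {"action": "query", "text": str(data.get("text", ""))}
    return {"action": "stop"}
```

Rejecting or clamping malformed replies keeps the robot’s behaviour bounded regardless of which LLM is plugged in. This matters when comparing GPT-4 with alternatives such as LLaMA.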

Tentative Work Plan

To achieve the objectives, the following concrete tasks will be focused on:

- Initialisation and Background:

- Study the concept of LLMs, VLMs, and ALMs.

- Investigate how LLMs, VLMs, and ALMs can be grounded for autonomous robotic tasks.

- Familiarise yourself with the methods at the project website – https://linusnep.github.io/MTCC-IRoNL/.

- Set up and familiarise yourself with the simulation environment:

- Build a robot model (URDF) for the simulation (optional if you wish to use the existing one).

- Set up the ROS framework for the simulation (Gazebo, Rviz).

- Recommended programming tools: C++, Python, Matlab.

- Coding

- Improve the existing code of the method proposed in [1] (to be provided to the student) to incorporate the aforementioned modalities.

- Integrate other LLMs (e.g., LLaMA) and VLMs (e.g., GLIP) into the framework and compare their performance with the baseline (GPT-4 and CLIP).

- Intermediate Presentation:

- Present the results of your background study or the work you have done so far.

- Detailed planning of the next steps.

- Simulation & Real-World Testing (If Possible):

- Test your implemented model with a Gazebo-simulated quadruped or differential drive robot.

- Perform the real-world testing of the developed framework with our Unitree Go1 quadruped robot or with our Segway RMP 220 Lite robot.

- Analyse and compare the model’s performance in real-world scenarios versus simulations across the different LLM and VLM pipelines.

- Optimize the Framework for Optimal Performance and Efficiency (Optional):

- Evaluate the model to identify performance bottlenecks within the robot’s task environment.

- Documentation and Thesis Writing:

- Document the entire process, methodologies, and tools used.

- Analyse and interpret the results.

- Draft the project report or thesis, ensuring that the primary objectives are achieved.

- Research Paper Writing (optional)

Related Work

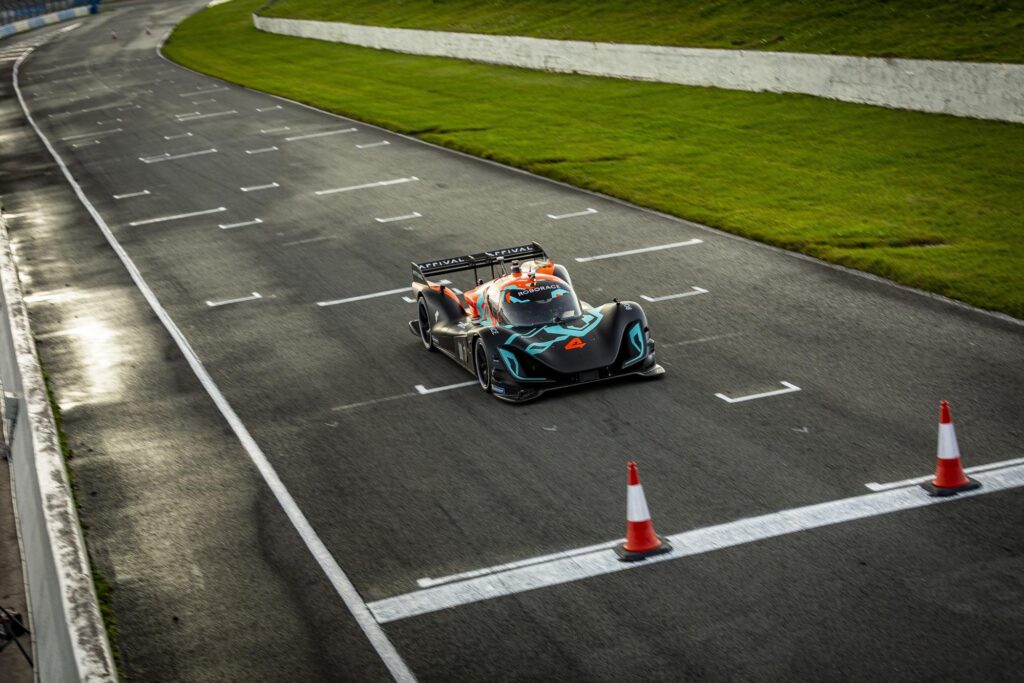

B.Sc. Thesis – Philipp Zeni – Precision in Motion: ML-Enhanced Race Course Identification for Formula Student Racing

Supervisor: Linus Nwankwo, M.Sc.;

Univ.-Prof. Dr Elmar Rückert

Start date: 30th October 2023

Theoretical difficulty: mid

Practical difficulty: High

Abstract

This thesis explores machine learning techniques for analysing onboard recordings from the TU Graz Racing Team, a prominent Formula Student team. The main goal is to design and train an end-to-end machine learning model to autonomously discern race courses based on sensor observations.

Further, this thesis seeks to address the following research questions:

- Can track markers (cones) be reliably detected and segmented from onboard recordings?

- Does the delineated racing track provide an adequate level of accuracy to support autonomous driving, minimizing the risk of accidents?

- How well does a neural network trained on simulated data adapt to real-world situations?

- Can the neural network ensure real-time processing in high-speed scenarios surpassing 100 km/h?

Tentative Work Plan

To achieve the objectives, the following concrete tasks will be focused on:

- Thesis initialisation and literature review:

- Define the scope and boundaries of your work.

- Study the existing projects in [1] and [2] to identify research gaps and applicable methodologies.

- Set up and familiarize yourself with the simulation environment:

- Build the car model (URDF) for the simulation (optional if you wish to use the existing one)

- Set up the ROS framework for the simulation (Gazebo, Rviz)

- Recommended programming tools: C++, Python, Matlab

- Data acquisition and preprocessing (3D Lidar and RGB-D data)

- Collect onboard recordings and sensor data from the TU Graz Racing track.

- Augment the data with additional simulated recordings using ROS, if necessary.

- Preprocess and label the data for machine learning (ML). This includes segmenting tracks, markers, and other relevant features.

- Intermediate presentation:

- Present the results of the literature study or what has been done so far

- Detailed planning of the next steps

- ML Model Development:

- Design the initial neural network architecture.

- Train the model using the preprocessed data.

- Evaluate model performance using metrics like accuracy, precision, recall, etc.

- Iteratively refine the model based on the evaluation results.

- Real-world Testing (If Possible):

- Implement the trained model on a real vehicle’s onboard computer.

- Test the vehicle in a controlled environment, ensuring safety measures are in place.

- Analyze and compare the model’s performance in real-world scenarios versus simulations.

- Optimization for Speed and Efficiency (Optional):

- Profile the model to identify performance bottlenecks.

- Optimize the neural network for real-time performance, especially for high-speed scenarios

- Documentation and B.Sc. thesis writing:

- Document the entire process, methodologies, and tools used.

- Analyze and interpret the results.

- Draft the thesis, ensuring that at least two of the research questions are addressed.

- Research paper writing (optional)
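To answer the first research question, metrics like precision and recall need a rule for when a detected cone counts as correct. One common choice, assumed here, is to match each predicted cone to at most one ground-truth cone within a fixed radius, taking the closest pairs first. The 0.5 m radius, the greedy matching, and the function names are illustrative assumptions, not part of the thesis specification.

```python
import math


def match_cones(predicted, ground_truth, max_dist=0.5):
    """Greedily match predicted cone positions to ground-truth positions.

    predicted, ground_truth: lists of (x, y) positions in metres.
    max_dist: matching radius in metres (assumed value).
    Returns (true_positives, false_positives, false_negatives).
    """
    # All candidate pairs within the radius, closest first.
    pairs = sorted(
        (math.dist(p, g), i, j)
        for i, p in enumerate(predicted)
        for j, g in enumerate(ground_truth)
        if math.dist(p, g) <= max_dist
    )
    used_pred, used_gt = set(), set()
    for _, i, j in pairs:
        # Each predicted and each ground-truth cone may be matched only once.
        if i not in used_pred and j not in used_gt:
            used_pred.add(i)
            used_gt.add(j)
    tp = len(used_pred)
    return tp, len(predicted) - tp, len(ground_truth) - tp


def precision_recall_f1(tp, fp, fn):
    """Standard detection metrics from match counts (0.0 when undefined)."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1
```

Averaging these numbers over many frames, separately for simulated and real recordings, gives a direct measure for the sim-to-real question as well.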

Related Work

[4] Z. Lu, C. Zhang, H. Zhang, Z. Wang, C. Huang and Y. Ji, “Deep Reinforcement Learning Based Autonomous Racing Car Control With Priori Knowledge,” 2021 China Automation Congress (CAC), Beijing, China, 2021, pp. 2241-2246, doi: 10.1109/CAC53003.2021.9728289.

[5] J. Kabzan, L. Hewing, A. Liniger and M. N. Zeilinger, “Learning-Based Model Predictive Control for Autonomous Racing,” in IEEE Robotics and Automation Letters, vol. 4, no. 4, pp. 3363-3370, Oct. 2019, doi: 10.1109/LRA.2019.2926677.

M.Sc. Thesis, Stefan Maintinger – Map-based and map-less mobile navigation via deep reinforcement learning in dynamic environments

Supervisor: Linus Nwankwo, M.Sc.;

Univ.-Prof. Dr Elmar Rückert

Start date: 5th September 2022

Theoretical difficulty: mid

Practical difficulty: mid

Abstract

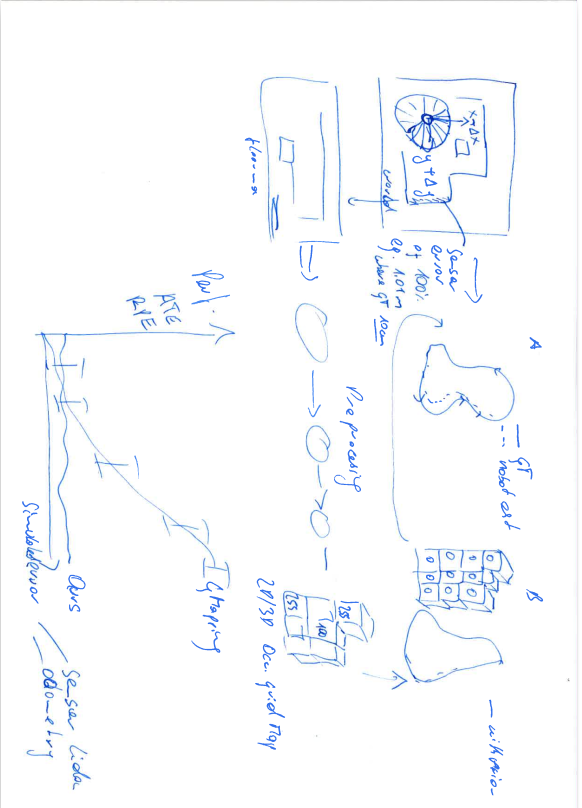

For over 20 years, the simultaneous localisation and mapping (SLAM) approach has been widely used to achieve autonomous navigation objectives. The SLAM problem is the problem of building a map of the environment while simultaneously estimating the robot’s position relative to the map, given noisy sensor observations and a series of control data. Recently, mapless approaches based on deep reinforcement learning have been proposed. In these approaches, the agent (robot) learns a navigation policy from sensor data and control data alone, without a prior map of the task environment. In this thesis, we will evaluate the performance of both approaches in a crowded, dynamic environment using our open-source differential-drive open-shuttle mobile robot.
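In the mapless approach, the agent is trained by maximising a reward signal rather than by consulting a map. The sketch below shows one frequently used shaping scheme, assumed here for illustration and not taken from [1] or the other works listed. It rewards progress towards the goal, gives a terminal bonus on arrival, and penalises collisions. All constants and the function name are hypothetical.

```python
import math

# Illustrative reward constants (assumptions, to be tuned in the thesis).
GOAL_RADIUS = 0.3       # m: distance at which the goal counts as reached
COLLISION_DIST = 0.2    # m: minimum laser range treated as a collision
R_GOAL, R_COLLISION = 100.0, -100.0
PROGRESS_GAIN = 10.0
STEP_PENALTY = -0.1     # small per-step cost that discourages dawdling


def step_reward(prev_pos, pos, goal, min_scan_range):
    """Return (reward, episode_done) for one transition.

    Uses only the goal position, the robot position (e.g. from odometry),
    and the minimum laser range; no map of the environment is needed.
    """
    if min_scan_range < COLLISION_DIST:
        return R_COLLISION, True
    d_prev = math.dist(prev_pos, goal)
    d_now = math.dist(pos, goal)
    if d_now < GOAL_RADIUS:
        return R_GOAL, True
    # Dense shaping term: reward the reduction in distance to the goal.
    return PROGRESS_GAIN * (d_prev - d_now) + STEP_PENALTY, False
```

The dense progress term speeds up learning compared with a sparse goal-only reward. Its weight, however, influences the trade-off between path length and distance to obstacles, so it should be reported alongside the evaluation metrics.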

Tentative Work Plan

To achieve our objective, the following concrete tasks will be focused on:

- Literature research and a general understanding of the field

- mobile robotics and industrial use cases

- Overview of map-based autonomous navigation (SLAM & Path planning)

- Overview of the mapless autonomous navigation approach with deep reinforcement learning

- Set up and familiarize yourself with the simulation environment

- Build the robot model (URDF) for the simulation (optional if you wish to use the existing one)

- Set up the ROS framework for the simulation (Gazebo, Rviz)

- Recommended programming tools: C++, Python, Matlab

- Intermediate presentation:

- Presenting the results of the literature study

- Possibility to ask questions about the theoretical background

- Detailed planning of the next steps

- Define key performance/quality metrics for evaluation:

- Time to reach the desired goal

- Average/mean speed

- Path smoothness

- Obstacle avoidance/distance to obstacles

- Computational requirement

- Success rate

- etc.

- Assessment and execution:

- Compare the results from both map-based and map-less approaches on the above-defined evaluation metrics.

- Validation:

- Validate both approaches in a real-world scenario using our open-source open-shuttle mobile robot.

- Furthermore, the following optional goals are planned:

- Develop a hybrid approach combining both the map-based and the map-less methods.

- M.Sc. thesis writing

- Research paper writing (optional)
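Several of the metrics defined above can be computed directly from logged runs, which keeps the comparison between the map-based and map-less approaches reproducible. The sketch below makes four assumptions:

- each run is logged as timestamps, 2D positions, and nearest-obstacle distances;
- the log ends when the goal is reached;
- path smoothness is measured as the total absolute heading change, which is one definition among several;
- computational requirements (CPU, memory) are measured separately with system monitoring tools.

```python
import math


def trajectory_metrics(stamps, poses, obstacle_dists):
    """Compute evaluation metrics for one navigation run.

    stamps: timestamps in seconds; poses: (x, y) positions in metres, one per
    stamp; obstacle_dists: distance to the nearest obstacle at each stamp
    (e.g. the minimum laser range). Assumes the log ends at the goal.
    """
    duration = stamps[-1] - stamps[0]
    segments = list(zip(poses, poses[1:]))
    length = sum(math.dist(a, b) for a, b in segments)
    # Heading of each non-zero segment; stationary samples are skipped.
    headings = [math.atan2(b[1] - a[1], b[0] - a[0]) for a, b in segments if a != b]
    turning = 0.0
    for h0, h1 in zip(headings, headings[1:]):
        # Wrap the heading difference into [-pi, pi] before taking its size.
        turning += abs(math.atan2(math.sin(h1 - h0), math.cos(h1 - h0)))
    return {
        "time_to_goal_s": duration,
        "path_length_m": length,
        "mean_speed_mps": length / duration if duration > 0 else 0.0,
        "total_turning_rad": turning,   # lower means a smoother path
        "min_obstacle_dist_m": min(obstacle_dists),
    }


def success_rate(outcomes):
    """Fraction of runs that reached the goal (outcomes: list of bools)."""
    return sum(outcomes) / len(outcomes) if outcomes else 0.0
```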

Related Work

[1] Xue, Honghu; Hein, Benedikt; Bakr, Mohamed; Schildbach, Georg; Abel, Bengt; Rueckert, Elmar, “Using Deep Reinforcement Learning with Automatic Curriculum Learning for Mapless Navigation in Intralogistics”, In: Applied Sciences (MDPI), Special Issue on Intelligent Robotics, 2022.

[2] Han Hu; Kaicheng Zhang; Aaron Hao Tan; Michael Ruan; Christopher Agia; Goldie Nejat, “Sim-to-Real Pipeline for Deep Reinforcement Learning for Autonomous Robot Navigation in Cluttered Rough Terrain”, IEEE Robotics and Automation Letters (Volume 6, Issue 4, October 2021).

[3] Md. A. K. Niloy; Anika Shama; Ripon K. Chakrabortty; Michael J. Ryan; Faisal R. Badal; Z. Tasneem; Md H. Ahamed; S. I. Mo, “Critical Design and Control Issues of Indoor Autonomous Mobile Robots: A Review”, IEEE Access (Volume 9), February 2021.

[4] Ning Wang, Yabiao Wang, Yuming Zhao, Yong Wang and Zhigang Li, “Sim-to-Real: Mapless Navigation for USVs Using Deep Reinforcement Learning”, Journal of Marine Science and Engineering, 2022, 10, 895. https://doi.org/10.3390/jmse10070895

Master Thesis

The final master thesis document can be downloaded here.

3D perception and SLAM using geometric and semantic information for mine inspection with quadruped robot

Supervisor: Linus Nwankwo, M.Sc.;

Univ.-Prof. Dr Elmar Rückert

Start date: As soon as possible

Theoretical difficulty: mid

Practical difficulty: high

Abstract

Traditional mine inspection is inefficient in terms of time, terrain, and coverage. In contrast, this project/thesis aims to investigate novel 3D perception and SLAM methods that use geometric and semantic information for real-time mine inspection.

We propose to develop a SLAM approach that takes into account the terrain of the mining site and the sensor characteristics to ensure complete coverage of the environment while minimizing traversal time.

Tentative Work Plan

To achieve our objective, the following concrete tasks will be focused on:

Study the concept of 3D perception and SLAM for mine inspection, followed by algorithm development, system integration, and a real-world demonstration using the Unitree Go1 quadrupedal robot.

- Set up and familiarize yourself with the simulation environment:

- Build the robot model (URDF) for the simulation (optional if you wish to use the existing one)

- Set up the ROS framework for the simulation (Gazebo, Rviz)

- Recommended programming tools: C++, Python, Matlab

- Develop a novel SLAM system for the quadrupedal robot to navigate, map and interact with challenging real-world environments:

- 2D/3D mapping in complex indoor/outdoor environments

- Localization using either Monte Carlo localization or an extended Kalman filter

- Complete coverage path planning

- Intermediate presentation:

- Presenting the results of the literature study

- Possibility to ask questions about the theoretical background

- Detailed planning of the next steps

- Implementation:

- Simulate the achieved results in a virtual environment (Gazebo, Rviz, etc.)

- Real-time testing on the Unitree Go1 quadrupedal robot.

- Evaluate the performance in various challenging real-world environments, including outdoor terrains, urban environments, and indoor environments with complex structures.

- M.Sc. thesis or research paper writing (optional)
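The Monte Carlo option for localization rests on a predict-weight-resample cycle, which can be illustrated in one dimension. The sketch below makes four assumptions:

- the robot moves along a corridor, and a map model `expected_measurement(x)` returns the range reading expected at pose `x`;
- motion noise is Gaussian;
- sensor noise is Gaussian;
- particles are resampled with low-variance (systematic) resampling.

This is plain MCL, not the adaptive variant (AMCL) available in ROS, which additionally adapts the number of particles via KLD sampling. Function and parameter names are illustrative.

```python
import math
import random


def gaussian(x, mu, sigma):
    """Normal probability density of x given mean mu and std sigma."""
    return math.exp(-0.5 * ((x - mu) / sigma) ** 2) / (sigma * math.sqrt(2 * math.pi))


def mcl_step(particles, control, measurement, expected_measurement,
             motion_noise, sensor_noise, rng):
    """One predict-update-resample cycle of 1D Monte Carlo localization.

    particles: list of position hypotheses; control: commanded displacement;
    expected_measurement(x): sensor reading predicted by the map at pose x.
    """
    # 1. Prediction: propagate every particle through the noisy motion model.
    moved = [p + control + rng.gauss(0.0, motion_noise) for p in particles]
    # 2. Correction: weight each particle by the measurement likelihood.
    weights = [gaussian(measurement, expected_measurement(p), sensor_noise) for p in moved]
    total = sum(weights)
    if total == 0.0:
        return moved  # no particle explains the reading; keep the prediction
    weights = [w / total for w in weights]
    # 3. Low-variance (systematic) resampling.
    n = len(moved)
    step = 1.0 / n
    r = rng.uniform(0.0, step)
    resampled, c, i = [], weights[0], 0
    for m in range(n):
        u = r + m * step
        while u > c and i < n - 1:
            i += 1
            c += weights[i]
        resampled.append(moved[i])
    return resampled
```

Usage, assuming a wall at 10 m and a range sensor measuring the distance to it:

```python
rng = random.Random(0)
particles = [rng.uniform(0.0, 10.0) for _ in range(500)]  # global uncertainty
true_x = 2.0
for _ in range(5):
    true_x += 1.0
    particles = mcl_step(particles, 1.0, 10.0 - true_x, lambda x: 10.0 - x, 0.05, 0.2, rng)
estimate = sum(particles) / len(particles)
```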

Related Work

Localization and Mapping’, http://ais.informatik.uni-freiburg.de/teaching/ss12/robotics/slides/12-slam.pdf

[2] V. Barrile, G. Candela, A. Fotia, ‘Point cloud segmentation using image processing techniques for structural analysis’, The International Archives of the Photogrammetry, Remote Sensing and Spatial Information Sciences, Volume XLII-2/W11, 2019.

[3] Łukasz Sobczak, Katarzyna Filus, Adam Domanski and Joanna Domanska, ‘LiDAR Point Cloud Generation for SLAM Algorithm Evaluation’, Sensors 2021, 21, 3313. https://doi.org/10.3390/s21103313.

M.Sc. Project – Mekonoude Etienne Kpanou: Mobile robot teleoperation based on human finger direction and vision

Supervisors: Univ.-Prof. Dr. Elmar Rückert and Linus Nwankwo, M.Sc.

Theoretical difficulty: mid

Practical difficulty: mid

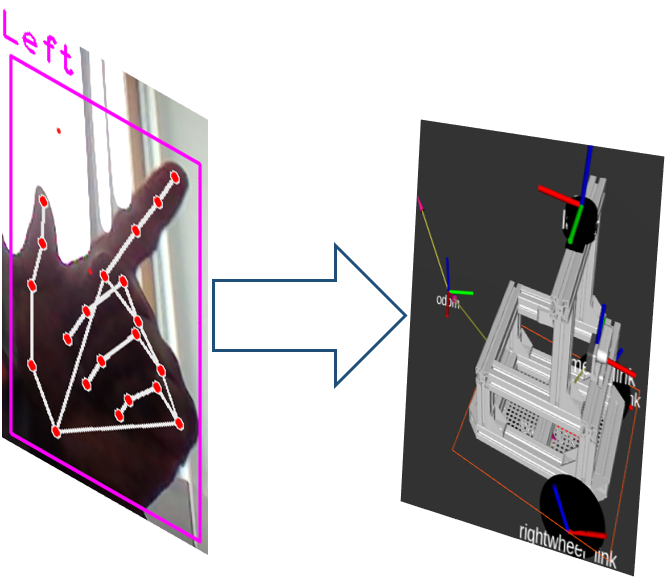

Humans can naturally give directions (go forward, back, right, left, etc.) simply by pointing a finger in the desired direction, effortlessly and without saying a word. However, training a mobile robot to understand such gestures remains an open problem.

In the context of this thesis, we propose finger-pose-based mobile robot navigation to make human-robot interaction as natural as possible. This could be achieved by observing the Cartesian pose of the human’s fingers with an RGB-D camera and translating it into linear and angular velocity commands for the robot. To this end, we will leverage computer vision algorithms and the ROS framework.
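The translation from finger pose to velocity commands can be prototyped independently of the vision part. The sketch below shows one possible mapping. It assumes the hand tracker already provides the 3D positions of a finger’s base joint and tip, transformed into the robot’s base frame (x forward, y left, z up, following the ROS REP 103 convention). The gains, the dead zone, and the names `Twist2D` and `finger_to_twist` are hypothetical. With this mapping, pointing forward drives ahead, pointing backward reverses, and pointing sideways turns the robot in place.

```python
import math
from dataclasses import dataclass


@dataclass
class Twist2D:
    linear: float   # m/s, forward positive
    angular: float  # rad/s, counter-clockwise (left) positive


def finger_to_twist(base, tip, v_max=0.3, w_max=0.8, min_len=0.02):
    """Map a pointing finger to a velocity command.

    base, tip: (x, y, z) positions in metres of the finger's base joint and
    tip, already expressed in the robot's base frame (x forward, y left, z up).
    The pointing vector is projected onto the floor plane by ignoring z.
    """
    forward = tip[0] - base[0]
    left = tip[1] - base[1]
    if math.hypot(forward, left) < min_len:
        return Twist2D(0.0, 0.0)  # finger points mostly up or down: stop
    angle = math.atan2(left, forward)  # pointing direction relative to heading
    return Twist2D(v_max * math.cos(angle), w_max * math.sin(angle))
```

In ROS, `linear` and `angular` would be written to the `linear.x` and `angular.z` fields of a `geometry_msgs/Twist` message. The camera-to-base transform would typically come from tf.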

The prerequisites for this project are a basic understanding of Python or C++ programming, OpenCV, and ROS.

Tentative work plan

In the course of this thesis, the following concrete tasks will be focused on:

- study the concept of visual navigation of mobile robots

- develop a hand detection and tracking algorithm in Python or C++

- apply the developed algorithm to navigate a simulated mobile robot

- real-time experimentation

- thesis writing

References

- Shuang Li, Jiaxi Jiang, Philipp Ruppel, Hongzhuo Liang, Xiaojian Ma, Norman Hendrich, Fuchun Sun, Jianwei Zhang, “A Mobile Robot Hand-Arm Teleoperation System by Vision and IMU”, IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), October 25-29, 2020, Las Vegas, NV, USA.

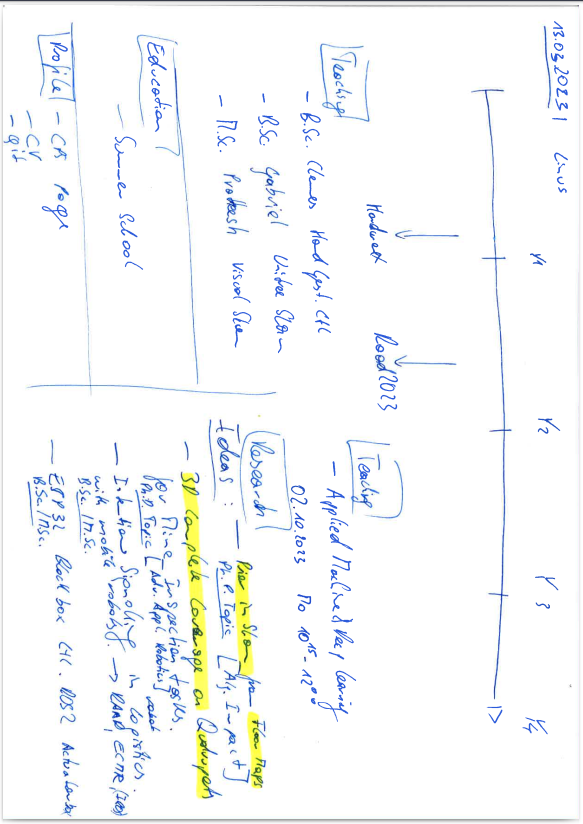

13.03.2023 – Innovative Research Discussion

Meeting notes on the 13th of March, 2023

Location: Chair of CPS

Date & Time: 13th March, 2023, 11:45 to 12:45

Participants: Univ.-Prof. Dr. Elmar Rueckert, Linus Nwankwo, M.Sc.

Agenda

- General Discussion

- Discussion on research progress

- Next action

General Discussion

- The applied machine and deep learning course starts on 02.10.2023.

- Study publication [1] in preparation for the next piece of work.

Do next

- 3D complete coverage with a quadruped robot for mine inspection tasks.

- Priors for SLAM from architectural floor plans.

Reference

Gabriel Brinkmann

Bachelor Thesis Student at the Montanuniversität Leoben

Short bio: Gabriel is a Bachelor’s student in Mechanical Engineering at Montanuniversität Leoben and, as of March 2023, is writing his Bachelor’s thesis at the Chair of Cyber-Physical Systems.

Research Interests

- Robotics

Thesis

Contact

Gabriel Brinkmann

Master Thesis Student at the Chair of Cyber-Physical Systems

Montanuniversität Leoben

Franz-Josef-Straße 18,

8700 Leoben, Austria

Email: