Meeting notes on the 4th of October, 2022

Location: Chair of CPS

Date & Time: 7th October, 2022, 09:15 am to 10:25 am

Participants: Univ.-Prof. Dr. Elmar Rueckert, Linus Nwankwo, M.Sc.

Agenda

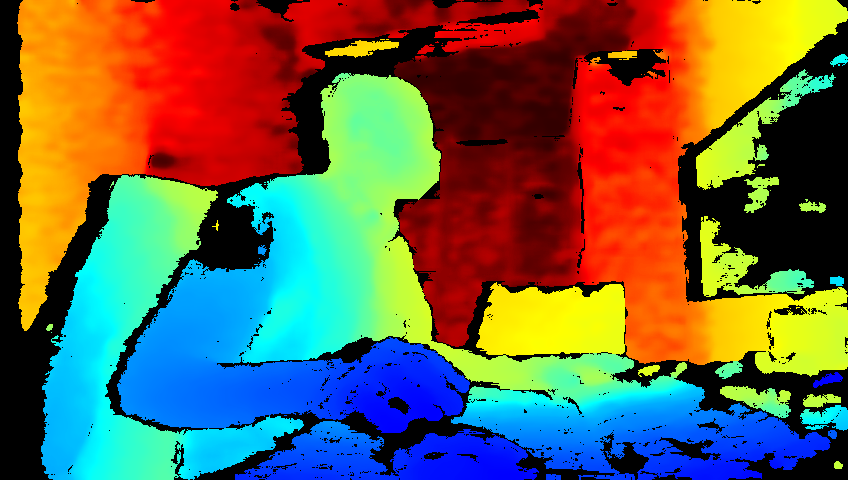

- Discussion on ROS-Mobile Control

- Discussion on ODrive torque control

ROS-Mobile and ODrive torque control

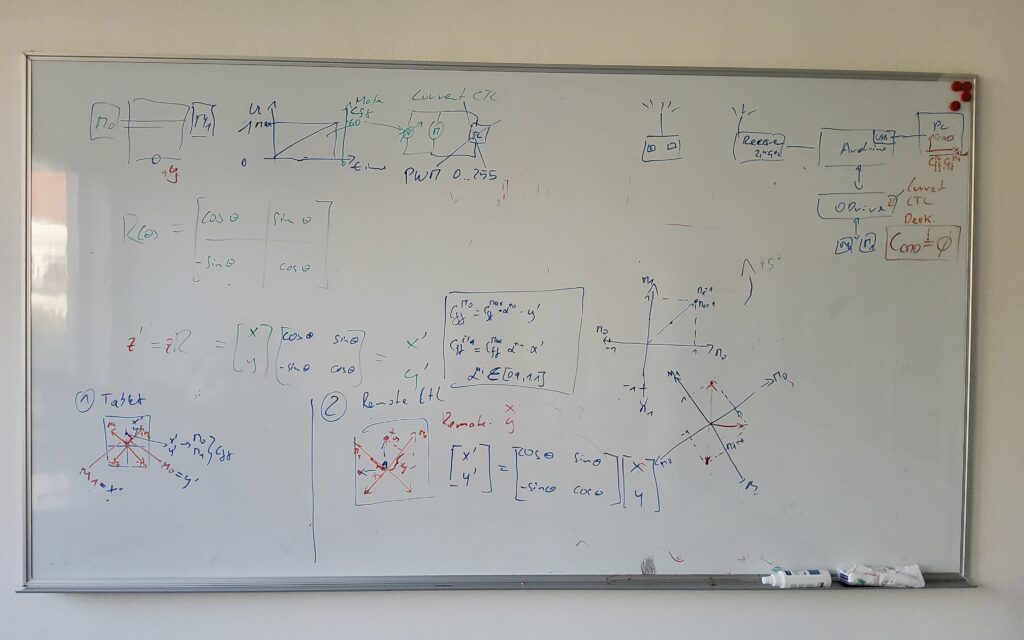

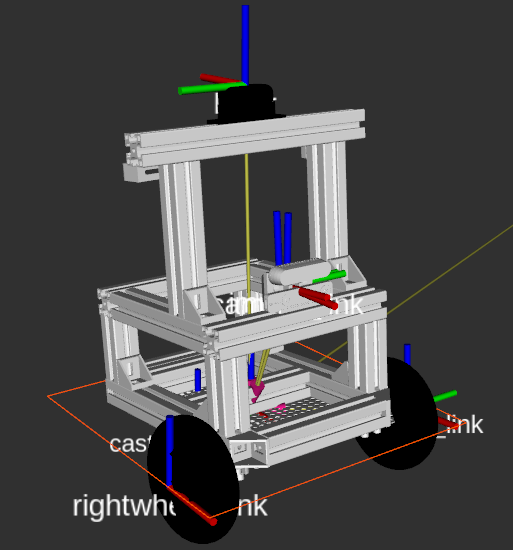

- Re-implement the o2s control approach to accommodate the information in the attached figure.

- Write the Arduino code, taking the rotation matrices into account.

- Implement the open-loop torque control approach.
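
As a starting point for the last two action items, the following is a minimal sketch only. It assumes a planar (2D) command vector, as might come from a ROS-Mobile joystick, that is rotated into another frame with a standard 2D rotation matrix, and an open-loop torque command obtained by dividing the desired torque by a motor torque constant. The names `Vec2`, `rotate2d`, `torqueToCurrent`, and all parameters are hypothetical; the actual frames, angles, and motor constants are those in the attached figures and are not reproduced here.

```cpp
#include <math.h>  // cosf, sinf (available in both Arduino and standard C++)

// Hypothetical 2D vector type for a planar command (e.g. joystick x/y).
struct Vec2 {
    float x;
    float y;
};

// Rotate v counter-clockwise by theta (radians) using the 2D rotation matrix
//   [ cos(theta)  -sin(theta) ]
//   [ sin(theta)   cos(theta) ]
Vec2 rotate2d(Vec2 v, float theta) {
    const float c = cosf(theta);
    const float s = sinf(theta);
    Vec2 out;
    out.x = c * v.x - s * v.y;
    out.y = s * v.x + c * v.y;
    return out;
}

// Open-loop torque command (no torque feedback): convert a desired torque
// in Nm to a current setpoint in A using an assumed torque constant (Nm/A),
// then clamp the result to +/- currentLimit for safety.
float torqueToCurrent(float torqueNm, float torqueConstant, float currentLimit) {
    float current = torqueNm / torqueConstant;
    if (current > currentLimit) current = currentLimit;
    if (current < -currentLimit) current = -currentLimit;
    return current;
}
```

In an Arduino sketch, `rotate2d` would be called inside `loop()` on each incoming command before the result is converted to a torque setpoint; how that setpoint is sent to the ODrive is left open here.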