3 positions for full-time University Assistants at the Chair of Cyber-Physical-Systems in the Department of Product Engineering, starting at the earliest possible date or on 15th of June, for a 4-year term of employment. Salary group B1 according to Uni-KV; minimum monthly salary excl. SZ: € 2.971,50 for 40 hours per week (14 times a year). The actual classification depends on creditable, job-specific previous experience.

The following doctoral theses are available:

Fundamentals of learning methods for autonomous systems.

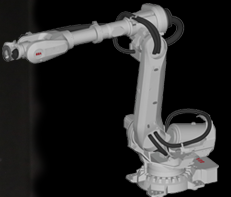

The goal is to make autonomous learning systems such as industrial robot arms, humanoid or mobile robots suitable for everyday use. To achieve this, large amounts of data must be processed in a few milliseconds (Big Data for Control) and efficient learning methods must be developed. In addition, safe human-machine interaction must be ensured when dealing with the autonomous systems. For this purpose, novel stochastic motion learning methods and model representations for compliant humanoid robots will be developed.

Fundamentals of stochastic neural networks.

Modern deep neural networks can process large amounts of data and calculate complex predictions. These methods are also increasingly used in autonomous systems. A major challenge here is to integrate measurement and model uncertainties in the calculations and predictions. For this purpose, novel neural networks will be developed that are based on stochastic computations that enrich predictions with uncertainty estimations. The neural networks will be used in learning tasks with robotic arms.

Robot learning with embedded systems.

Modern robot systems are equipped with complex sensors and actuators. However, they lack the necessary control and learning methods to solve versatile tasks. The goal of this thesis is to develop novel AI-based sensor systems and to integrate them into autonomous systems. The developed algorithms will be deployed on mobile computers and tested in realistic industrial applications with robotic arms.

What we offer

The opportunity to work on research ideas in exciting modern topics in artificial intelligence and robotics, to develop your own ideas, to be part of a young and newly formed team, to go on international research trips, and to receive targeted career guidance for a successful scientific career.

Job requirements

Completed master’s degree in computer science, physics, telematics, statistics, mathematics, electrical engineering, mechanics, robotics, or an equivalent education relevant to the desired qualification. Willingness and ability to carry out scientific research, including publications, with the possibility of writing a dissertation.

Desired additional qualifications

Programming experience in one of the languages C, C++, C#, Java, MATLAB, Python or similar. Experience with Linux or ROS is advantageous. Good English skills and willingness to travel for research and to give technical presentations.

Application

Application deadline: June 30th, 2021

Online Application via: Montanuniversität Leoben Webpage

The Montanuniversität Leoben intends to increase the number of women on its faculty and therefore specifically invites applications from women. Among equally qualified applicants, women will receive preferential consideration.